and the distribution of digital products.

How Do We Teach Reinforcement Learning Agents Human Preferences?

- Abstract and Introduction

- Related Work

- Problem Definition

- Method

- Experiments

- Conclusion and References

A. Appendix

A.1. Full Prompts and A.2 ICPL Details

A.6 Human-in-the-Loop Preference

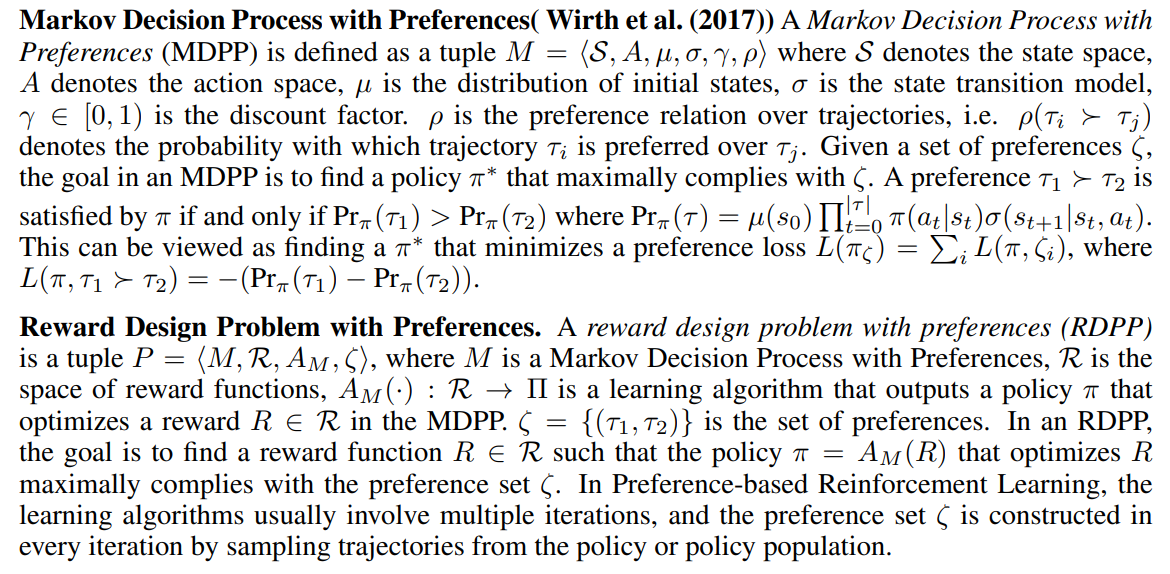

3 PROBLEM DEFINITION

Our goal is to design a reward function that can be used to train reinforcement learning agents that exhibit human-preferred behaviors. In reinforcement learning, it is usually hard to design reward functions that induce policies well aligned with human preferences.


:::info Authors:

(1) Chao Yu, Tsinghua University;

(2) Hong Lu, Tsinghua University;

(3) Jiaxuan Gao, Tsinghua University;

(4) Qixin Tan, Tsinghua University;

(5) Xinting Yang, Tsinghua University;

(6) Yu Wang, with equal advising from Tsinghua University;

(7) Yi Wu, with equal advising from Tsinghua University and the Shanghai Qi Zhi Institute;

(8) Eugene Vinitsky, with equal advising from New York University ([email protected]).

:::

:::info This paper is available on arXiv under a CC 4.0 license.

:::
