

On-Device AI: Making AI Models Deeper Allows Them to Run on Smaller Devices

On-device AI, and running large language models (LLMs) on smaller devices in particular, has been a key focus for AI industry leaders over the past few years. This area of research is among the most critical in AI, with the potential to reshape the role of AI, computers, and mobile devices in everyday life. The work happens behind the scenes, largely invisible to users, yet it mirrors the evolution of computers: from machines that once occupied entire rooms and were accessible only to governments and large corporations to the smartphones now comfortably hidden in our pockets.

Today, most large language models are deployed in cloud environments, where they can draw on the immense computational resources of data centers. These data centers are equipped with specialized hardware, such as GPUs, TPUs, and other AI accelerators, designed to handle the intensive workloads that LLMs require. But this reliance on the cloud brings significant challenges:

High Cost: Cloud services are expensive. Running LLMs at scale requires continuous access to high-powered servers, which can drive up operational costs. For startups or individual engineers, these costs can be prohibitive, limiting who can realistically take advantage of this powerful technology.

Data Privacy Concerns: When users interact with cloud-based LLMs, their data must be sent to remote servers for processing. This creates a potential vulnerability since sensitive information like personal conversations, search histories, or financial details could be intercepted or mishandled.

Environmental Impact: Cloud computing at this scale consumes vast amounts of energy. Data centers require continuous power not only for computation but also for cooling and maintaining infrastructure, which leads to a significant carbon footprint. With the global push toward sustainability, this issue must be addressed. For example, a recent report from Google showed a 48% increase in greenhouse gas emissions over the past five years, attributing much of this rise to the growing demands of AI technology.

That’s why the problem continues to draw the attention of industry leaders, who are investing significant resources in it, as well as of smaller research centers and open-source communities. The ideal solution would be to let users run these powerful models directly on their devices, bypassing the need for constant cloud connectivity. Doing so could reduce costs, enhance privacy, and decrease the environmental impact associated with AI. But this is easier said than done.

Most personal devices, especially smartphones, lack the computational power and memory to run full-scale LLMs. For example, an iPhone with 6 GB of RAM or an Android device with up to 12 GB of RAM is no match for the capabilities of cloud servers. Even the smallest model in Meta’s LLaMA 3.1 family, LLaMA-3.1 8B, needs roughly 16 GB of memory just to hold its weights at 16-bit precision, and realistically more is needed for decent performance without overloading the phone. Despite advances in mobile processors, the gap is still significant.
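The 16 GB figure follows from simple arithmetic, sketched below. The sketch assumes a nominal 8 billion parameters and counts only the weights; activations, the attention cache, and the operating system need additional memory. The function name is illustrative.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed just to store the weights, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_8B = 8e9  # nominal parameter count of an "8B" model (assumption)

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PARAMS_8B, bits):5.1f} GB")
```

At 16 bits per weight the result is 16 GB, already more than the 6 to 12 GB of RAM mentioned above, which is why both smaller architectures and lower-precision formats matter for phones.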

This is why the industry is focused on optimizing these models — making them smaller, faster, and more efficient without sacrificing too much performance.

This article explores key recent research papers and methods aimed at achieving this goal, highlighting where the field currently stands:

- Meta’s MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

- Huawei’s Kronecker Decomposition method for GPT compression

- “TQCompressor: Improving Tensor Decomposition Methods in Neural Networks via Permutations,” a recent research paper and open-source project that I co-authored. It improves the Kronecker decomposition method, allowing GPT models to be compressed by about 1.5x without significantly increasing time requirements.

This summer, Meta AI researchers introduced a new way to create efficient language models specifically for smartphones and other devices with limited resources and released a model called MobileLLM, built using this approach.

Instead of relying on models with billions or even trillions of parameters — like GPT-4 — Meta’s team focused on optimizing models with fewer than 1 billion parameters.

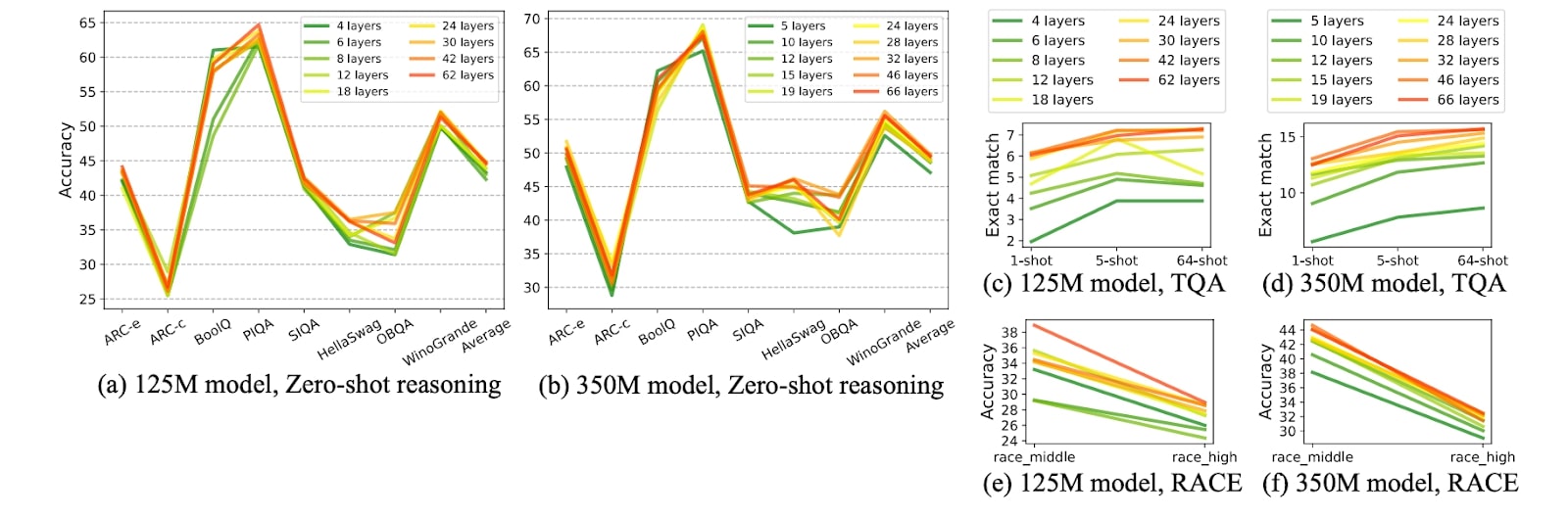

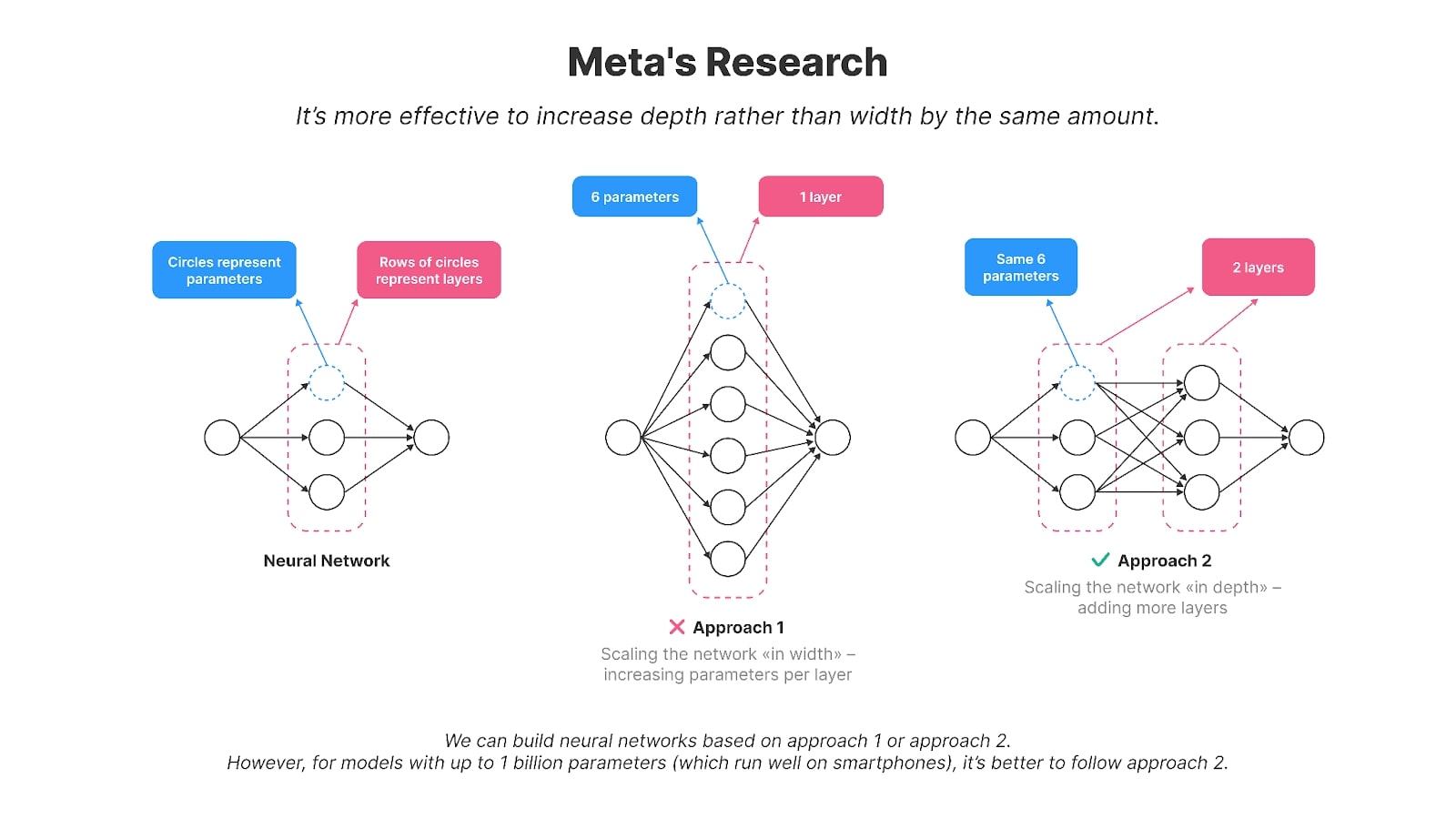

The authors found that scaling the model “in-depth” works better than “in-width” for smaller models of up to roughly 1 billion parameters, making them more suitable for smartphones. In other words, it’s more effective to have a higher number of smaller layers rather than a few large ones. For instance, their 125-million-parameter model, MobileLLM, has 30 layers, whereas GPT-2, BERT, and most other models with 100-200 million parameters typically have around 12 layers. Models with the same number of parameters but a higher layer count (as opposed to more parameters per layer) demonstrated better accuracy across several benchmarking tasks, such as WinoGrande and HellaSwag.
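To make the trade-off concrete, here is a rough sketch of how a fixed parameter budget can be spent on depth instead of width. It assumes a textbook transformer layer (four attention projections plus a feed-forward block with 4x expansion) and ignores embeddings, biases, and normalization. MobileLLM’s real layers differ in detail, so the numbers are illustrative and not the paper’s configuration.

```python
import math


def layer_params(d_model: int, ffn_mult: int = 4) -> int:
    """Approximate weight count of one standard transformer layer."""
    attention = 4 * d_model * d_model                 # Q, K, V, output projections
    feed_forward = 2 * ffn_mult * d_model * d_model   # up and down projections
    return attention + feed_forward


def width_for_budget(budget: int, n_layers: int, ffn_mult: int = 4) -> int:
    """Largest hidden size whose n_layers-deep stack stays within the budget."""
    per_layer_coeff = 4 + 2 * ffn_mult
    return math.isqrt(budget // (per_layer_coeff * n_layers))


wide_shallow = 12 * layer_params(768)            # 12 layers at GPT-2-small width
deep_width = width_for_budget(wide_shallow, 30)  # width affordable with 30 layers
deep_thin = 30 * layer_params(deep_width)

print(f"12 layers x width 768: {wide_shallow:,} weights")
print(f"30 layers x width {deep_width}: {deep_thin:,} weights")
```

Under these assumptions, 30 layers fit into the same budget as 12 layers once the hidden size shrinks from 768 to 485. Which of the two shapes is more accurate is an empirical question, and that is what the paper’s benchmarks address.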

Graphs from Meta’s research show that under comparable model sizes, deeper and thinner models generally outperform their wider and shallower counterparts across various tasks, such as zero-shot common sense reasoning, question answering, and reading comprehension.

Image credit: MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

Layer sharing is another technique used in the research to reduce parameters and improve efficiency. Instead of duplicating layers within the neural network, the weights of a single layer are reused multiple times. For example, the output of a layer can be fed straight back into that same layer as input. This cuts the number of parameters, since the traditional approach would need a separate copy of the layer for each pass. By reusing layers, the authors achieved significant efficiency gains without compromising performance.
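A toy sketch of the idea follows. Each “layer” here is just a small weight matrix followed by `tanh`, standing in for a full transformer block, and all names are hypothetical. The point is only that applying each layer twice keeps the depth of the computation while halving the number of stored weights.

```python
import math
import random


def make_layer(dim: int, rng: random.Random) -> list[list[float]]:
    """One toy layer: a dim x dim weight matrix."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(dim)]


def apply_layer(weights: list[list[float]], x: list[float]) -> list[float]:
    """y = tanh(W x), a stand-in for a real transformer block."""
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]


def forward(layers, x, repeats=1):
    """Run x through the stack, applying each layer `repeats` times in a row."""
    for weights in layers:
        for _ in range(repeats):
            x = apply_layer(weights, x)
    return x


def count_params(layers) -> int:
    return sum(len(row) for weights in layers for row in weights)


rng = random.Random(0)
dim = 8
unshared = [make_layer(dim, rng) for _ in range(4)]  # four distinct layers
shared = unshared[:2]                                # two layers, each used twice

x = [1.0] * dim
y_unshared = forward(unshared, x)          # 4 layer applications, 256 weights
y_shared = forward(shared, x, repeats=2)   # 4 layer applications, 128 weights

print(count_params(unshared), count_params(shared))
```

The two stacks compute different functions, so sharing is not free in general; whether accuracy holds up has to be checked by training, as the paper does.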

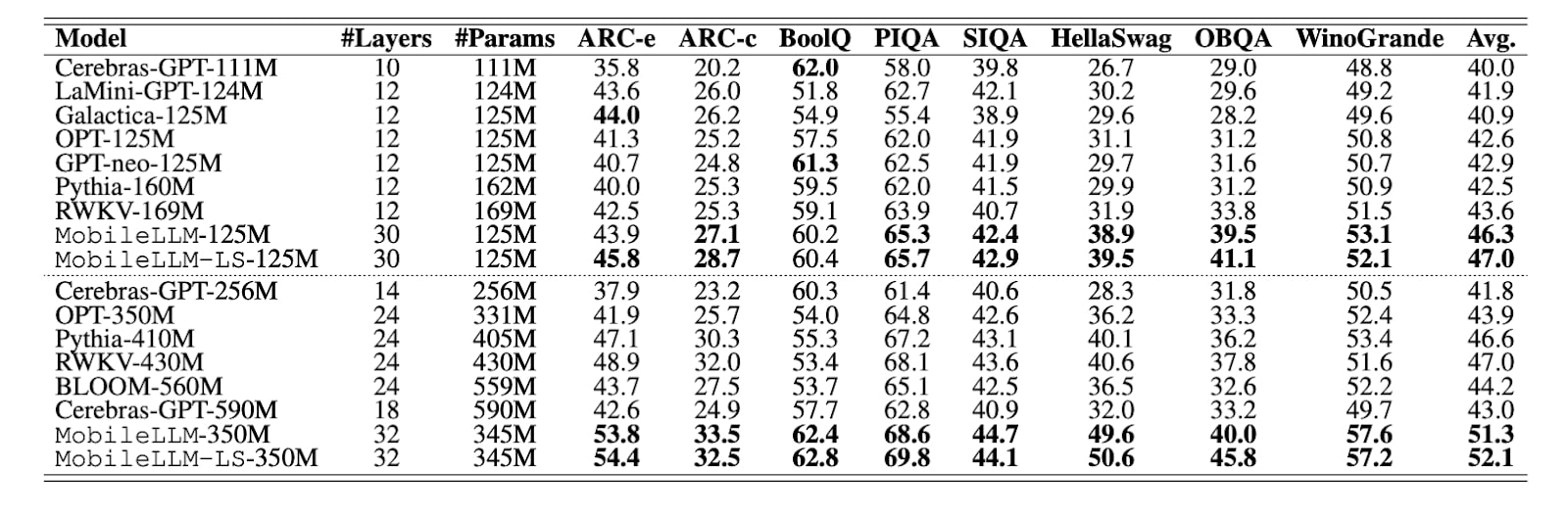

As shown in the table from the research, other models with 125M parameters typically have 10-12 layers, whereas MobileLLM has 30. MobileLLM outperforms the others on most benchmarks (with the benchmark leader highlighted in bold).

Image credit: MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

In their paper, Meta introduced the MobileLLM model in two versions — 125 million and 350 million parameters. They made the training code for MobileLLM publicly available on GitHub. Later, Meta also published 600 million, 1 billion, and 1.5 billion versions of the model.

These models showed impressive improvements in tasks like zero-shot commonsense reasoning, question answering, and reading comprehension, outperforming previous state-of-the-art methods. Moreover, fine-tuned versions of MobileLLM demonstrated their effectiveness in common on-device applications such as chat and API calls, making them particularly well-suited for the demands of mobile environments.

Meta’s message is clear: If we want models to work on mobile devices, they need to be created differently.

But this is rarely the case. Take the most popular models in the AI world, like LLaMA3, Qwen2, or Gemma-2: they don’t just have far more parameters; their layers are also much wider, which makes these models very difficult to run on mobile devices in practice.

Compressing existing LLMs

Meta’s recent research shifts away from compressing existing neural networks and presents a new approach to designing models specifically for smartphones. However, millions of engineers worldwide who aren’t building models from scratch (and let’s face it, that’s most of them) still have to work with those wide, parameter-heavy models. Compression isn’t just an option; it’s a necessity for them.

Here’s the thing: while Meta’s findings are groundbreaking, the reality is that open-source models aren’t necessarily being built with these principles in mind. Most cutting-edge models, including Meta’s own LLaMA, are still designed for large servers with powerful GPUs. For their size, these models have relatively few but much wider layers. For example, LLaMA3 8B has about 64 times as many parameters as MobileLLM-125M, even though both models have around 30 layers.

So, what’s the alternative? You could keep creating new models from scratch, tailoring them for mobile use. Or, you could compress existing ones.

When making these large, wide models more efficient for mobile devices, engineers often turn to a set of tried-and-true compression techniques. These methods are quantization, pruning, matrix decomposition, and knowledge distillation.

Quantization

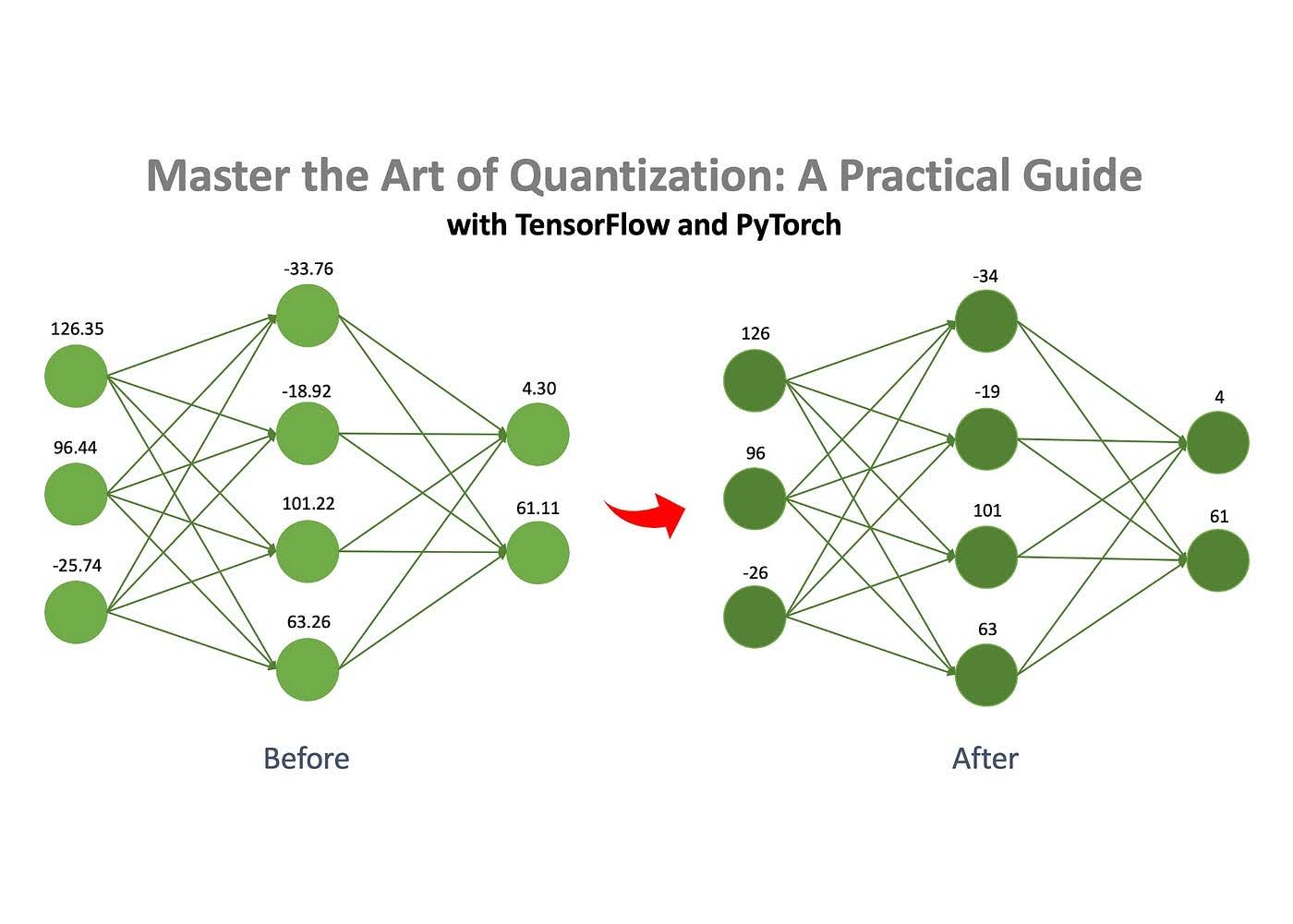

One of the most commonly used methods for neural network compression is quantization, which is known for being straightforward and effectively preserving performance.

Image credit: Jan Marcel Kezmann on Medium

The basic concept is that a neural network consists of numbers stored in matrices. These numbers can be stored in different formats, such as floating-point numbers or integers. You can drastically reduce the model’s size by converting these numbers from a higher-precision format, like float32 (4 bytes per number), to a lower-precision one, like int8 (1 byte per number). For example, a model that initially took up 100 MB could be compressed to just 25 MB using quantization.
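As a minimal sketch of the float32-to-int8 conversion, the snippet below uses symmetric per-tensor quantization with a single scale factor. This is one common scheme and is an assumption here; production toolkits offer several others. The weight values are made up for illustration.

```python
from array import array


def quantize_int8(weights: list[float]) -> tuple[array, float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = array("b", (round(w / scale) for w in weights))  # "b" = signed 8-bit
    return q, scale


def dequantize(q: array, scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.30, 0.07, 0.91, -0.55, 1.27, -0.02, 0.00]  # made-up values
fp32 = array("f", weights)            # "f" = 32-bit float storage
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

size_fp32 = len(fp32) * fp32.itemsize   # bytes used by float32 storage
size_int8 = len(q) * q.itemsize         # bytes used by int8 storage
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {size_fp32} bytes, int8: {size_int8} bytes")
print(f"largest rounding error: {max_error:.5f} (scale = {scale:.5f})")
```

Storage drops by a factor of four, matching the 100 MB to 25 MB example, and each weight moves by at most half a quantization step.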

Pruning

As mentioned, a neural network consists of a set of matrices filled with numbers. Pruning is the process of removing “unimportant” numbers, known as “weights,” from these matrices.

Because these weights contribute little to the output, removing them affects the model’s behavior only minimally, while memory and computational requirements can drop significantly.
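One simple way to decide which weights are “unimportant” is by magnitude: the weights closest to zero are removed first. The sketch below assumes that criterion, which is only one of several used in practice, and runs it on a made-up 3x3 matrix.

```python
def magnitude_prune(matrix: list[list[float]], sparsity: float) -> list[list[float]]:
    """Zero out roughly the given fraction of weights with the smallest |value|.

    Ties at the threshold are all pruned, so slightly more weights than
    requested may be removed.
    """
    flat = sorted(abs(w) for row in matrix for w in row)
    n_prune = int(len(flat) * sparsity)
    if n_prune == 0:
        return [row[:] for row in matrix]
    threshold = flat[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in matrix]


W = [
    [0.80, -0.05, 0.30],
    [0.01, -0.90, 0.20],
    [-0.40, 0.02, 0.60],
]
pruned = magnitude_prune(W, sparsity=0.5)
zeros = sum(1 for row in pruned for w in row if w == 0.0)

print(pruned)
print(f"{zeros} of 9 weights removed")
```

Zeroed weights only save memory and compute if the result is stored in a sparse format or if whole rows, columns, or attention heads are removed, so the pruning pattern matters as much as the pruning ratio.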

Matrix decomposition

Matrix decomposition is another effective technique for compressing neural networks. The idea is to break down (or “decompose”) large matrices in the network into smaller, simpler ones. Instead of storing an entire matrix, we store two or more smaller matrices that, when multiplied together, produce a result that is the same as or very close to the original. This allows us to replace a large matrix with smaller ones with little change to the model’s behavior. The method isn’t flawless: often the decomposed matrices can’t perfectly replicate the original, resulting in a small approximation error. Still, the trade-off in terms of efficiency is often worth it.
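The simplest case is replacing a matrix with the product of one column vector and one row vector (a rank-1 approximation). The sketch below finds such a pair with power iteration, chosen here only because it fits in a few lines; real pipelines typically use SVD or more elaborate factorizations. The matrix is made up and is deliberately almost rank-1.

```python
import math


def matvec(a, x):
    return [sum(r * v for r, v in zip(row, x)) for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def rank1_factors(a, iters=100):
    """Find u, v such that outer(u, v) approximates a (power iteration)."""
    at = transpose(a)
    v = [1.0] * len(a[0])
    for _ in range(iters):
        v = matvec(at, matvec(a, v))
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    u = matvec(a, v)  # u absorbs the scale of the matrix
    return u, v


def outer(u, v):
    return [[ui * vj for vj in v] for ui in u]


def frobenius_error(a, b):
    return math.sqrt(sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)))


W = [
    [1.1, 0.5, 2.0, 1.0],   # the 1.1 breaks the otherwise exact rank-1 pattern
    [2.0, 1.0, 4.0, 2.0],
    [3.0, 1.5, 6.0, 3.0],
]
u, v = rank1_factors(W)
error = frobenius_error(W, outer(u, v))
stored_full = len(W) * len(W[0])    # 12 numbers
stored_factored = len(u) + len(v)   # 7 numbers

print(f"stored numbers: {stored_full} -> {stored_factored}, error = {error:.4f}")
```

For an m x n matrix approximated at rank r, storage falls from m·n numbers to r·(m + n), and the approximation error is the price paid for that saving.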

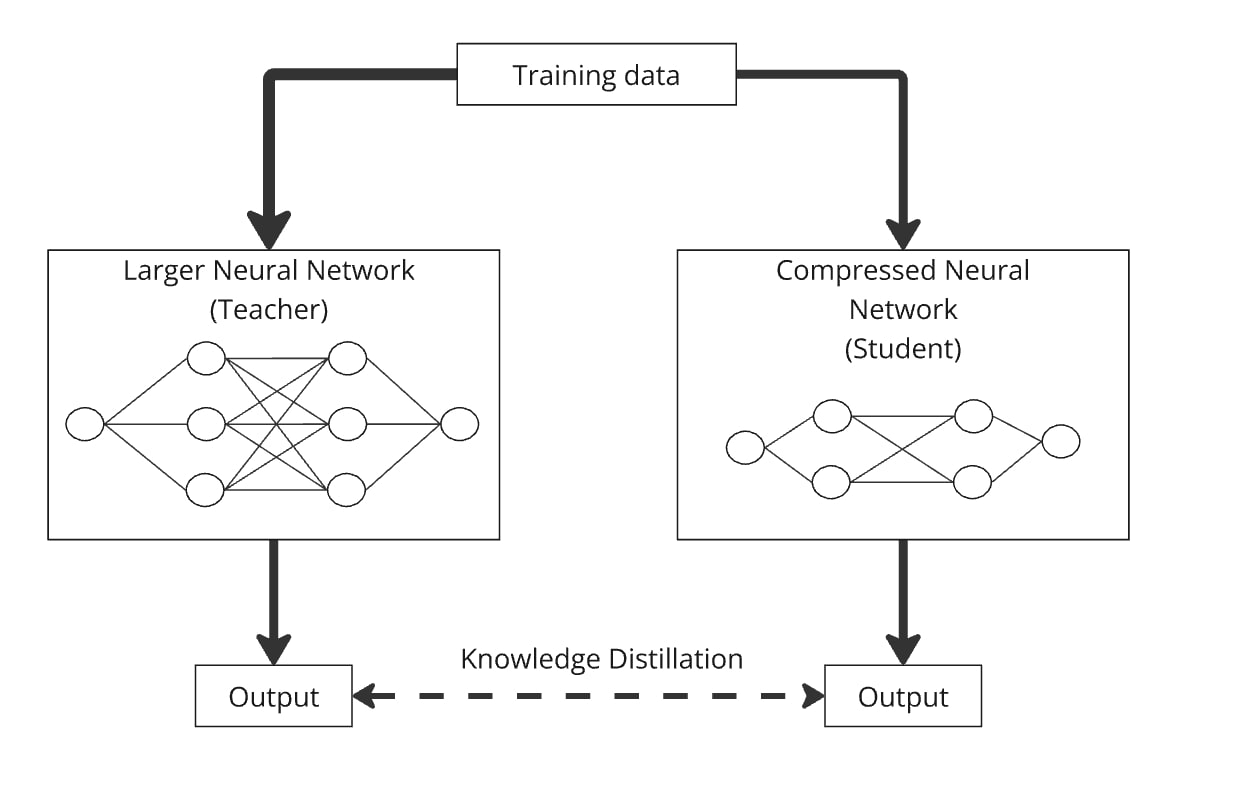

Knowledge distillation

Knowledge distillation, introduced by Hinton et al. in 2015, is a simple yet effective method for creating a smaller, more efficient model (the “student model”) by transferring knowledge from a pre-trained, larger model (the “teacher model”).

Using knowledge distillation, an arbitrarily designed smaller language model can be trained to mimic the behavior of a larger model. The process works by feeding both models the same data, and the smaller one learns to produce similar outputs to those of the larger model. Essentially, the student model is distilled with the knowledge of the teacher model, allowing it to perform similarly but with far fewer parameters.
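The “learns to produce similar outputs” step is usually expressed as a loss between the two models’ output distributions. The sketch below follows the temperature-softened formulation from Hinton et al.: a KL divergence scaled by the squared temperature. The logits are made-up numbers, and a real training loop would add an optimizer and typically a second loss term on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)                       # subtract max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]          # made-up logits over three classes
close_student = [3.5, 1.2, 0.4]    # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]      # prefers a different class

print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
print(distillation_loss(teacher, teacher))
```

The loss is zero when the student reproduces the teacher’s distribution exactly and grows as the two diverge, which gives the student a richer training signal than the hard labels alone.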

One notable example is DistilBERT (Sanh et al. 2019), which reduced BERT’s parameter count by 40% while retaining 97% of its performance and running about 60% faster (71% faster in the authors’ on-device test).

Distillation can be easily combined with quantization, pruning, and matrix decomposition, where the teacher model is the original version and the student is the compressed one. These combinations help refine the accuracy of the compressed model. For example, you could compress GPT-2 using matrix decomposition and then apply knowledge distillation to train the compressed model to mimic the original GPT-2.

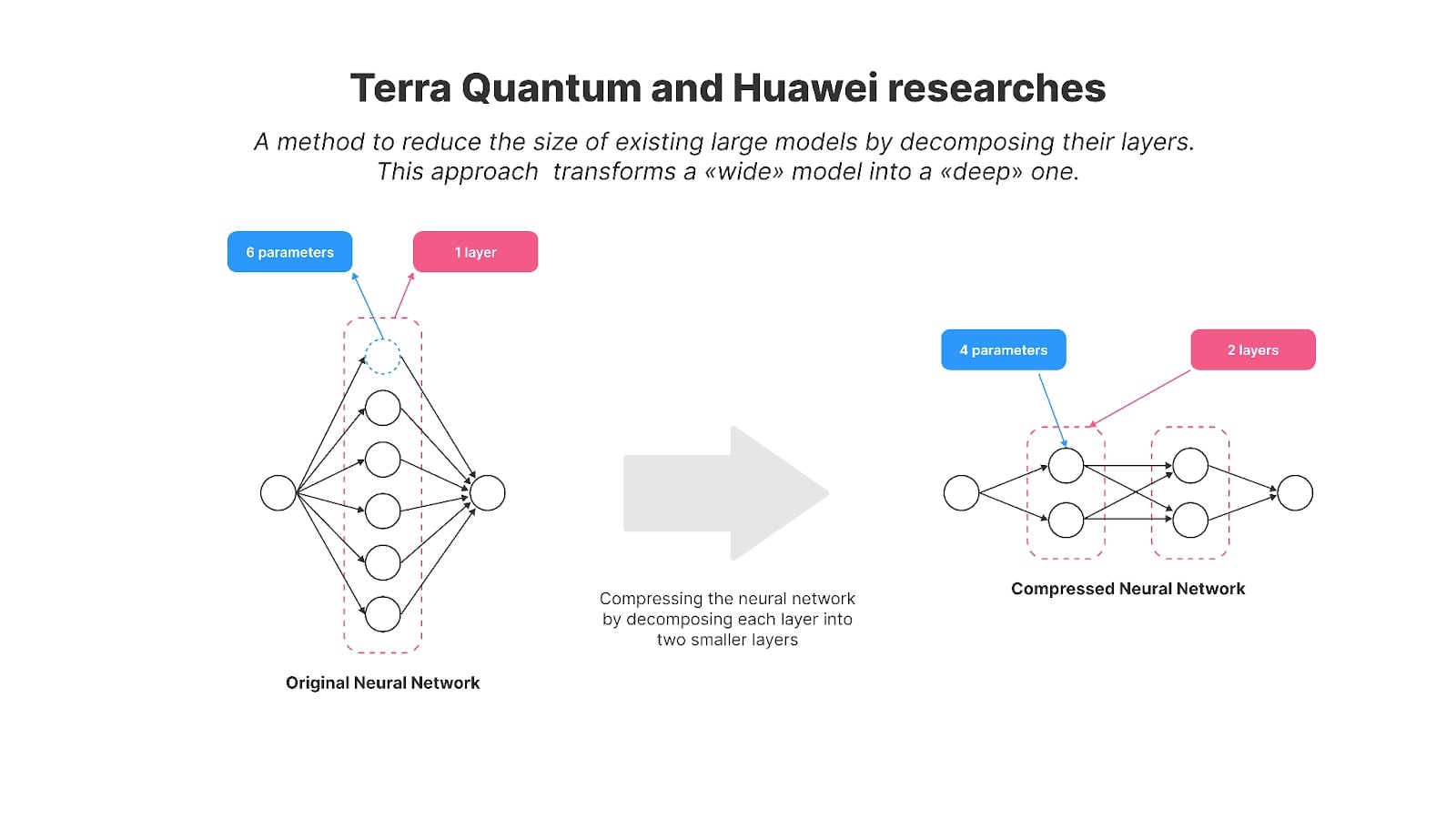

How to compress existing models for on-device AI use cases

A few years ago, Huawei also focused on enabling on-device AI models and published research on compressing GPT-2. The researchers used a matrix decomposition method to reduce the size of the popular open-source GPT-2 model for more efficient on-device use.

Specifically, they used a technique called Kronecker decomposition, which is the basis for their paper titled “Kronecker Decomposition for GPT Compression.” As a result, GPT-2’s parameters were reduced from 125 million to 81 million.
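For readers unfamiliar with the operation, here is a small sketch of the Kronecker product itself and of why it saves parameters. The factor shapes in the parameter count (32x32 and 24x24) are hypothetical and chosen only because they multiply out to 768x768; they are not taken from the paper.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [
        [a_ij * b_kl for a_ij in a_row for b_kl in b_row]
        for a_row in a
        for b_row in b
    ]


def kron_param_count(shape_a, shape_b):
    """Numbers stored when keeping only the two factors."""
    return shape_a[0] * shape_a[1] + shape_b[0] * shape_b[1]


A = [[1, 2],
     [3, 4]]
B = [[0, 1],
     [1, 0]]
K = kron(A, B)  # a 4 x 4 matrix built from two 2 x 2 factors
for row in K:
    print(row)

# Hypothetical factor shapes for a 768 x 768 weight matrix.
full = 768 * 768
factored = kron_param_count((32, 32), (24, 24))
print(f"{full:,} weights vs {factored:,} in the two factors")
```

A trained weight matrix is generally not an exact Kronecker product, so the factors only approximate it. That is why the decomposition is followed by a step that recovers accuracy.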

To recover the model’s performance after compression, the authors employed knowledge distillation. The compressed version — dubbed KnGPT-2 — learned to mimic the original GPT-2’s behavior. They trained this distilled model using just 10% of the original dataset used to train GPT-2. In the end, the model size decreased by 35%, with a relatively small loss in performance.

This year, my colleagues and I published research on matrix decomposition methods in which we compressed GPT-2 from 125 million to 81 million parameters. We named the resulting model TQCompressedGPT-2. The study further improves the Kronecker decomposition method, and with this improvement we needed just 3.1% of the original dataset during the knowledge distillation phase. In other words, distillation used more than 30 times less training data, and correspondingly less training time, than a run over the full dataset. If the approach carries over to larger models, developers looking to deploy models like LLaMA3 on smartphones could see similar savings when producing a compressed version.

The novelty of our work lies in a few key areas:

- Before applying compression, we introduced a new method: permutation of weight matrices. By rearranging the rows and columns of layer matrices before decomposition, we achieved higher accuracy in the compressed model.

- We applied compression iteratively, reducing the model layers one by one.
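The permutation step can be illustrated with a short sketch. Reordering the rows and columns of a weight matrix is lossless, because the original order can always be restored with the inverse permutations, so any accuracy change comes from how well the reordered matrix decomposes. The random permutations below are placeholders; how TQCompressor actually chooses its permutations is not shown here.

```python
import random


def permute(matrix, row_perm, col_perm):
    """result[i][j] = matrix[row_perm[i]][col_perm[j]]"""
    return [[matrix[r][c] for c in col_perm] for r in row_perm]


def inverse(perm):
    """Permutation that undoes `perm`."""
    inv = [0] * len(perm)
    for new_pos, old_pos in enumerate(perm):
        inv[old_pos] = new_pos
    return inv


rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]  # made-up weights

row_perm = list(range(3))
col_perm = list(range(4))
rng.shuffle(row_perm)  # placeholder for a learned or searched permutation
rng.shuffle(col_perm)

W_permuted = permute(W, row_perm, col_perm)   # this is what would be decomposed
W_restored = permute(W_permuted, inverse(row_perm), inverse(col_perm))

print(W_restored == W)
```

In a network, the same permutations also have to be applied to the neighboring layers’ inputs or outputs so that the model as a whole computes the same function.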

We’ve made our model and algorithm code open-source, allowing for further research and development.

Both studies bring us closer to the concept Meta introduced with their approach to Mobile LLMs. They demonstrate methods for transforming existing wide models into more compact, deeper versions using matrix decomposition techniques and restoring the compressed model’s performance with knowledge distillation.

Top-tier models like LLaMA, Mistral, and Qwen, which are significantly larger than 1 billion parameters, are designed for powerful cloud servers, not smartphones. The research conducted by Huawei and our team offers valuable techniques for adapting these large models for mobile use, aligning with Meta’s vision for the future of on-device AI.

Compressing AI models is more than a technical challenge — it’s a crucial step toward making advanced technology accessible to billions. As models grow more complex, the ability to run them efficiently on everyday devices like smartphones becomes essential. This isn’t just about saving resources; it’s about embedding AI into our daily lives in a sustainable way.

The industry’s progress in addressing this challenge is significant. Advances from Huawei and TQ in the compression of AI models are pushing AI toward a future where it can run seamlessly on smaller devices without sacrificing much performance. These are critical steps toward sustainably adapting AI to real-world constraints and making it more accessible to everyone, laying a solid foundation for further research in this vital area of AI’s impact on humanity.


All Rights Reserved. Copyright , Central Coast Communications, Inc.