What's the Deal With Data Engineers Anyway?

Imagine you’re building a house. You have the structure (walls and a roof), but what’s the first thing you need to make it usable? Correct! Utilities: water, electricity, gas. Without them, the house is just an empty shell. Building and maintaining the plumbing to ensure everything flows smoothly is essential to making a house functional. This is the perfect analogy for the role of a data engineer.

I have some experience in data engineering through my years as a data analyst. Continuing the analogy, if data engineers are responsible for building and maintaining the utilities, data analysts are like the people who decide how to use them effectively: setting up smart thermostats, monitoring energy consumption, or planning renovations. Throughout my journey, I collaborated with data engineers and gained a high-level understanding of their responsibilities. Without their work, analysts like me would be stuck waiting for “water” (data) to arrive. Thanks to data engineers, I always had access to structured and up-to-date data.

Now, I’d like to take you through a basic learning project to dive into this domain a little deeper.

Disclaimer: In some organizations, especially smaller ones, the roles of data engineers and analysts may overlap.

Imagine you’re a junior data engineer working for an e-scooter service company. One of your tasks is to collect data from external sources, such as weather and flight information. You might wonder, “Why is this data even necessary?” Here’s why:

- When it rains, the demand for e-scooters drops significantly.

- Tourists often prefer using e-scooters to explore cities.

By analyzing patterns like these, weather and flight data can help predict usage trends and optimize the distribution of e-scooters. For example, if rain is forecasted in one area, or a large wave of tourists is expected due to flight arrivals, the company can proactively manage scooter locations to meet changing demand.

Your goal is to ensure this data is well-organized, updated daily, and easily accessible from a database. Let’s dive into the process of extracting, transforming, and loading this data.

To extract external data (or “water”), there are two common approaches:

- Pump from a river: Web scraping.

- Connect to a central water supply: APIs.

In this project, I used both methods.

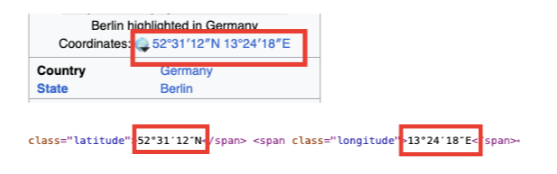

Web Scraping

Web scraping involves extracting data directly from web pages. I scraped Wikipedia for city information, such as population and coordinates, using Python libraries like requests and BeautifulSoup. Here’s how it works:

- Requests: Fetches the HTML content of a web page.

- BeautifulSoup: Parses the HTML to extract specific elements, like population or latitude.
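To make the two roles above concrete, here is a minimal sketch of the fetch-then-parse pattern. It is not the project's actual code: the helper names `fetch_html` and `extract_infobox_value`, the User-Agent string, and the tiny `SAMPLE_HTML` table are all hypothetical, and the sample assumes the value sits in a table row with a `th` label and a `td` value. Real Wikipedia pages are messier and may need extra cleanup.

```python
from typing import Optional

import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Download a page with requests and return its HTML as text."""
    # The User-Agent value is a placeholder; identify your own script here.
    headers = {"User-Agent": "escooter-learning-project/0.1"}
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return response.text


def extract_infobox_value(html: str, label: str) -> Optional[str]:
    """Return the text of the first table cell whose row header contains `label`."""
    soup = BeautifulSoup(html, "html.parser")
    for row in soup.find_all("tr"):
        header = row.find("th")
        cell = row.find("td")
        if header and cell and label.lower() in header.get_text().lower():
            return cell.get_text(strip=True)
    return None  # label not found on this page


# Made-up HTML standing in for a downloaded page, so the parsing step
# can be tried without a network connection.
SAMPLE_HTML = """
<table class="infobox">
  <tr><th>Country</th><td>Exampleland</td></tr>
  <tr><th>Population</th><td>1,234,567</td></tr>
</table>
"""

population = extract_infobox_value(SAMPLE_HTML, "Population")
print(population)
```

In a real run, the string returned by `fetch_html` would be passed to `extract_infobox_value` in place of `SAMPLE_HTML`. Keeping the download and the parsing in separate functions makes the parsing logic easy to check against saved pages.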

HTML (Hypertext Markup Language) is the standard language for structuring web pages. Data like population is often located within HTML elements like