Understanding Parallel Programming: A Guide for Beginners

I am Boris Dobretsov, an experienced iOS developer. Although I don't hold a traditional degree in this field, I have found some success in my career and have established myself as a mentor and a curator of programming courses. With this article and a forthcoming series on parallel programming, I hope to show readers that programming, or at least its basics, is not as difficult to understand as it may seem. My goal is to encourage more people interested in programming to explore this field, even without access to university education. We won't cover every aspect of parallel programming, but we'll discuss architecture models and threads, as well as other important concepts such as RunLoop.

Let’s get started!

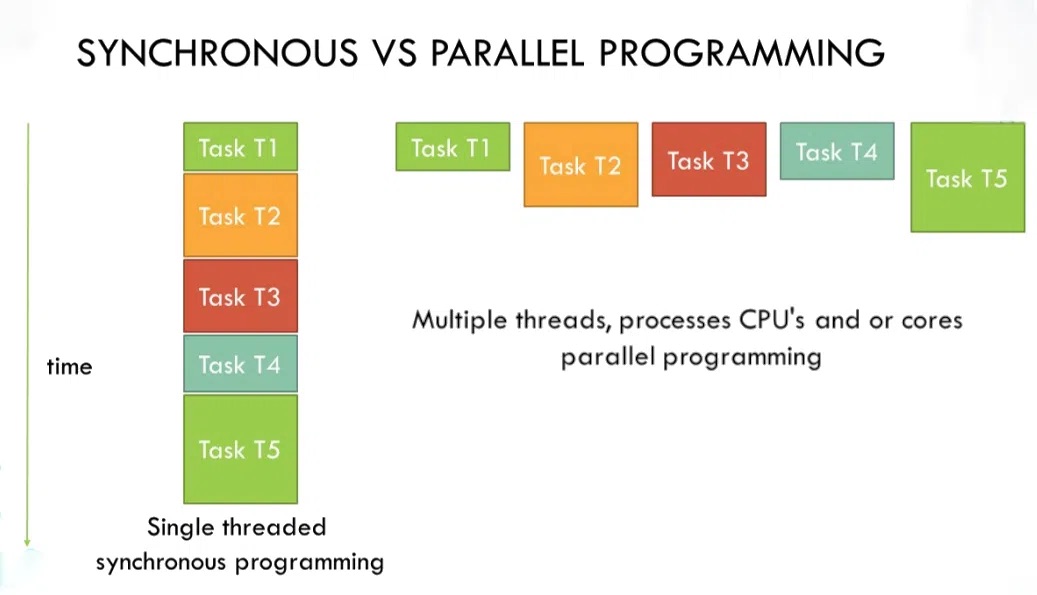

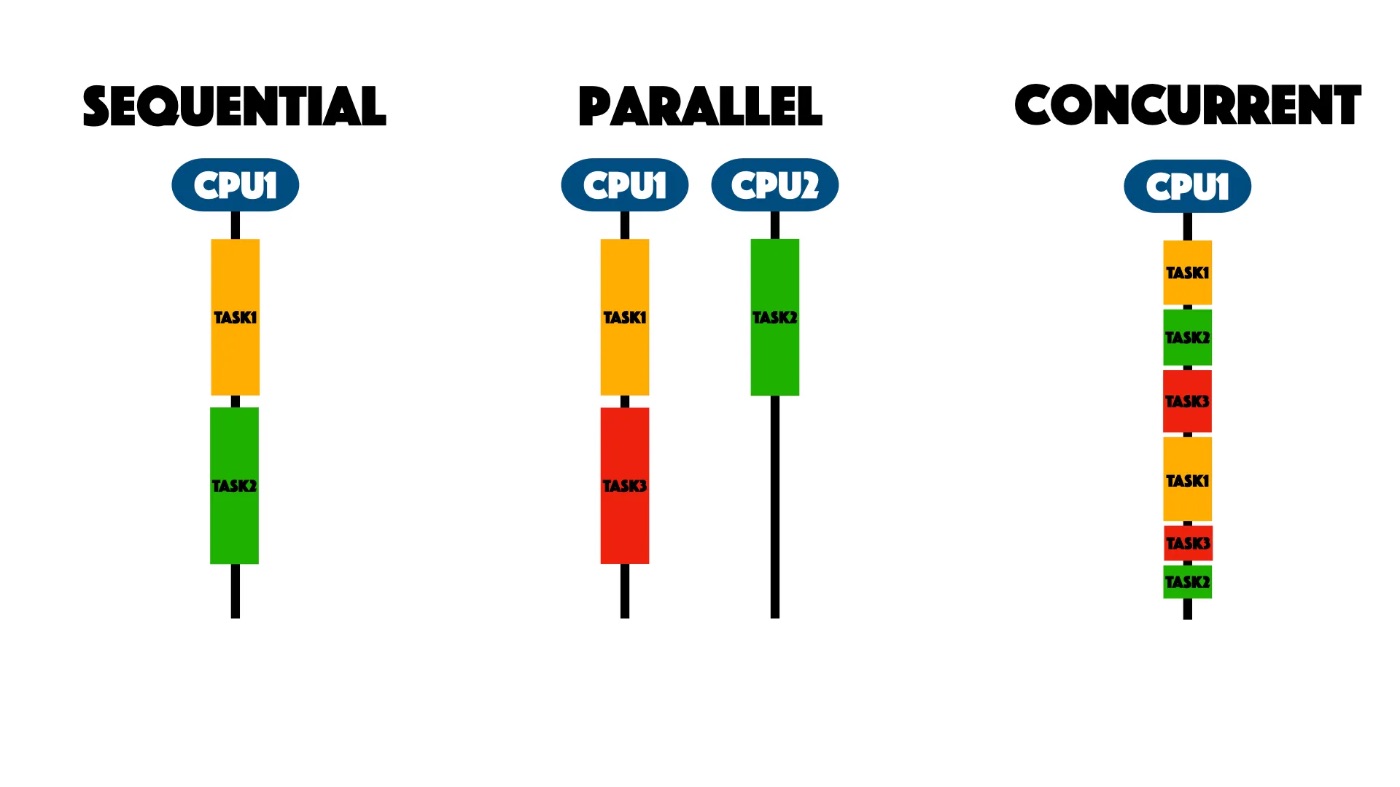

What is Parallel Programming?

Parallel programming is a method of organising simultaneous computations within a program. In the traditional sequential model, code is executed step by step, and at any given moment only one action can be processed.

Parallel programming makes it possible to execute many tasks concurrently by dividing the workload among several processors or cores, which can greatly improve efficiency.

For example, you can load data from the internet and scroll through a table at the same time. With a sequential approach, the interface would freeze and stop responding to user input until the data had finished loading.

Before going deeper into parallel processing, let's look at what an application consists of and how it works. Regardless of the programming language or operating system you're writing an application for, it will always be executed by a processor. The processor can recognize only the simplest commands, such as adding or comparing two values in memory. The work of a computer is built from a sequence of such commands.

The earliest computers could do little else: they didn’t have an operating system in the modern sense. Programming was simply the translation of mathematical actions into basic operations that the processor could perform. Essentially, the computer was just a large, fast calculator. Fittingly, the word “computer” comes from the verb “to compute”, which means “to calculate”.

As time went on, computers became faster, and they were taught not only to perform calculations but also to display data on a monitor, print it out, and read characters from a keyboard. Many programs emerged, and users needed to run them one after another, switch between them, and multitask. The original approach, where a computer followed a strict sequential set of instructions, became outdated.

This is when operating systems (OS) came into the limelight. They were regular programs, similar to the earliest software products. When an OS is launched, it loads into the device's memory, and the processor begins executing it. The OS can then run other programs within its context, pass their code to the processor, and manage their execution.

Initially, the OS only helped to choose one program to run at a given time. To run another program, the current one had to finish first. While this may seem inconvenient now, it was a breakthrough at the time.

As progress continued and computing capabilities grew, simple operations (like playing music) came to be performed very quickly. This led to the development of operating systems that could alternate the execution of multiple programs, allowing users to edit texts while listening to music and periodically checking their email. However, a single-core processor still could not physically perform two commands simultaneously.

But processors became very fast: one operation takes an incredibly short amount of time. For example, each core of a laptop's dual-core 2 GHz processor goes through 2 billion clock cycles per second, which is on the order of billions of simple operations. This number is approximate, as many factors affect actual performance, but it gives an idea of the device's speed.

This is also when pseudo-multitasking became widespread: the operating system alternates between executing commands from multiple programs on a single processor. Because the switching happens extremely fast, it creates the illusion of simultaneous execution. Modern multi-core processors can actually perform several commands at the same time, one per core, although the operating system still alternates between tasks whenever there are more of them than cores.

The next key concept is the “process”. This is a running program within the operating system. In addition to a sequence of commands, a process contains many other resources: data in memory, input/output device descriptors, files on disk, and more. An operating system runs a vast number of processes. Some handle system functions, work with network devices and files, and keep track of the current time. Other processes represent programs launched by the user, such as a browser, media player, Skype, or text editor.

Each process is allocated its own section of RAM, where it can store data and variables, and, when granted exclusive rights, it can write to a file. Other processes have no access to these resources: the variables of a process are accessible only to that process, and a file that one process has opened for exclusive writing is blocked for the others.

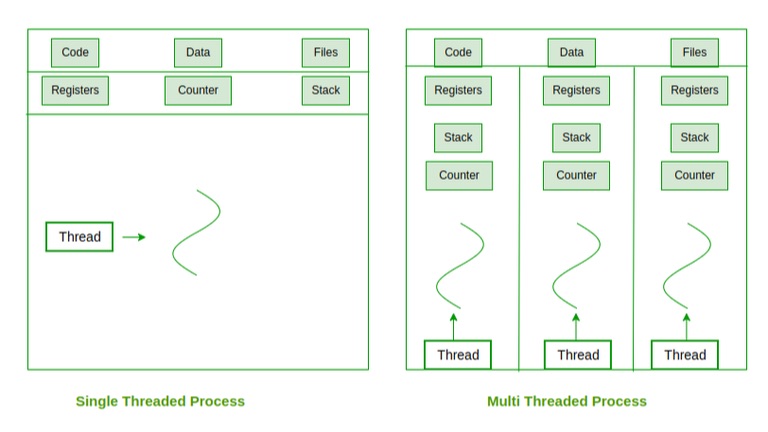

However, the process is not the smallest unit that can be executed. Processes consist of threads.

A thread, or thread of execution, is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system.

For iOS application developers, knowledge about the processor, processes, and the operating system has practical value. It reveals the bigger picture of development and offers a deeper understanding of how programs and their environment work, which helps developers write higher-quality, more efficient code. However, creating and managing threads in code is a separate topic.
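Although thread management deserves its own discussion, a minimal sketch can show what a second thread inside one process looks like in code. It assumes Swift with Foundation; `ThreadLog`, `runThreadDemo`, and the thread name `demo.worker` are hypothetical names invented for this illustration, not part of any framework.

```swift
import Foundation

// Hypothetical helper for this sketch: a small log that is safe to use from several threads.
final class ThreadLog: @unchecked Sendable {
    private let lock = NSLock()
    private var lines: [String] = []

    func add(_ line: String) {
        lock.lock()
        lines.append(line)
        lock.unlock()
    }

    var snapshot: [String] {
        lock.lock()
        defer { lock.unlock() }
        return lines
    }
}

func runThreadDemo() -> [String] {
    let log = ThreadLog()
    let workerFinished = DispatchSemaphore(value: 0)

    // A second thread of execution inside the same process.
    let worker = Thread {
        log.add("worker runs on main thread: \(Thread.isMainThread)")
        workerFinished.signal()
    }
    worker.name = "demo.worker"
    worker.start()

    // Block the calling thread until the worker has done its job.
    workerFinished.wait()
    log.add("worker finished")
    return log.snapshot
}

let threadLog = runThreadDemo()
print(threadLog)
```

Both threads belong to the same process and therefore see the same `log` object, which is why the sketch protects it with a lock.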

Architecture models

There are several ways to organize code:

Single-threaded — Your program contains one thread where all tasks are placed and executed sequentially.

Multi-threaded — Your program contains multiple threads, with tasks divided between them and executed in parallel (simultaneously).

Synchronous — Each task in a thread is executed from start to finish, and no other task can begin until the current one is fully completed.

Asynchronous — A task in a thread can be paused during execution. After pausing a task, another one can be started, and the previous one can be resumed later. Additionally, multiple tasks can be paused and resumed multiple times.

A task is a sequence of commands that must be executed together to produce a specific result. It can be a single line of code that adds two variables, or a large block of code that reads data from disk, modifies it, and sends it to a server.

These ways of organizing code can be combined to form the following architecture models:

Synchronous single-threaded

Asynchronous single-threaded

Synchronous multi-threaded

Asynchronous multi-threaded

When it comes to architectures involving concurrent (competing) code, the following can be considered:

Multi-threaded model — The program is divided into threads, but tasks within each thread are executed synchronously.

Asynchronous model — The program runs in a single thread, but tasks are executed asynchronously.

Concurrent (competing) code implies that tasks “compete” with each other for the right to be executed. This is why we do not consider the synchronous single-threaded architecture here, as there is no such competition in that model.

You might wonder why the multi-threaded model is also considered concurrent (competing). With the asynchronous model, it's clear: one task can be paused, and another can be started in its place, so we see the competition between them. In the multi-threaded model, there seems to be no such competition, as tasks are executed simultaneously.

However, threads share common resources, such as variables or files that tasks from different threads may write to. These tasks might also need access to the device's camera, which cannot be accessed by multiple threads simultaneously. As you can see, in the multi-threaded model, the competition between tasks is not for the right to execute but for access to unique resources.
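The competition for a shared resource can be sketched with a counter that several threads increment. This is an illustrative example, assuming Swift with Foundation; `Counter` and `runCounterDemo` are hypothetical names, and `NSLock` is only one of several ways to guard a shared value.

```swift
import Foundation

// Hypothetical shared resource: a counter that several threads increment.
final class Counter: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0

    // Only one thread at a time can hold the lock and change the value.
    func increment() {
        lock.lock()
        value += 1
        lock.unlock()
    }

    var current: Int {
        lock.lock()
        defer { lock.unlock() }
        return value
    }
}

func runCounterDemo(threads: Int, incrementsPerThread: Int) -> Int {
    let counter = Counter()
    let finished = DispatchGroup()

    for _ in 0..<threads {
        finished.enter()
        let thread = Thread {
            for _ in 0..<incrementsPerThread {
                counter.increment()
            }
            finished.leave()
        }
        thread.start()
    }

    // Wait until every thread has finished its share of the work.
    finished.wait()
    return counter.current
}

let total = runCounterDemo(threads: 4, incrementsPerThread: 10_000)
print(total) // 40000
```

Without the lock, two threads could read the same old value and write back the same new one, so some increments would be lost and the total would vary from run to run.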

In iOS applications, an asynchronous multi-threaded model is used. Of course, how you write your code is up to you: you can write code that runs synchronously in a single thread. However, many system frameworks (software platforms) operate at least asynchronously, and you will have to interact with them. Additionally, if you do not divide your program into threads, the application will become frustratingly slow and will often ignore user input, which users experience as lag.

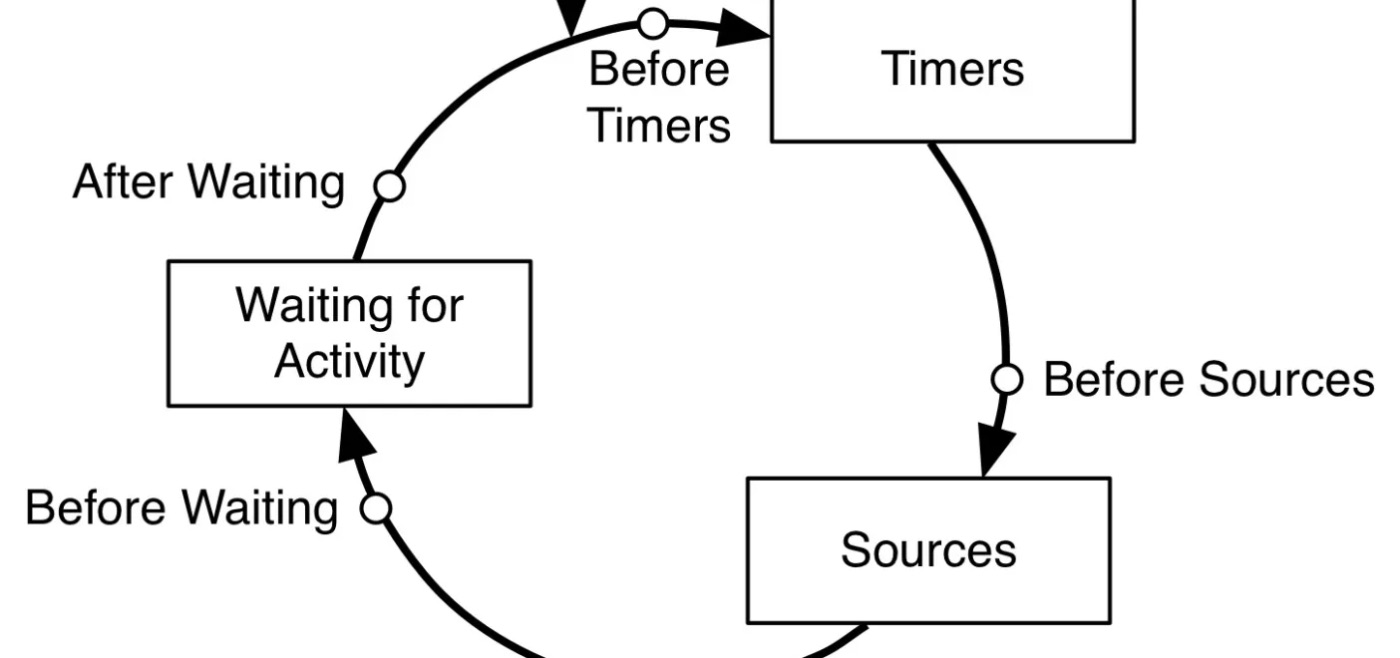

RunLoop

It is important to understand what RunLoop is and when you can rely on it. A RunLoop does not simply run your code from start to finish; instead, it keeps a thread waiting for user commands or other events. RunLoop is an essential part of the infrastructure associated with threads. It is an event-processing loop that is used to schedule work and coordinate incoming events. Its purpose is to keep the thread busy when there is work and to put it to sleep when there is none.

Have you ever wondered what a running application is doing when it's not actively processing anything? Is it idle? How does it know when to act—whether a user taps the screen, a scheduled event occurs, or a server response arrives? The answer is simple: it waits.

RunLoop is an infinite cycle that waits for events. But where do these events come from?

Ports: Data can come from external sources via ports, such as user taps on the screen, system sensors (e.g., location data), internet data, or messages from other applications.

Custom sources: Programmatically created sources from another thread.

Selectors: calling a Cocoa selector from another thread.

Timers: Delayed events created by the thread for itself. For example, if you set a 10-second timer, it doesn’t tick continuously; instead, it resides in the event source. The RunLoop periodically checks if it’s time to fire the event. The timer can be either one-time or recurring.
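The timer source from the list above can be tried out with a short sketch. It assumes Swift with Foundation; `FireCounter` and `runTimerDemo` are hypothetical names, and the 0.1-second interval and half-second run time are arbitrary values chosen for the illustration.

```swift
import Foundation

// Hypothetical box for the sketch; it is only touched on the thread that runs the loop.
final class FireCounter: @unchecked Sendable {
    var count = 0
}

func runTimerDemo() -> Int {
    let fired = FireCounter()

    // The timer is registered with the current thread's RunLoop as an event source.
    let timer = Timer.scheduledTimer(withTimeInterval: 0.1, repeats: true) { _ in
        fired.count += 1
    }

    // Nothing fires until the RunLoop runs; let it process events for about half a second.
    RunLoop.current.run(until: Date().addingTimeInterval(0.55))

    // A repeating timer stays in the RunLoop until it is invalidated.
    timer.invalidate()
    return fired.count
}

let fireCount = runTimerDemo()
print("Timer fired \(fireCount) times")
```

The exact number of fires depends on scheduling, but the point is that the closure only runs while the RunLoop is processing events. In an app, the main thread's RunLoop is already running, so the explicit `run(until:)` call is needed only in a standalone script like this one.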

In most situations, you don't need to worry about RunLoop, how it works, or how to set it up manually. The main thread, which handles the user interface in every application, comes with a RunLoop configured in advance. That is why many developers build applications for years without even knowing that it exists. Still, I believe it is important to know how RunLoop works; without this knowledge, some aspects of your application's logic will remain unclear. Because it is so fundamental, RunLoop affects many functions of your app, such as timers, handling internet requests, and user input. Much asynchronous code relies on RunLoop, and even memory management is connected to the event loop.

I will demonstrate why understanding RunLoop matters with an example of a memory overflow issue in a loop, and I will provide the solution to this problem.

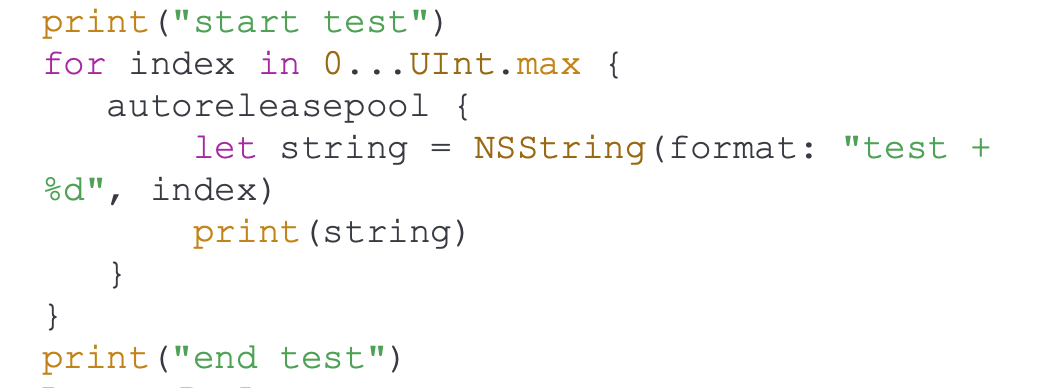

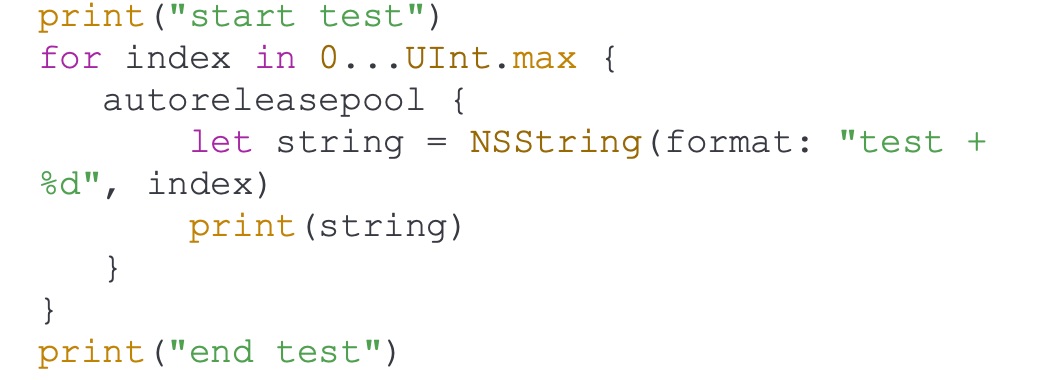

The following code presents a potential memory overflow issue. Try adding it to an application, run it, and observe how the memory consumption increases.
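A minimal sketch of such a loop, assuming Swift on an Apple platform. The function name `logStrings` and the iteration count are illustrative, and how quickly memory grows depends on the platform and the Foundation version.

```swift
import Foundation

// Hypothetical helper: creates `count` NSString objects in a tight loop and prints each one.
func logStrings(count: Int) -> Int {
    var printed = 0
    for i in 0..<count {
        // A new NSString is created on every iteration.
        let string = NSString(format: "Iteration %ld", i)
        print(string)
        printed += 1
    }
    return printed
}

// Raise the count substantially in a real app to watch the memory graph in Xcode.
let printedCount = logStrings(count: 1_000)
```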

At first glance, the code seems too simple to leak memory. We create an NSString in the loop and output it to the console. At the end of each loop iteration, the NSString should be removed from memory, since the only variable referencing it disappears.

NSString is an Objective-C type and follows the Automatic Reference Counting (ARC) rules of Objective-C. According to these rules, if the name of the method that returns an object does not begin with alloc, new, init, copy, or mutableCopy, the caller does not own the returned object, and it is typically placed in a special memory management container called the autoreleasepool.

The autoreleasepool creates a scope, at the end of which the reference count for the objects placed in the pool is reduced. By default, the application has a global autoreleasepool, which is cleared at the end of each event loop iteration. However, in this case, the current event loop iteration cannot complete while our loop is still running, so the memory doesn’t get released as expected during the loop execution.

To avoid this problem, you can create your own autoreleasepool that will be cleared after each iteration of the loop, ensuring that memory is released more frequently.

Here’s the corrected version of the code:
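A minimal sketch of that fix, again assuming Swift on an Apple platform. The name `logStringsWithPool` is illustrative, and the small fallback at the top is an assumption added only so that the sketch also compiles where `autoreleasepool` is unavailable.

```swift
import Foundation

#if !canImport(ObjectiveC)
// Assumption for portability: outside Apple platforms there is no autorelease pool,
// so this stand-in simply runs the body.
func autoreleasepool<Result>(_ body: () throws -> Result) rethrows -> Result {
    try body()
}
#endif

// Hypothetical helper: the same loop, with each iteration wrapped in its own pool.
func logStringsWithPool(count: Int) -> Int {
    var printed = 0
    for i in 0..<count {
        autoreleasepool {
            let string = NSString(format: "Iteration %ld", i)
            print(string)
            printed += 1
        } // Objects autoreleased during this iteration are released here.
    }
    return printed
}

let pooledCount = logStringsWithPool(count: 1_000)
```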

By wrapping each iteration of the loop inside an autoreleasepool, you force the memory used by NSString to be released at the end of each iteration, rather than waiting for the entire loop to complete or for the global autoreleasepool to be cleared at the end of the event loop.

Conclusion

Modern software development is hard to imagine without parallel programming, especially now that almost every task demands high performance and responsiveness. In iOS development, there are many useful tools and frameworks available to manage parallel programming and multithreading. Among them are Grand Central Dispatch (GCD) and Operation Queues, which simplify the handling of concurrent tasks and enable developers to build smoother, more responsive applications.

In future articles, we will explore these iOS-specific tools in greater detail to help you master parallel programming in this environment.

All Rights Reserved. Copyright , Central Coast Communications, Inc.