
Textbooks Are All You Need: Additional Examples for Section 3

:::info Authors:

(1) Suriya Gunasekar, Microsoft Research;

(2) Yi Zhang, Microsoft Research;

(3) Jyoti Aneja, Microsoft Research;

(4) Caio César Teodoro Mendes, Microsoft Research;

(5) Allie Del Giorno, Microsoft Research;

(6) Sivakanth Gopi, Microsoft Research;

(7) Mojan Javaheripi, Microsoft Research;

(8) Piero Kauffmann, Microsoft Research;

(9) Gustavo de Rosa, Microsoft Research;

(10) Olli Saarikivi, Microsoft Research;

(11) Adil Salim, Microsoft Research;

(12) Shital Shah, Microsoft Research;

(13) Harkirat Singh Behl, Microsoft Research;

(14) Xin Wang, Microsoft Research;

(15) Sébastien Bubeck, Microsoft Research;

(16) Ronen Eldan, Microsoft Research;

(17) Adam Tauman Kalai, Microsoft Research;

(18) Yin Tat Lee, Microsoft Research;

(19) Yuanzhi Li, Microsoft Research.

:::

Table of Links

- Abstract and 1. Introduction

- 2 Training details and the importance of high-quality data

- 2.1 Filtering of existing code datasets using a transformer-based classifier

- 2.2 Creation of synthetic textbook-quality datasets

- 2.3 Model architecture and training

- 3 Spikes of model capability after finetuning on CodeExercises, 3.1 Finetuning improves the model’s understanding, and 3.2 Finetuning improves the model’s ability to use external libraries

- 4 Evaluation on unconventional problems with LLM grading

- 5 Data pruning for unbiased performance evaluation

- 5.1 N-gram overlap and 5.2 Embedding and syntax-based similarity analysis

- 6 Conclusion and References

- A Additional examples for Section 3

- B Limitation of phi-1

- C Examples for Section 5

In Section 3, we showed through a few examples how finetuned models improve substantially over the base model on tasks that are not featured in the finetuning dataset. Here we provide additional examples and details to further illustrate these improvements.

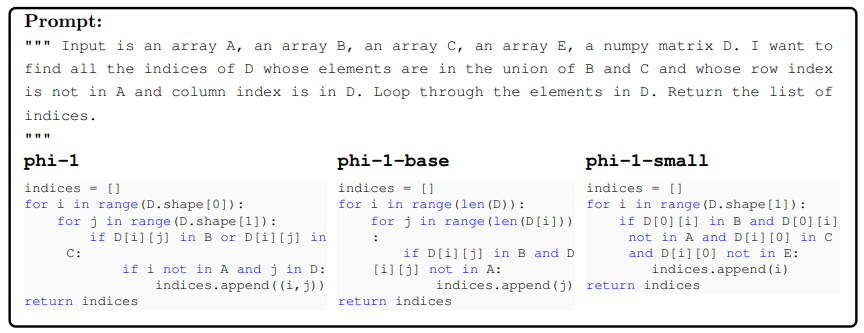

A.1 Finetuning improves the model’s understanding ability

The example below is challenging due to the prevalence of various logical operators such as “and” and “not” that link numerous statements. Prior to finetuning, the model is confused by the logic embedded in the statement. It struggles to differentiate between “elements” and “indices”, and is unsure about which array to use. Conversely, the finetuned model is adept at accurately identifying all relevant arrays and indices, while correctly implementing the logical operators.


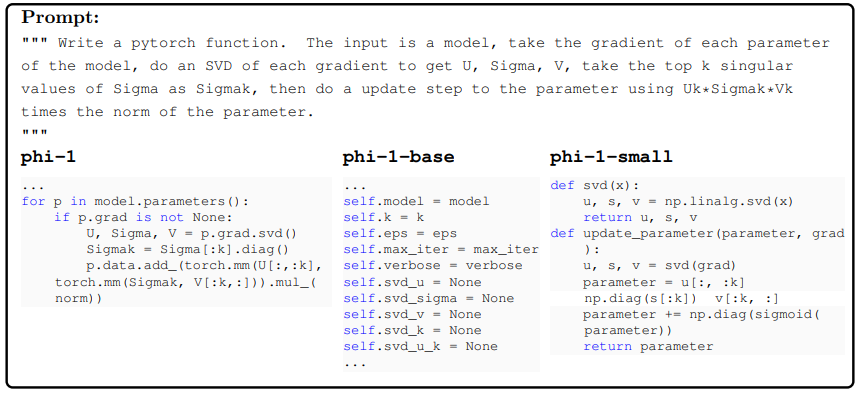
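The elements-versus-indices distinction can be illustrated with a small sketch. This is not the paper's prompt: the task, the function name `matching_indices`, and its parameters are hypothetical, chosen only to show how one condition applies to an element's value while another, negated condition applies to its position.

```python
def matching_indices(values, allowed, skipped_indices):
    """Return every index i such that values[i] is in `allowed`
    and i itself is not in `skipped_indices` (hypothetical task)."""
    allowed = set(allowed)                   # compared against elements
    skipped_indices = set(skipped_indices)   # compared against indices
    return [
        i
        for i, v in enumerate(values)
        if v in allowed and i not in skipped_indices
    ]


values = [7, 3, 7, 9, 3]
result = matching_indices(values, allowed=[3, 7], skipped_indices=[0, 3])
print(result)  # [1, 2, 4]
```

Index 0 holds an allowed value but is skipped by position, while index 3 is rejected on both counts. Mixing up the two membership tests is the kind of confusion described above.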

PyTorch example. Here we ask the model to write a modified gradient update:


The code by phi-1-small reveals gaps in API usage and limitations in understanding, leading to multiple significant missteps. First, it uses np.linalg.svd(x), which is incompatible with PyTorch tensors. Second, it erroneously assigns the SVD result directly to the parameter, deviating from the prompt’s instruction to add it. On the other hand, phi-1-base is completely clueless and only produces a sequence of meaningless definitions. This indicates that improving the model’s logical reasoning ability (even only on simple functions) can greatly enhance the model’s general coding ability.
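The two missteps can be contrasted with a minimal sketch. The prompt itself is not reproduced in this text, so the task here is an assumption: add a rank-k SVD reconstruction of each weight gradient to its parameter. The function name `add_low_rank_gradient` and the parameters `k` and `scale` are hypothetical. The sketch relies on `torch.linalg.svd`, which operates on tensors directly, and on the in-place `add_`, which adds to a parameter rather than overwriting it.

```python
import torch


def add_low_rank_gradient(model: torch.nn.Module, k: int, scale: float = 1.0) -> None:
    """Assumed task: add a rank-k SVD approximation of each 2-D gradient
    to its parameter. Not the paper's prompt or any model's output."""
    with torch.no_grad():  # in-place parameter edits should not be tracked by autograd
        for p in model.parameters():
            if p.grad is None or p.grad.ndim != 2:
                continue  # skip biases and parameters without gradients
            # SVD computed on the tensor itself, with no NumPy round trip
            U, S, Vh = torch.linalg.svd(p.grad, full_matrices=False)
            r = min(k, S.numel())
            low_rank = (U[:, :r] * S[:r]) @ Vh[:r, :]
            p.add_(scale * low_rank)  # add to the parameter, do not assign


torch.manual_seed(0)
layer = torch.nn.Linear(4, 3)
before = layer.weight.detach().clone()
layer(torch.randn(5, 4)).sum().backward()  # populate .grad with a toy loss
add_low_rank_gradient(layer, k=1)
delta = layer.weight.detach() - before     # change applied to the weight
```

With this toy loss the weight gradient has rank one, so the rank-1 reconstruction recovers it and `delta` matches `layer.weight.grad` up to floating-point error.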

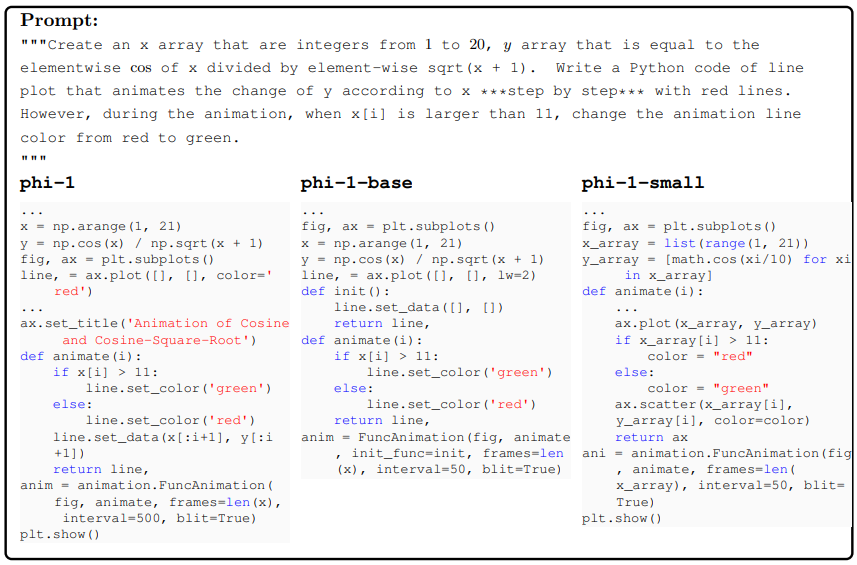

Pyplot example. The final API example is a Pyplot application. The model is generally good with Pyplot, so we design a challenging task that asks the model to implement an animation.


:::info This paper is available on arxiv under CC BY 4.0 DEED license.

:::
