and the distribution of digital products.

State of Phala Q1 2025

- Phala Cloud launched in January 2025. By the end of the quarter, developers had deployed 1,084 Confidential Virtual Machines.

- Phala Network replaced its parachain architecture with an Ethereum rollup called Phala 2.0. The new design allows developers to integrate TEE-based computation directly into Ethereum applications.

- CARV deployed its decentralized AI agents on Phala Cloud using Confidential Virtual Machines. These CVMs provide tamper-proof execution and data confidentiality for privacy-preserving computation across CARV’s AI ecosystem.

- Phala Network partnered with more than a dozen projects in Q1 2025. These included Spore, GoPlus, NOTAI, Fairblock, and Exabits, each of which integrated Phala’s TEE infrastructure for secure agent execution or private AI compute.

- Phala’s benchmark study showed that SP1 zkVMs running inside a TEE on an NVIDIA H200 GPU incurred less than 20% overhead on long-running tasks. This result suggests that Phala’s infrastructure can support privacy-preserving workloads with high computational complexity.

Phala Network (PHA) is an AI coprocessor that enables smart contracts to control and interact with AI Agents. It uses a blockchain-TEE (trusted execution environment) hybrid design to incorporate offchain elements, such as AI, into blockchain-focused applications. This structure lets onchain smart contracts control offchain AI agents (run by Worker Nodes) while preserving security and user privacy. Phala consists of:

- Decentralized Cloud Computing Network: A scalable blockchain backend and TEE-based system for verifiable compute execution.

- TEE (SGX) Framework: Intel’s TEE architecture for secure, verifiable code execution within isolated hardware.

- Decentralized Root of Trust (DeRoT): A smart-contract-governed trust anchor that replaces unrecoverable hardware secrets with a verifiable software-based key management system.

- Key Management Protocol (KMS): A decentralized system that handles key generation, rotation, and sealing across applications, which enables secure migration and dynamic authorization for TEE workloads.

Phala launched as a Polkadot parachain in March 2022, then evolved into an AI coprocessor network in 2023–24. In January 2025, it introduced Phala 2.0, an Ethereum Layer-2 rollup built on OP-Succinct, and Phala Cloud, a confidential compute infrastructure for AI and DeFi applications. By removing dependence on hardware-vendor RoT and introducing a decentralized KMS, Phala aims to improve trustworthiness and recovery guarantees for confidential computing. For a full primer, see our Initiation of Coverage report.


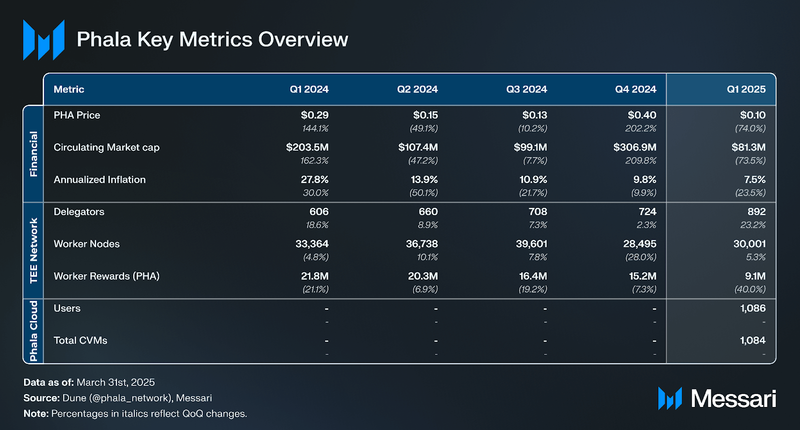

Key Metrics

Phala Cloud

On January 8, 2025, Phala introduced Phala Cloud, a secure, AI-ready platform for deploying privacy-preserving applications. Phala Cloud is designed to facilitate the development and deployment of AI agents and decentralized applications. The platform leverages trusted execution environments (TEEs) to ensure computations (e.g., processing data, running AI models) are performed in a secure, isolated environment. TEEs are secure hardware enclaves that create isolated areas within processors. These enclaves prevent unauthorized access to sensitive data during computation.

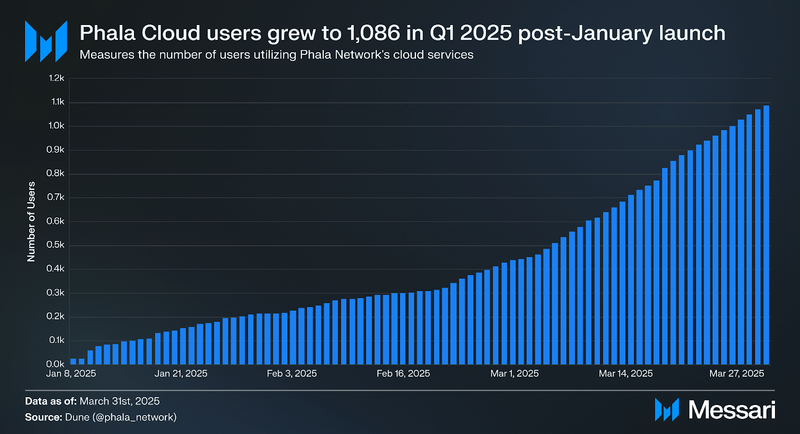

Phala Cloud users represent the number of accounts, developers, or teams deploying applications within its secure, TEE-based infrastructure. These users leverage Phala Cloud’s Confidential Virtual Machines (CVMs) to run programs that require privacy protections, such as certain AI agents and DeFi applications.

By the end of Q1 2025, Phala Cloud’s user base grew to 1,086. Key integrators during Q1 include:

- Primus: Primus uses Phala Cloud's TEE framework to improve the security of its zkTLS (zero-knowledge Transport Layer Security) solution. zkTLS enables verifiable and private communication between web servers and clients. The integration of hardware-backed security with cryptographic proofs creates a dependable base upon which applications can be built and operated.

- CARV: CARV uses Phala Cloud's TEEs to run its AI agents within isolated environments. Phala's tamper-proof execution and data confidentiality, both verifiable through remote attestation, provide infrastructure for secure AI development on CARV's platform.

- Spore.fun / Mind Network: Spore.fun, in partnership with Mind Network, utilizes Phala Cloud's TEEs to facilitate fully homomorphic encryption (FHE)-powered blind voting for its AI-FI governance system. Mind Network's FHE implementation on Phala Cloud guarantees that votes remain encrypted throughout the governance process, which prevents potential voting attacks.

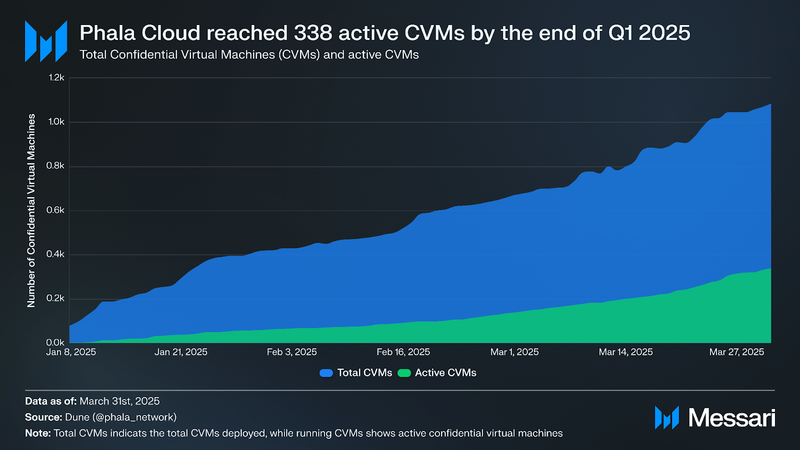

A virtual machine (VM) on Phala Cloud, referred to as a Confidential Virtual Machine (CVM), is a virtual computer operating inside a TEE to safeguard applications. CVMs use hardware-based encryption and attestation to ensure privacy for tasks like AI processing. By the end of Q1 2025, total CVMs reached 1,084.

Active CVMs, totaling 339 at Q1 2025’s close, are CVMs actively performing computations such as AI model training or DeFi transactions. This accounts for 31.3% of total CVMs, showing selective usage since launch. Many CVMs remain inactive, likely for testing or future scaling, encouraged by free credits and the starter plan. This mirrors broader cloud patterns, where users deploy VMs but run only what’s needed.

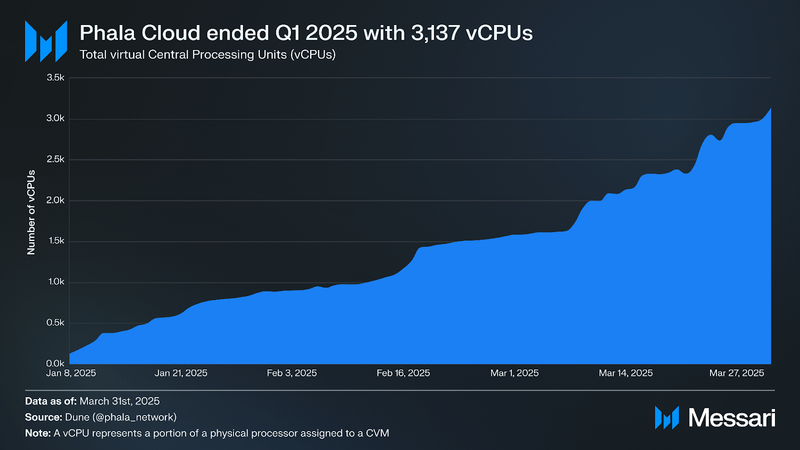

A vCPU (virtual Central Processing Unit) is a virtualized portion of a physical processor assigned to a CVM to handle computations. It represents the processing power given to a CVM to run applications within a TEE, like powering AI agents or DeFi protocols. For example, a CVM on Phala Cloud’s free starter plan gets 1 vCPU, meaning it has one unit of processing capability, while higher-tier CVMs might use multiple vCPUs for more demanding tasks.

The 3,137 vCPUs at the end of Q1 2025 reflect the total processing capacity allocated across all 1,084 Total CVMs. This suggests an average of about 2.9 vCPUs per CVM, though Active CVMs likely use more vCPUs for active tasks (e.g., AI computations). The high vCPU count shows Phala Cloud’s ability to support substantial workloads, with resources distributed to ensure efficient processing for users.
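The utilization figures above follow from simple ratios. As a minimal sketch that uses only the totals reported in this section (the constant and function names are illustrative and not part of any Phala tooling):

```python
# Figures reported for Phala Cloud at the end of Q1 2025.
TOTAL_CVMS = 1_084
ACTIVE_CVMS = 339
TOTAL_VCPUS = 3_137


def share(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole


def average(total: int, count: int) -> float:
    """Return the mean allocation per unit."""
    return total / count


active_share = share(ACTIVE_CVMS, TOTAL_CVMS)
vcpus_per_cvm = average(TOTAL_VCPUS, TOTAL_CVMS)

print(f"Active CVM share: {active_share:.1f}%")       # 31.3%
print(f"Average vCPUs per CVM: {vcpus_per_cvm:.1f}")  # 2.9
```

The average is taken over all CVMs, so it does not show how vCPUs are split between active and inactive machines.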

Phala Cloud Developments

MCP server deployment represents a key addition to Phala Cloud’s capabilities. Since March 2025, the platform has supported running Model Context Protocol (MCP) servers inside CVMs. MCP is an open standard that allows AI models to interact with external data sources and tools in a standardized way. Developers can now deploy Jupyter-based MCP servers to Phala Cloud, which creates secure bridges between AI agents and outside systems like APIs or code environments.

Running MCP servers on Phala Cloud inherits the platform’s confidentiality and attestation guarantees. Public endpoints are exposed for remote interaction, while attestation tools confirm that workloads are running in trusted, tamper-proof enclaves. This positions Phala Cloud as a secure backend for context-aware AI development and adds flexibility for developers who want to connect AI systems to dynamic data pipelines or toolchains.

CPU (TEE) Network

Worker Nodes provide compute resources to the network. They host AI Agent Contracts (offchain programs) inside the nodes’ TEEs. The TEE protects the integrity of the workload’s code and the privacy of its data. To join the network, miners anywhere in the world can deploy the Worker Node client on TEE-compatible hardware and have it verified onchain.

The Phala mainnet initially operated on both the Khala and Phala blockchains. Khala, a parachain on Kusama, was retired on January 10, 2025, following the approval of Khala Referendum 128. This decision came ahead of Intel's discontinuation of SGX-IAS support in April 2025, which rendered Khala Worker Nodes inoperable.

All Khala assets were migrated to Ethereum, which is compatible with Intel's TDX and NVIDIA's Confidential Compute GPUs. This consolidation streamlined the ecosystem, reduced redundancy, and future-proofed Phala.

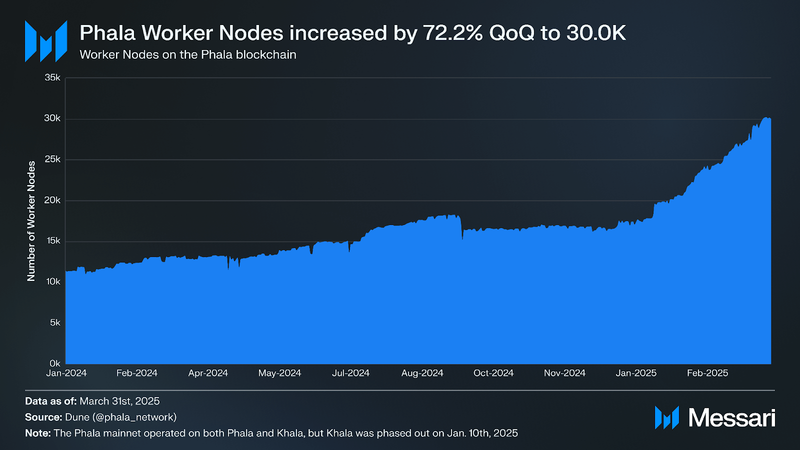

Concurrently, the Phala blockchain continued to expand. Total Phala Worker Nodes increased by 72.2% QoQ from 17,442 to 30,001, while Khala Worker Nodes decreased in preparation for the network's sunset. This migration aligns with the project's long-term strategy of consolidating operations on the Phala blockchain.

Phala has a staking mechanism that manages up to 1 million CPU cores from over 100,000 nodes. Stake delegation includes a feature called StakePool to connect Worker Nodes with PHA tokenholders. As such, PoolOwners can create and manage StakePools allowing groups of Worker Nodes to delegate their PHA to the pool.

Worker Nodes must have a certain amount of stake to perform network operations. Having more PHA staked/delegated increases worker capacity as each Worker Node must meet a minimum staking threshold to become active. The total amount of PHA staked directly determines how many Worker Nodes a StakePool can operate and how much computational capacity (CPU processing) it can offer. For example, if each Worker Node needs 2,000 PHA to become active, staking 10,000 PHA would power 5 Worker Nodes, while staking 10 million PHA would power 5,000 Worker Nodes.
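The staking arithmetic in the example above can be sketched as an integer division. This assumes every Worker Node requires the same minimum stake and that leftover stake below the threshold activates no additional node. The 2,000 PHA threshold is the illustrative figure from the text, and `workers_supported` is a hypothetical helper, not a Phala API.

```python
def workers_supported(staked_pha: int, min_stake_per_worker: int) -> int:
    """Number of Worker Nodes a StakePool can activate with a given stake.

    Assumes a uniform minimum stake per worker; any remainder below the
    threshold is not enough to activate another worker.
    """
    if staked_pha < 0:
        raise ValueError("staked_pha must be non-negative")
    if min_stake_per_worker <= 0:
        raise ValueError("min_stake_per_worker must be positive")
    return staked_pha // min_stake_per_worker


# Illustrative threshold taken from the example in the text.
MIN_STAKE = 2_000

print(workers_supported(10_000, MIN_STAKE))      # 5
print(workers_supported(10_000_000, MIN_STAKE))  # 5000
print(workers_supported(11_999, MIN_STAKE))      # 5 (1,999 PHA left over)
```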

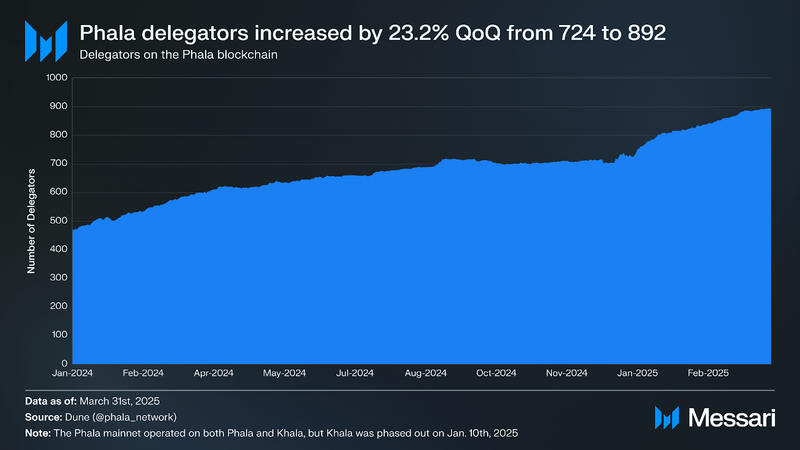

The number of delegators measures the number of PHA tokenholders contributing stake to the network. In Q1 2025, the number of delegators on the Phala blockchain increased by 23.2% QoQ, rising from 724 to 892. This continued growth points to steady engagement from Phala stakeholders.
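Quarter-over-quarter (QoQ) figures like the one above are the change in a metric divided by its value in the previous quarter. A minimal sketch using the delegator counts reported in this section (`qoq_growth` is an illustrative helper name):

```python
def qoq_growth(previous: float, current: float) -> float:
    """Percentage change from the previous quarter to the current one."""
    if previous == 0:
        raise ValueError("previous-quarter value must be non-zero")
    return 100.0 * (current - previous) / previous


# Delegators on the Phala blockchain: 724 at the end of the prior quarter,
# 892 at the end of Q1 2025.
print(f"{qoq_growth(724, 892):.1f}%")  # 23.2%
```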

Technological Developments

Phala 2.0: OP-Succinct Layer-2 Rollup

On Jan. 8, 2025, Phala Network launched Phala 2.0. The launch involved moving its infrastructure to Ethereum through the release of an OP-Succinct Layer-2 (L2) rollup. The new rollup was developed with Succinct Labs and Conduit and replaces Phala’s previous Polkadot parachain model. This allows Phala to integrate TEE-backed computation directly into Ethereum’s L2 ecosystem.

OP-Succinct extends the OP Stack by enabling chains to operate under a zero-knowledge proof (ZKP) rollup model. Instead of using a week-long fraud proof window, the network finalizes transactions with SP1-generated ZKPs. Early results show proving costs around $0.005 per transaction. Developers can write and customize rollup logic in Rust, which lowers the barrier to working with zero-knowledge infrastructure.

Phala 2.0 exposes its TEE-based services to Ethereum developers. Applications benefit from a multi-proof system that combines offchain computation using TEEs and onchain verification through ZKPs. The system is designed to accommodate high-throughput computing without depending on centralized cloud infrastructure. The architecture supports a broader set of use cases, including data privacy and AI model execution, within a verifiable and decentralized framework.

Measuring SP1 zkVM in a TEE

On March 21, 2025, Phala released a study measuring the performance of SP1 zkVM running inside a TEE on an NVIDIA H200 GPU. The experiment used Phala’s cloud computing platform and SDK to run SP1 without modifying the original code. The study tested whether zkVMs could operate in a TEE while maintaining privacy and verifiability. The results provide a reference point for evaluating TEE overhead and suitability for different workload types.

zkVMs allow developers to write programs in common languages while producing zero-knowledge proofs. These systems compile standard code into circuit representations suitable for proof generation. SP1, developed by Succinct, is one such zkVM, and was selected for the benchmark. The setup aimed to evaluate runtime characteristics and the impact of TEE constraints on performance.

The study found that most of the TEE overhead came from memory encryption, particularly during data transfers between CPU and GPU. For longer-running tasks, this overhead remained under 20% and decreased in relative terms as workloads became more complex. Short tasks experienced higher proportional overhead but may be optimized with techniques like pipelining. The findings suggest that TEE-enabled zkVMs are more efficient when used for computationally intensive applications.
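One way to see why relative overhead shrinks for longer tasks is a toy cost model: a fixed per-run cost (for example, setup and initial encrypted transfers) plus a cost proportional to runtime. This model and the parameter values below are assumptions chosen for illustration. They are not the study's methodology or its measured numbers.

```python
def relative_overhead(
    native_seconds: float,
    fixed_cost_seconds: float,
    proportional_cost: float,
) -> float:
    """Relative slowdown of a TEE run versus a native run under the toy model.

    tee_time = native_time * (1 + proportional_cost) + fixed_cost
    overhead = (tee_time - native_time) / native_time
             = proportional_cost + fixed_cost / native_time
    """
    if native_seconds <= 0:
        raise ValueError("native_seconds must be positive")
    tee_seconds = native_seconds * (1.0 + proportional_cost) + fixed_cost_seconds
    return (tee_seconds - native_seconds) / native_seconds


# Hypothetical parameters: 2 s fixed cost, 5% proportional cost.
FIXED_COST = 2.0
PROPORTIONAL_COST = 0.05

for native in (10.0, 100.0, 1000.0):
    overhead = relative_overhead(native, FIXED_COST, PROPORTIONAL_COST)
    print(f"native={native:>6.0f}s  overhead={overhead:.1%}")
```

Under these assumed parameters, the fixed cost dominates short runs and is amortized over long ones, which matches the qualitative pattern the study describes.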

Phala compared the H200 with AWS’s L4 GPU in terms of memory, compute power, and cost. The H200 provided roughly six times the resources at about three times the hourly price. Most current zkVM workloads did not fully utilize the H200’s memory or compute capacity. This indicates the potential to support larger or more complex applications using the same hardware.
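The price-performance comparison above can be made concrete with a rough calculation. The sketch below takes the approximate ratios from the text (about 6x the resources at about 3x the hourly price) and assumes, for illustration only, that useful work scales linearly with the share of the H200 a workload actually uses and that the L4 is fully utilized. The function names are hypothetical.

```python
def resources_per_dollar_ratio(
    resource_ratio: float,
    price_ratio: float,
    utilization: float = 1.0,
) -> float:
    """Resources per dollar on the larger GPU relative to the smaller one.

    `utilization` is the fraction of the larger GPU's resources a workload
    actually uses (1.0 means fully utilized).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return resource_ratio * utilization / price_ratio


def break_even_utilization(resource_ratio: float, price_ratio: float) -> float:
    """Utilization at which both GPUs deliver the same resources per dollar."""
    if resource_ratio <= 0:
        raise ValueError("resource_ratio must be positive")
    return price_ratio / resource_ratio


# Approximate ratios reported in the comparison (H200 versus L4).
RESOURCE_RATIO = 6.0
PRICE_RATIO = 3.0

print(resources_per_dollar_ratio(RESOURCE_RATIO, PRICE_RATIO))       # 2.0
print(break_even_utilization(RESOURCE_RATIO, PRICE_RATIO))           # 0.5
print(resources_per_dollar_ratio(RESOURCE_RATIO, PRICE_RATIO, 0.25)) # 0.5
```

Under these assumptions, a workload would need to use about half of the H200's resources before it matches the L4 on resources per dollar, which is why underutilization matters for the comparison.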

The study positions TEE-capable GPUs as a possible approach to executing private, verifiable computation in resource-intensive environments. Future use cases may include zero-knowledge systems in domains that process sensitive data or require attestation. The evaluation focused on technical performance rather than application-layer design or deployment readiness.

Partnerships and Integrations

Phala Network continued to expand its ecosystem through several technical partnerships and integrations in Q1 2025, with a focus on secure AI infrastructure, TEE-supported AI agents, and confidential computing.

Fairblock

In March 2025, Phala collaborated with Fairblock to develop a hybrid TEE–MPC (Multi-Party Computation) system that addresses secure key persistence in confidential AI applications. The system uses Fairblock’s FairyRing MPC network and conditional decryption protocol to recover ephemeral TEE keys following failure events. Smart contracts monitor liveness and trigger recovery workflows, while TEEs perform verifiable computation bound to attestation reports.

Apro

In March 2025, Apro integrated its AI Transfer Trust Protocols (ATTPs) with Phala Network to create a layered security model for AI agents. ATTPs provide verifiable data delivery, while Phala’s TEE infrastructure ensures secure execution. Together, they form a dual-layer framework: ATTPs validate incoming data, and Phala isolates computation.

Autonomys

In February 2025, Phala partnered with Autonomys Network to support secure, crosschain decentralized applications using AI agents. Autonomys’ Auto Agents Framework integrates with Phala Cloud’s confidential compute layer, which enables agents to execute in private environments while leveraging Autonomys’ distributed storage and modular execution stack.

Exabits

In February 2025, Phala and Exabits collaborated to deliver GPU clusters with TEE support for privacy-preserving AI workloads. Exabits provides access to NVIDIA H200 GPUs equipped with TEE functionality, while Phala supplies the runtime and remote attestation infrastructure. The combined stack allows models like DeepSeek R1 to be run in isolated, confidential environments, addressing concerns around data leakage in public cloud deployments.

Phala Joins NVIDIA Inception Program

In January 2025, Phala Network joined the NVIDIA Inception program to advance its confidential AI computing initiatives. By integrating NVIDIA GPU TEE technology, Phala enhances its decentralized cloud infrastructure with secure model training, verifiable machine learning, and federated learning capabilities. Access to NVIDIA’s resources will help Phala scale privacy-preserving AI solutions for applications.

Holoworld and Spore

In January 2025, Spore, a project leveraging Phala Network, launched on Holoworld’s Agent Market during its private beta phase. This integration connects Spore’s autonomous AI agents with Holoworld’s no-code, composable agent infrastructure. The agents operate in TEE-secured environments hosted on Phala Cloud, with the first deployment starting via the AVA agent.

GoPlus Security

In January 2025, Phala entered a technical partnership with GoPlus Security to co-develop model analysis and auditing tools for AI systems running in TEE environments. The collaboration is focused on verifying agent behavior and improving tamper resistance in decentralized AI infrastructure. Both organizations contribute to the ElizaOS framework, with Phala providing secure execution infrastructure, and GoPlus delivering security intelligence and auditing capabilities.

xNomadAI

In January 2025, xNomadAI, an open-source platform for autonomous NFT agents, integrated Phala Cloud to secure its infrastructure. The platform transforms AI agents into transferable and independently operating NFTs. Through this collaboration, xNomadAI ensures its agents are verifiable and resistant to tampering by executing in confidential computing environments.

NOTAI

In January 2025, the NOTAI Agent was integrated with Phala Cloud, with full support for remote attestation using Phala’s RA Explorer. This allows users and developers to verify the trustworthiness of the NOTAI Agent’s execution within a TEE. The partnership aligns with both teams’ focus on enabling trustless AI applications. NOTAI’s deployment demonstrates how Phala’s infrastructure can support privacy-preserving agents at scale, while also providing user-facing transparency via real-time TEE proof verification.

Closing Summary

Q1 2025 was a foundational quarter for Phala Network, marked by the launch of Phala 2.0 on Ethereum and the rollout of Phala Cloud. These developments transitioned the network from a Polkadot parachain-based model to an Ethereum Layer-2 that enables access to TEE-based computation. With over 1,000 Confidential Virtual Machines deployed, Phala demonstrated early product-market fit among teams building AI agents and privacy-sensitive applications. The network also grew operationally, with a 72.2% increase in Phala Worker Nodes and a 23.2% rise in delegators following the sunset of Khala.

Partnership momentum continued across agent frameworks, security protocols, and GPU-backed computing. Notable integrations, including GoPlus, xNomadAI, NOTAI, and Fairblock, showcased how Phala’s TEE infrastructure can support verifiable offchain agents and private computation at scale. With the new Ethereum-based rollup and hardware-aligned architecture, Phala is positioned to serve as a foundational layer for confidential AI applications throughout 2025.
