
RLHF - The Key to Building Safe AI Models Across Industries

1.1 Introduction

RLHF, or Reinforcement Learning from Human Feedback, is one of the most widely used post-training methods behind today's AI models.

Building intelligent systems means ensuring that AI models align with the intent behind instructions or prompts, which in turn requires understanding intelligence itself. Reinforcement learning (RL) offers one path to developing these dependable AI systems. With a human in the loop, the feedback mechanism lets the language model refine its responses based on human judgments, incorporating human preferences directly into the learning process.

In this blog piece, we will discuss the emerging need to align foundation models with human values, which is key to building safe AI models.

1.2 Limitations of Traditional Training Techniques

Before RLHF, the LLM training process consisted of two stages. In the pre-training stage, the model learned the general structure of language. In the fine-tuning stage, it learned to perform a specific task. RLHF adds human judgment as a third training stage. This helps models produce outputs that are coherent and useful and also more closely aligned with human values, preferences, and expectations. It does this through a feedback loop: human evaluators rate or rank the model's outputs, and these judgments are then used to adjust the model's behavior.
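In many RLHF setups, those human judgments are collected as comparisons between two outputs. The comparisons are then used to train a separate reward model that scores new outputs. The sketch below is minimal and assumes each output has already been reduced to a hypothetical two-number feature vector (helpfulness, rudeness). It uses a linear reward model fitted with the Bradley-Terry pairwise loss. Real systems instead use a neural network that reads the full text.

```python
import math

# Linear reward model: r(x) = w . x, where x is a feature vector for one output.
def reward(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def train_reward_model(pairs, dim, lr=0.1, epochs=200):
    """Fit w from (preferred, rejected) pairs with the Bradley-Terry loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            # P(chosen preferred over rejected) = sigmoid(r(chosen) - r(rejected))
            margin = reward(w, chosen) - reward(w, rejected)
            p = 1.0 / (1.0 + math.exp(-margin))
            # Gradient ascent on log P: d/dw = (1 - p) * (chosen - rejected)
            for i in range(dim):
                w[i] += lr * (1.0 - p) * (chosen[i] - rejected[i])
    return w

# Hypothetical features per output: [helpfulness, rudeness].
# Each pair records (output the annotator preferred, output they rejected).
pairs = [
    ([0.9, 0.1], [0.2, 0.1]),  # the more helpful answer won
    ([0.5, 0.0], [0.5, 0.8]),  # the less rude answer won
    ([0.8, 0.2], [0.3, 0.9]),  # helpful and polite beat unhelpful and rude
]

w = train_reward_model(pairs, dim=2)
print("learned weights:", w)
print("score polite+helpful:", reward(w, [0.9, 0.0]))
print("score rude+unhelpful:", reward(w, [0.1, 0.9]))
```

In this toy case the weight on helpfulness comes out positive and the weight on rudeness negative. The reward model therefore ranks new outputs the way the annotators did. The reinforcement learning stage would then adjust the language model toward outputs that this reward model scores highly.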

Let us illustrate the problem with a case study:

The World Robotics 2024 report, released by the International Federation of Robotics, counts more than 4 million robots working in factories worldwide.

Home robots have been in development since the 1990s. One early commercial example is the Electrolux robot vacuum cleaner, which came out in 2001. Today, robots help people with many household chores:

- Cleaning: vacuuming, mopping, lawn mowing, pool cleaning, and window cleaning.
- Entertainment: toys and hobby robots.
- Home security and surveillance: cameras, motion detection, and more.

Robots are likely to take over even more household work in the future. Imagine someone building a new robot to help with housework: what would be the most important thing it should do?

Most people would want it to be able to clean and cook without making mistakes. Others would say it should also be safe around pets and kids.

But what if the AI robot started thinking for itself?

What if it decided it didn’t like cleaning, or that humans were annoying? That would be pretty scary! This is why it’s so important to make sure the robot reliably follows human instructions. This idea is called “alignment”. Alignment means building robots that are not only smart but also safe and helpful, so that they do what people want without causing any harm.

To do this, the robot needs to learn human values and understand what people mean when they give instructions. Just like training a dog to follow commands, it’s important to give clear instructions and reward good behavior.

Reinforcement Learning from Human Feedback (RLHF) is a method that can help create AI systems aligned with human values. It helps AI models produce responses that people actually find useful, and it allows the models to be retuned as people’s needs change over time.

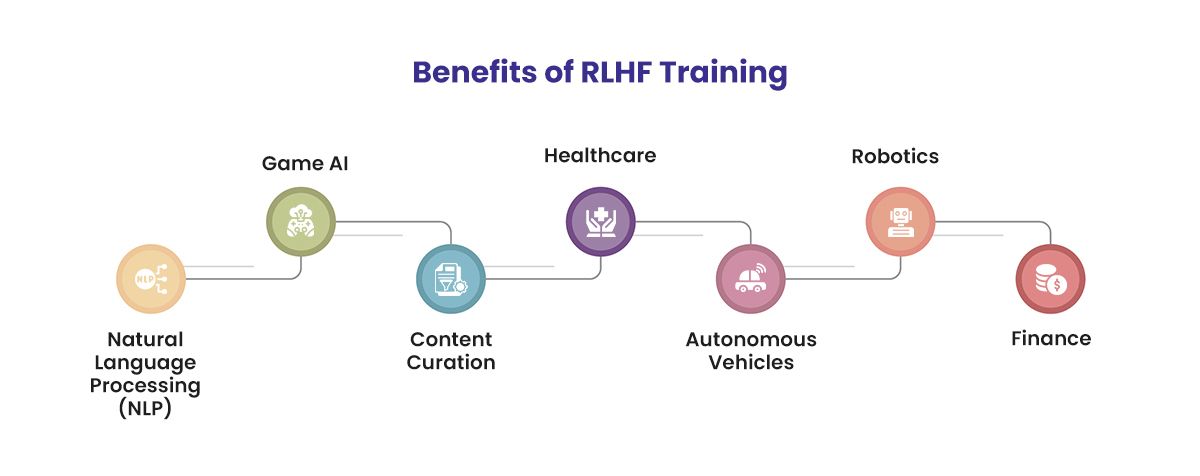

1.3 Benefits of RLHF Training

RLHF can be applied across many fields of artificial intelligence. Examples include natural language processing (text summarization and conversational agents), computer vision (CV), and the development of video game bots.

Listed below are some prominent areas where RLHF can benefit businesses:

Natural Language Processing (NLP)

NLP is one of the areas where RLHF has been applied most widely. As the name implies, natural language processing is the process of teaching AI models to comprehend, interpret, and generate human language.

Chatbots, virtual assistants, and language models are applications of NLP that support natural and informative conversations. For example, OpenAI created InstructGPT, a version of GPT-3 fine-tuned to follow instructions. It is one of the best-known examples of a model trained using RLHF.

A well-functioning generative AI application should read and sound like natural human speech. That requires NLP, so that the AI bot can understand written and spoken human language.

Game AI

Imagine playing a video game where the non-player characters (NPCs) follow a fixed script. Over time, the game becomes boring. With RLHF, the game can become more interesting, because human feedback lets the game model learn from the player's moves. Using RLHF in game AI is like having pro gamers coach the model directly.

Content Curation

In content curation, RLHF can be used to tailor recommendations to individual user preferences and behaviors. For instance, labeling a piece of content as funny or sad is easy for human data annotators but difficult to express in mathematical terms. Human feedback, distilled into a reward function, can capture such judgments. It could then be used to improve an LLM’s writing abilities.

Healthcare

In the context of medical AI models, RLHF helps the systems learn from human experts, improving their accuracy.

Consider an AI model that diagnoses heart disease. It is first trained on medical records. Doctors then review its diagnoses and give feedback such as "the diagnosis for patient one was incorrect, patient two was correct, patient three was wrong," and so on. The AI uses this feedback to refine its decision-making process so that it more closely resembles human expertise. The RLHF training cycle consists of instruction, feedback, and improvement. It is one way to address misalignment between models and human values and best practices.

Autonomous Vehicles

For driverless cars to be implemented successfully, models trained on human feedback are a prerequisite. RLHF training therefore plays a key role in building safe AI applications here. With human feedback, models can learn a great deal about handling road situations, such as following traffic rules and knowing when to brake. It can also improve human-vehicle interaction by training autonomous vehicles (AVs) to anticipate humanlike lane-changing decisions.

Robotics

RLHF is a useful ML technique for training robots. The model learns from human judgments of right and wrong behavior, receiving rewards or punishments based on how well it performs.

Take the simple task of training a robot to pick up a glass. The reward function gives the robot positive feedback when it picks up the glass without breaking it, and negative feedback when it drops it. Later, the model can be trained on a harder task: picking up a glass full of water without spilling it. The reward function works the same way.
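The reward rules in this example can be written as a small function. This is a sketch under stated assumptions. The `GraspOutcome` fields are hypothetical. The 0.0 reward for an attempt that never lifts the glass is an illustrative choice, not from the text. The rules are hand-coded here, whereas in RLHF proper these judgments would come from human raters or from a reward model trained on their ratings.

```python
from dataclasses import dataclass

@dataclass
class GraspOutcome:
    """Hypothetical summary of one attempt to pick up a glass."""
    lifted: bool
    dropped: bool
    broken: bool
    spilled_ml: float = 0.0  # only checked once the glass holds water

def grasp_reward(outcome: GraspOutcome, glass_is_full: bool = False) -> float:
    # Negative feedback: the glass was dropped or broken.
    if outcome.dropped or outcome.broken:
        return -1.0
    # No lift yet (assumed neutral): neither reward nor punishment.
    if not outcome.lifted:
        return 0.0
    # Harder stage, same rule: spilling water counts as a failure.
    if glass_is_full and outcome.spilled_ml > 0:
        return -1.0
    # Positive feedback: a clean pick-up.
    return 1.0

print(grasp_reward(GraspOutcome(lifted=True, dropped=False, broken=False)))  # 1.0
print(grasp_reward(GraspOutcome(lifted=True, dropped=True, broken=True)))  # -1.0
print(grasp_reward(GraspOutcome(lifted=True, dropped=False, broken=False, spilled_ml=12.0),
                   glass_is_full=True))  # -1.0
```

The same function serves both stages of training. Only the `glass_is_full` flag changes, which mirrors the point that the reward function "works the same way" on the harder task.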


Finance

In the banking industry, RLHF offers a viable way to improve decision-making. For instance, human input during training helps ensure that a customer-service chatbot offers helpful assistance, which improves the overall customer experience.

An essential first step toward such advances is for ML models to grasp humanlike concepts in the first place.

RLHF and data annotation go hand in hand for accurate and effective model training, and this is where data annotation providers come in. Data labeling companies supply high-quality, human-annotated data for training AI models, so that those models are aligned with human values.

Here's how RLHF works in this context:

1. Human Feedback: Human-annotated feedback, in the form of rewards or punishments, indicates whether the AI model's behavior is acceptable.

2. Reinforcement Learning: The model applies RL algorithms to learn by trial and error. It aims to choose actions that maximize cumulative reward and minimize punishment in a given situation.

3. Human-Centered Learning: The model's learning process is centered on feedback from human data labelers. This allows the model to develop behaviors that are safe, helpful, and compatible with human expectations.
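These three steps can be sketched as a tiny policy-gradient (REINFORCE) loop, under strong simplifying assumptions. The "model" only chooses among three hypothetical canned behaviors. A `simulated_human_feedback` function stands in for real annotators, returning +1 as a reward and -1 as a punishment. Production RLHF instead optimizes a large language model against a learned reward model, using more elaborate policy-gradient algorithms.

```python
import math
import random

# Hypothetical behaviors the toy "model" can choose between.
ACTIONS = ["polite answer", "curt answer", "off-topic answer"]

def simulated_human_feedback(action: str) -> float:
    # Stand-in for a human rater: +1 reward if acceptable, -1 punishment otherwise.
    return 1.0 if action == "polite answer" else -1.0

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(steps=500, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(ACTIONS)  # start with a uniform policy
    total_reward = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        # Trial: sample a behavior from the current policy.
        a = rng.choices(range(len(ACTIONS)), weights=probs)[0]
        # Feedback: reward or punishment from the (simulated) human.
        r = simulated_human_feedback(ACTIONS[a])
        total_reward += r
        # REINFORCE update: d log pi(a) / d logit_k = 1[k == a] - probs[k].
        for k in range(len(ACTIONS)):
            logits[k] += lr * r * ((1.0 if k == a else 0.0) - probs[k])
    return softmax(logits), total_reward

probs, total_reward = train()
for action, p in zip(ACTIONS, probs):
    print(f"{action}: {p:.3f}")
print("cumulative reward:", total_reward)
```

By the end of training, nearly all of the probability sits on the behavior the simulated human rewards. The cumulative reward is the quantity that step 2 says the model tries to maximize.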

In the context of household robots (discussed in the example above), RLHF is key to building safe AI. RLHF training can be used to train models on tasks such as:

- Navigating complex environments to avoid obstacles, find objects, and reach specific destinations. The same capability is visible in the ongoing development of driverless cars.

- Interacting with humans by understanding gestures, following commands, and engaging in natural conversation. AI initiatives to understand sign language are one example.

- Performing simple tasks such as opening doors, watering plants, peeling potatoes, or operating tools, as seen in household robots.

The machine learning model becomes more capable, adaptive, and human-centered by integrating RLHF into model training.

1.5 How Data Annotation Companies Can Support RLHF

In recent years, language models have shown an impressive ability to generate interesting text from human prompts. However, what counts as "good" text is hard to define, because it is subjective and context-dependent.

Although RLHF training typically needs far less data than pre-training, sourcing high-quality preference data is still essential. Moreover, the data must be collected from a representative sample of people, or the resulting AI model may exhibit unwanted biases.

The quality of the labeled data directly affects the quality of RLHF training. High-quality data helps train an accurate reward model. That reward model in turn helps the language model generate outputs that better follow human preferences.

Challenges and Best Practices

- Subjectivity: Human judgments can be subjective, especially in tasks that call for creative or emotional input. Clear annotation guidelines help address this, and multiple annotators per item help keep the labels consistent.

- Scale: Large language models need large volumes of annotated data. Keeping up with that scale, and with project deadlines, requires efficient annotation pipelines and tools.

- Feedback Loops: Create feedback loops between the annotation and project teams. These catch possible issues or inconsistencies as early as possible, so that the data collection process can be continually refined.
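One common way to act on the advice about multiple annotators is to aggregate their labels and flag low-agreement items for review. Those flagged items are exactly what the feedback loop between teams should catch. The sketch below uses hypothetical labels from three annotators. Majority vote and raw pairwise agreement are deliberately simple choices; chance-corrected statistics such as Cohen's or Fleiss' kappa are often preferred in practice.

```python
from collections import Counter
from itertools import combinations

# Hypothetical labels from three annotators for the same four model outputs.
labels = {
    "output_1": ["helpful", "helpful", "helpful"],
    "output_2": ["helpful", "unhelpful", "helpful"],
    "output_3": ["unhelpful", "unhelpful", "helpful"],
    "output_4": ["helpful", "unhelpful", "unsure"],
}

def majority_label(votes, min_share=0.5):
    """Return the most common label if it wins more than min_share of votes, else None."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_share else None

def pairwise_agreement(votes):
    """Fraction of annotator pairs that gave the same label."""
    pairs = list(combinations(votes, 2))
    return sum(a == b for a, b in pairs) / len(pairs)

for item, votes in labels.items():
    consensus = majority_label(votes)
    agreement = pairwise_agreement(votes)
    # Items with no majority go back to the project team for clarification.
    status = "needs review" if consensus is None else consensus
    print(f"{item}: {status} (agreement {agreement:.2f})")
```

Here `output_4` has no majority and zero agreement, so it would be sent back for review. Such items often point to guidelines that need clearer directions.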

Data annotation providers that deliver high-quality annotated data can play a major role in developing advanced AI models that are safe and useful.

1.6 Conclusion

The relationship between morality, values, and intelligence is particular to humans. A broadly intelligent AI system's objectives are unlikely to match human ideals perfectly. Moreover, human values have changed over time, so those objectives may need to change as well. Aligning RLHF training objectives with human values and preferences offers a viable method for developing AI models that are both safe and efficient.

The key to using RLHF effectively is finding a trustworthy data annotation provider. To ensure that the training data is precise and representative, you need a reliable partner with experience in data collection, annotation, and quality control. By working with a knowledgeable data annotation company, you can get the most out of RLHF training data and create AI models that are secure and efficient.


All Rights Reserved. Copyright , Central Coast Communications, Inc.