RAG: An Introduction for Beginners

In this blog post, we’ll explore:

- Problems with traditional LLMs

- What is Retrieval-Augmented Generation (RAG)?

- How RAG works

- Real-world implementations of RAG

https://youtu.be/zW1ELMo7D5A?embedable=true

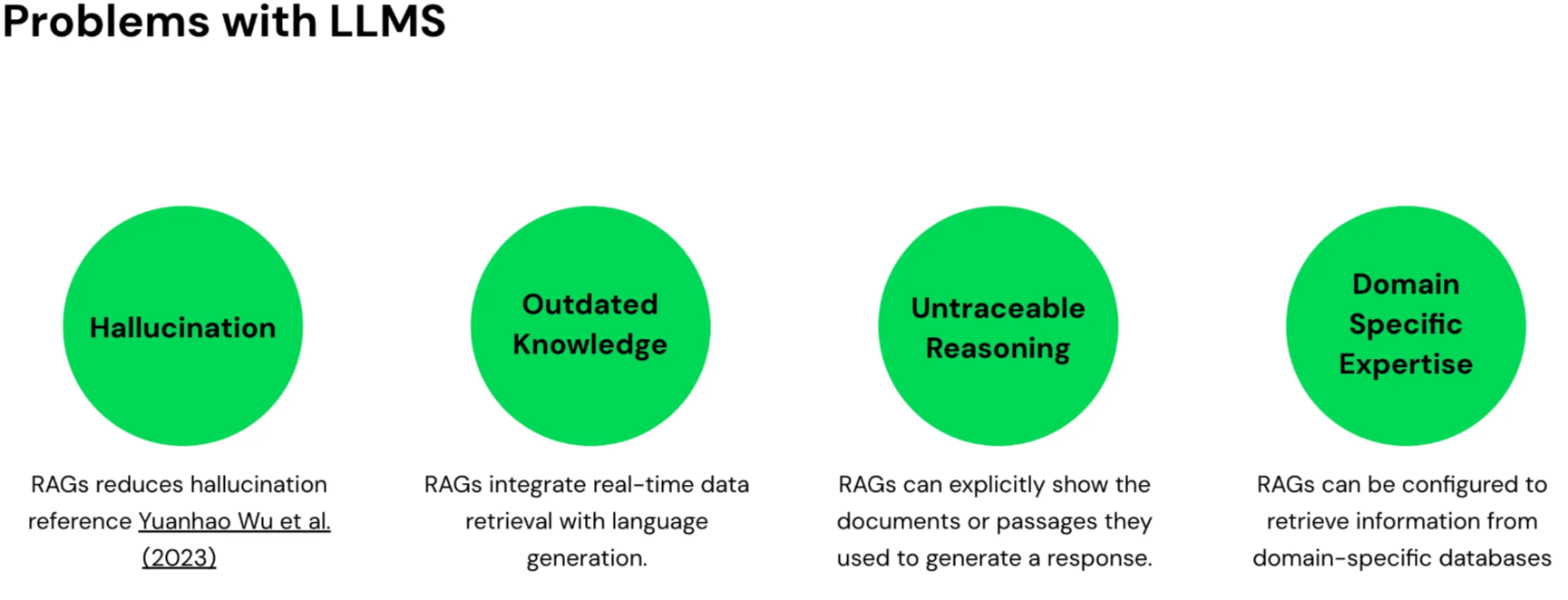

Problems With Traditional LLMs

While LLMs have revolutionized the way we interact with technology, they come with some significant limitations:

- Hallucination: LLMs sometimes hallucinate, meaning they provide factually incorrect answers. This occurs because they generate responses based on patterns in the data they were trained on, not always on verified facts. Example: An AI might state that a historical event occurred in a year when it didn’t.

- Outdated Knowledge: Models like GPT-4 have a knowledge cutoff date fixed at training time. A model whose training data ends in, say, May 2024 lacks information on events or developments that occurred after that date. Implication: The AI cannot provide insights on recent advancements, news, or data.

- Untraceable Reasoning: LLMs often provide answers without clear sources, leading to untraceable reasoning. Transparency: Users don’t know where the information came from. Bias: The training data may contain biases, affecting the output. Accountability: Difficult to verify the accuracy of the response.

- Lack of Domain-Specific Expertise: While LLMs are good at generating general responses, they often lack domain-specific expertise. Outcome: Answers may be generic and fail to go deep into specialized topics.

What Is Retrieval-Augmented Generation (RAG)?

Imagine RAG as your personal assistant who can memorize thousands of pages of documents. You can later query this assistant to extract any information you need.

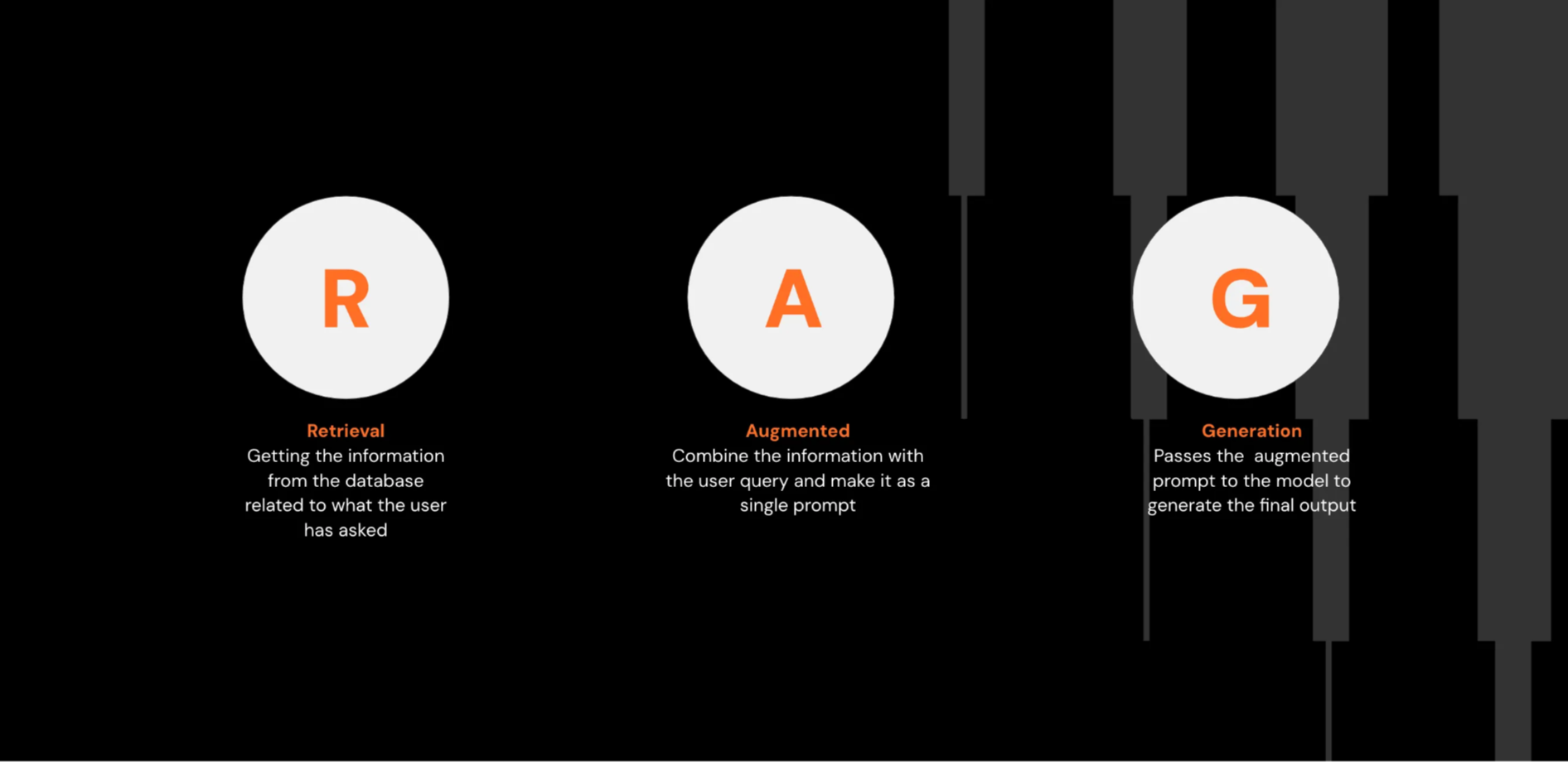

RAG stands for Retrieval-Augmented Generation, where:

- Retrieval: Fetches information from a database.

- Augmentation: Combines the retrieved information with the user’s prompt.

- Generation: Produces the final answer using an LLM.

How RAG Works

Traditional LLM Approach:

- A user asks a question.

- The LLM generates an answer based solely on what it learned during training.

- If the question falls outside what the model learned in training, it may provide incorrect or generic answers.

RAG Approach:

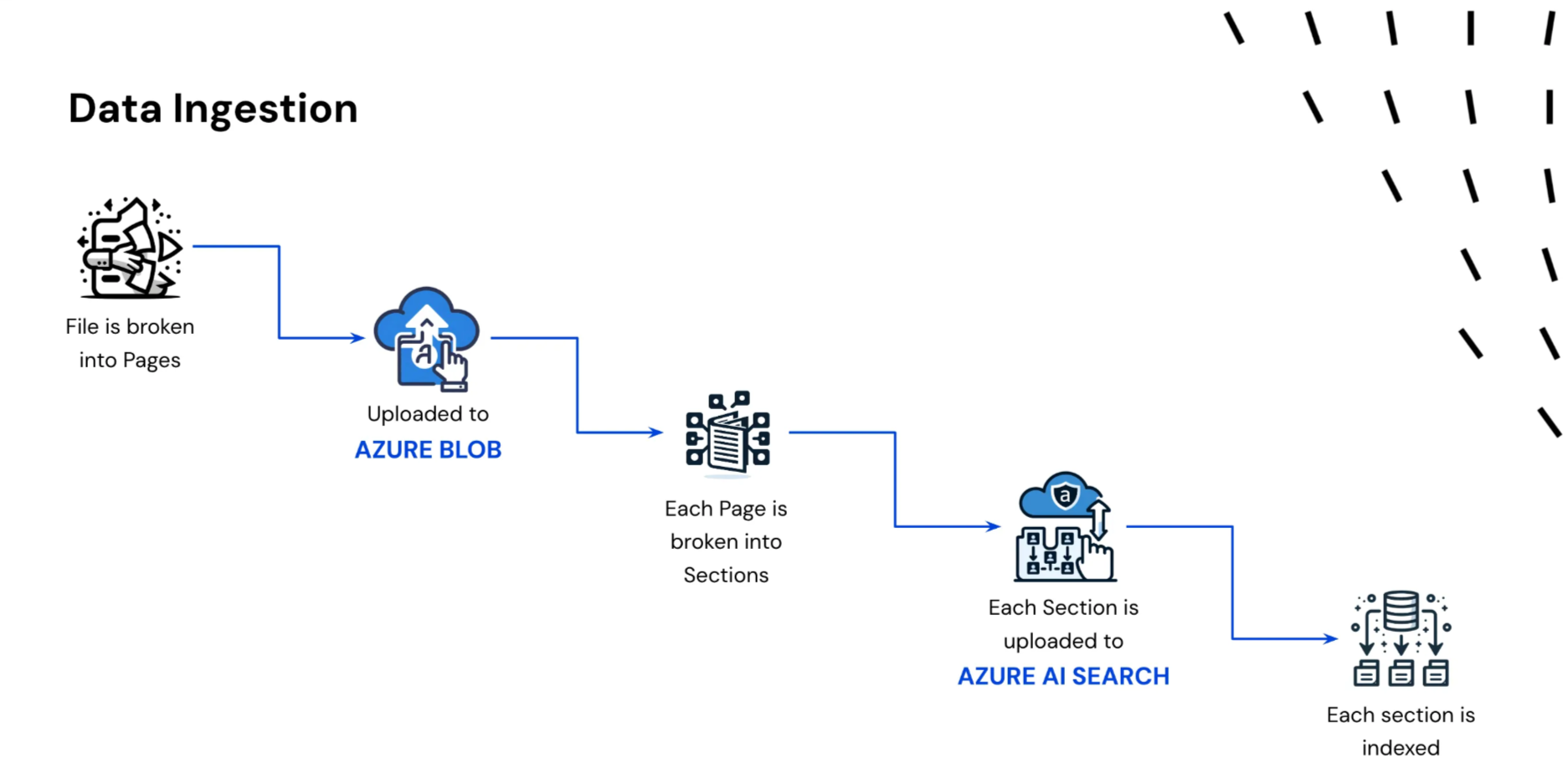

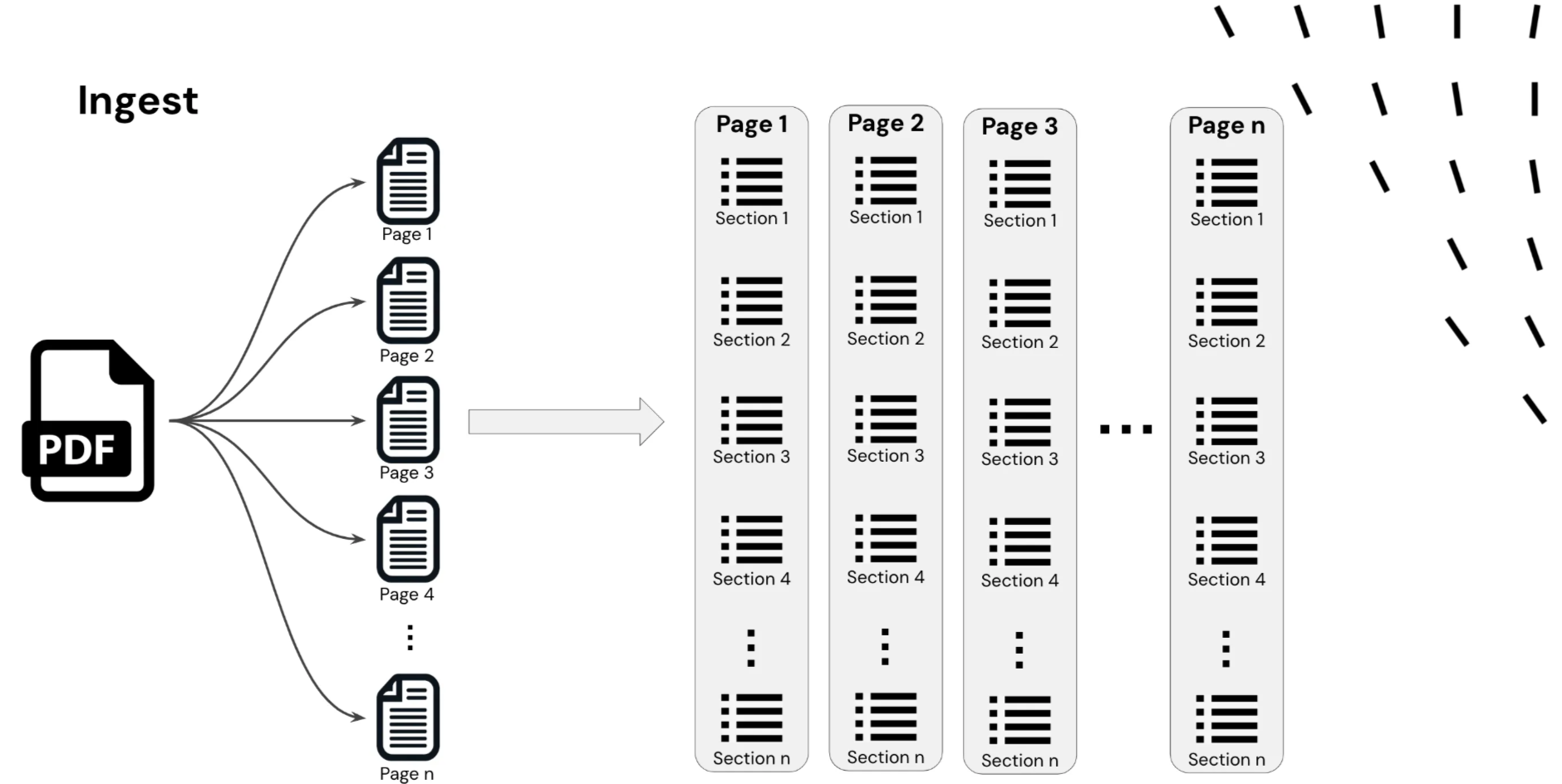

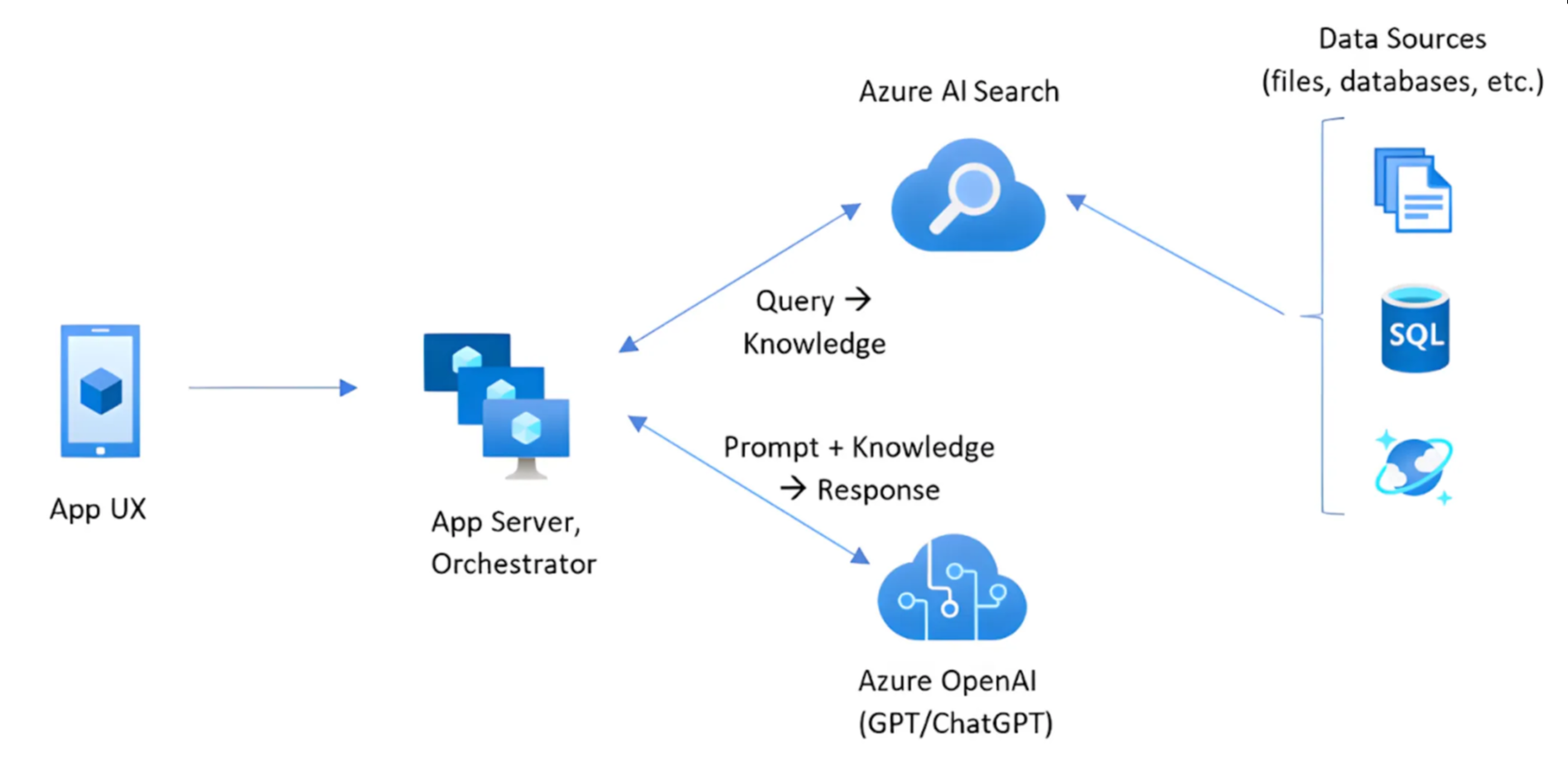

1. Document Ingestion:

- A document is broken down into smaller chunks.

- These chunks are converted into embeddings (vector representations).

- The embeddings are indexed and stored in a vector database.
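
The ingestion step can be sketched in a few lines of Python. This is only an illustration under loud assumptions: `chunk_text`, `embed`, and `ingest` are hypothetical helper names, the "embedding" is a toy hashed bag-of-words vector rather than a real embedding model, and the "vector database" is a plain in-memory list.

```python
import hashlib
import math
import re

DIM = 64  # toy embedding size, chosen arbitrarily for this sketch


def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into word-based chunks that overlap by `overlap` words."""
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def embed(text):
    """Toy stand-in for an embedding model: hashed word counts, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def ingest(document):
    """Return an in-memory 'vector store': a list of (chunk, embedding) pairs."""
    return [(chunk, embed(chunk)) for chunk in chunk_text(document)]
```

In a real system, the `embed` function would call an embedding model and the list would be replaced by a vector database, but the shape of the data (chunk text paired with its vector) stays the same.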

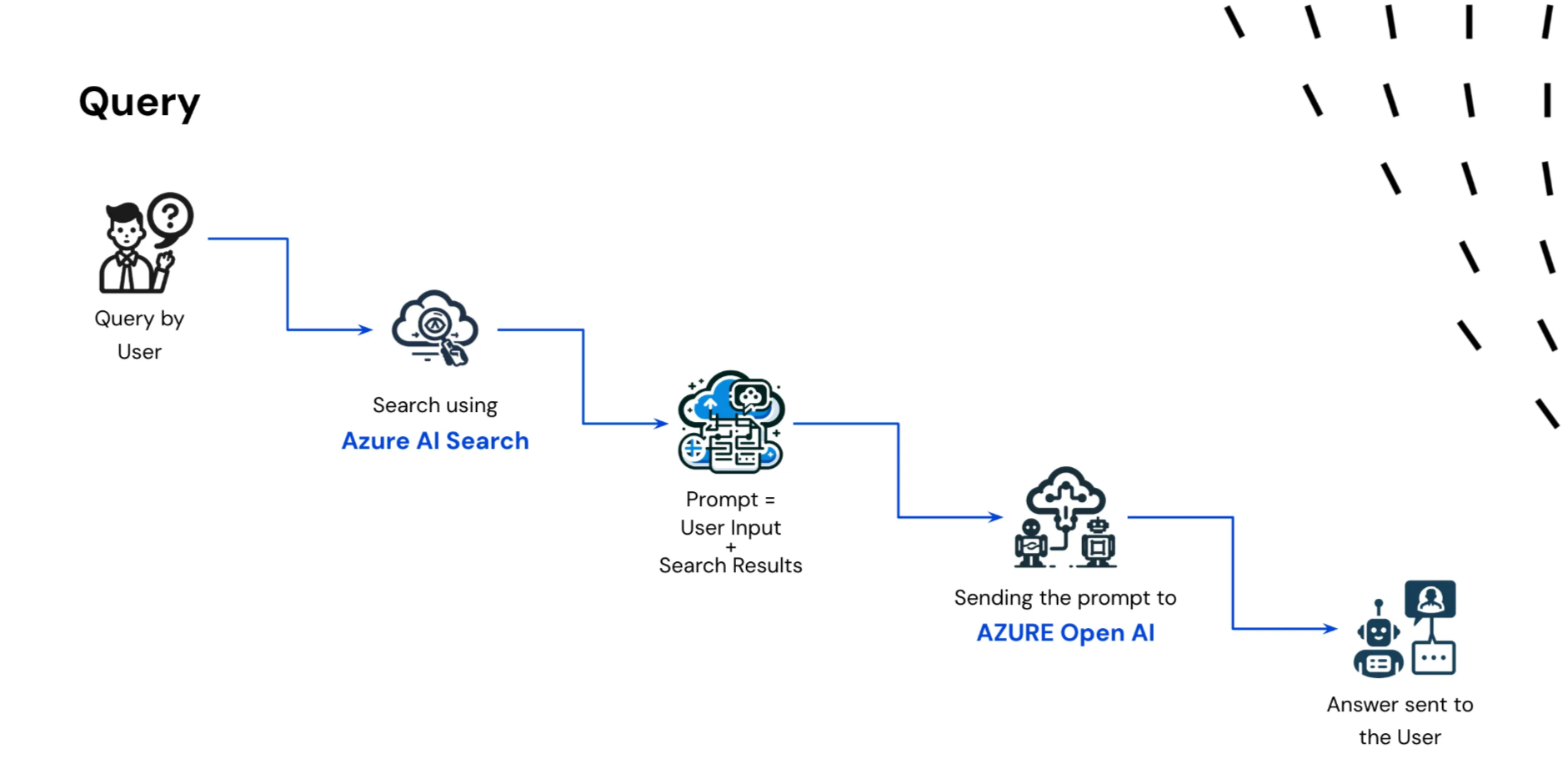

2. Query Processing:

- The user asks a question.

- The question is converted into an embedding using the same embedding model that was used for the document chunks, so that both live in the same vector space.

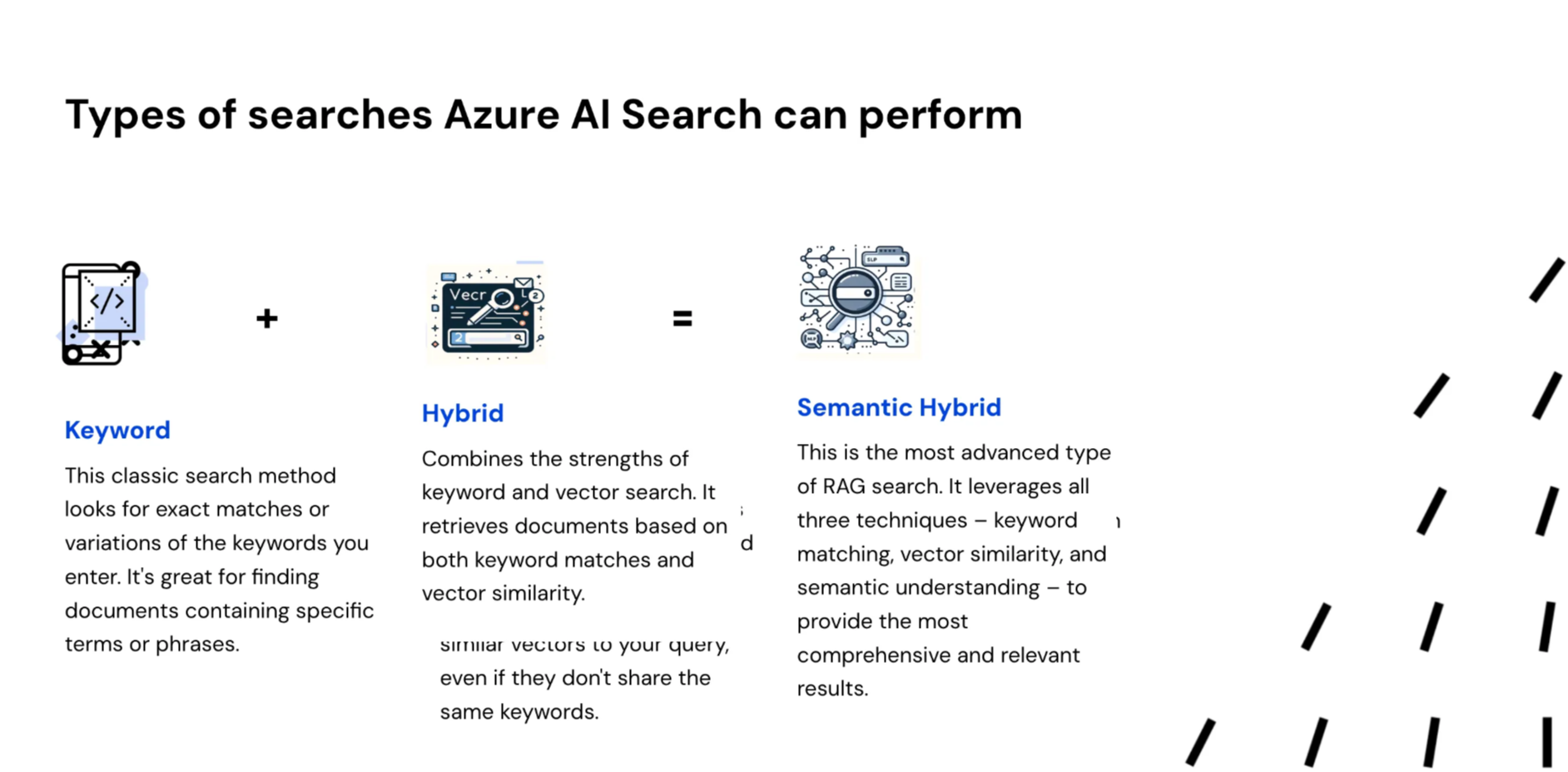

- A search engine queries the vector database to find the most relevant chunks.

- The top relevant results are retrieved.
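
As a rough sketch of query processing, the snippet below ranks stored chunks by cosine similarity to the question. The names `retrieve`, `toy_embed`, and `VOCAB` are made up for this example, and the keyword-count embedding is a deliberately crude assumption standing in for a real embedding model. Vector databases typically use approximate nearest-neighbor indexes instead of the brute-force scan shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, store, embed, top_k=2):
    """Embed the question with the same function used at ingestion, then rank chunks."""
    query_vec = embed(question)
    scored = [(cosine_similarity(query_vec, vec), chunk) for chunk, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]


# Toy embedding for the demo: counts of a few hand-picked keywords.
VOCAB = ["refund", "shipping", "password", "invoice"]


def toy_embed(text):
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


chunks = [
    "refund requests are processed within five days",
    "shipping takes three to seven business days",
    "reset your password from the login page",
]
store = [(chunk, toy_embed(chunk)) for chunk in chunks]
top = retrieve("how do I get a refund", store, toy_embed, top_k=1)
print(top)
```

Passing `embed` in as a parameter makes the key constraint explicit: the question and the chunks must be embedded by the same function, otherwise the similarity scores are meaningless.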

3. Answer Generation:

- The retrieved information and the user’s question are combined.

- This combined input is passed to the LLM (like GPT-4 or LLaMA).

- The LLM generates a context-aware answer.

- The answer is returned to the user.
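
The answer generation step comes down to assembling a prompt from the retrieved chunks and the question, then handing it to a model. The sketch below assumes hypothetical helpers `build_prompt` and `generate_answer`, a prompt wording invented for this example, and an `llm` argument that is any callable taking a prompt string and returning text. A real deployment would pass in a client for GPT-4, LLaMA, or another model.

```python
def build_prompt(question, retrieved_chunks):
    """Combine numbered context chunks and the user's question into one prompt."""
    context = "\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def generate_answer(question, retrieved_chunks, llm):
    """`llm` is any callable that maps a prompt string to a completion string."""
    return llm(build_prompt(question, retrieved_chunks))


def fake_llm(prompt):
    # Placeholder for a real model call; it only reports the prompt size.
    return f"[stub reply to a {len(prompt)}-character prompt]"


print(generate_answer(
    "How long do refunds take?",
    ["refund requests are processed within five days"],
    fake_llm,
))
```

Numbering the chunks in the prompt is one simple way to let the model cite which chunk supports its answer, which helps with the traceability problem described earlier.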

Real-World Implementations of RAG

General Knowledge Retrieval:

- Input extensive documents (hundreds or thousands of pages).

- Efficiently extract specific information when needed.

Customer Support:

- RAG-powered chatbots can access real-time customer data.

- Provide accurate and personalized responses in sectors like finance, banking, or telecom.

- Improved first-response rates lead to higher customer satisfaction and loyalty.

Legal Sector:

- Assist in contract analysis, e-discovery, and regulatory compliance.

- Streamline legal research and document review processes.

As a saying often attributed to Thomas Edison goes:

“Vision without execution is hallucination.”

In the context of AI:

“LLMs without RAG are hallucination.”

By integrating RAG, we can overcome many limitations of traditional LLMs, providing more accurate, up-to-date, and domain-specific answers.

In upcoming posts, we’ll explore more advanced topics on RAG and how to obtain even more relevant responses from it. Stay tuned!

Thank you for reading!

