and the distribution of digital products.

Over-Engineered Kubernetes Is a Trap

\

HelmHelm was designed to simplify Kubernetes application deployment, but it has become another abstraction layer that introduces unnecessary complexity. Helm charts often hide the underlying process with layers of Go templating and nested values.yaml files, making it difficult to understand what is actually being deployed. Debugging often requires navigating through these files, which can obscure the true configuration. This approach shifts from infrastructure-as-code to something less transparent, making it harder to manage and troubleshoot.

imagePullPolicy: {{ .Values.defaultBackend.image.pullPolicy }} {{- if .Values.defaultBackend.extraArgs }} args: {{- range $key, $value := .Values.defaultBackend.extraArgs }} {{- /* Accept keys without values or with false as value */}} {{- if eq ($value | quote | len) 2 }} - --{{ $key }} {{- else }} - --{{ $key }}={{ $value }} {{- end }} {{- end }} {{- end }}YAML itself isn’t inherently problematic, and with modern IDE support, schema validation, and linting tools, it can be a clear and effective configuration format. The issues arise when YAML is combined with Go templating, as seen in Helm. While each component is reasonable on its own, their combination creates complexity. Go templates in YAML introduce fragile constructs, where whitespace sensitivity and imperative logic make configurations difficult to read, maintain, and test. This blending of logic and data undermines transparency and predictability, which are crucial in infrastructure management.

Helm's dependency management also adds unnecessary complexity. Dependencies are fetched into a charts/ directory, but version pinning and overrides often become brittle. Instead of clean component reuse, Helm encourages nested charts with their own values.yaml, which complicates customization and requires understanding multiple charts to override a single value. In practice, Helm’s dependency management can feel like nesting shell scripts inside other shell scripts.

KustomzieKustomize offers a declarative approach to managing Kubernetes configurations, but its structure often blurs the line between declarative and imperative. Kustomize applies transformations to a base set of Kubernetes manifests, where users define overlays and patches that appear declarative, but are actually order-dependent and procedural.

It supports various patching mechanisms, which require a deep understanding of Kubernetes objects and can lead to verbose, hard-to-maintain configurations. Features like generators pulling values from files or environment variables introduce dynamic behavior, further complicating the system. When built-in functionality falls short, users can use KRM (Kubernetes Resource Model) functions for transformations, but these are still defined in structured data, leading to a complex layering of data-as-code that lacks clarity.

While Kustomize avoids explicit templating, it introduces a level of orchestration that can be just as opaque and requires extensive knowledge to ensure predictable results.

In many Kubernetes environments, the configuration pipeline has become a complex chain of tools and abstractions. What the Kubernetes API receives — plain YAML or JSON — is often the result of multiple intermediate stages, such as Helm charts, Helmsman, or GitOps systems like Flux or Argo CD. As these layers accumulate, they can obscure the final output, preventing engineers from easily accessing the fully rendered manifests.

This lack of visibility makes it hard to verify what will actually be deployed, leading to operational challenges and a loss of confidence in the system. When teams cannot inspect or reproduce the deployment artifacts, it becomes difficult to review changes or troubleshoot issues, ultimately turning a once-transparent process into a black box that complicates debugging and undermines reliability.

Other approachesApple’s pkl (short for "Pickle") is a configuration language designed to replace YAML, offering greater flexibility and dynamic capabilities. It includes features like classes, built-in packages, methods, and bindings for multiple languages, as well as IDE integrations, making it resemble a full programming language rather than a simple configuration format.

However, the complexity of pkl may be unnecessary. Its extensive documentation and wide range of features may be overkill for most use cases, especially when YAML itself can handle configuration management needs. If the issue is YAML’s repetitiveness, a simpler approach, such as sandboxed JavaScript, could generate clean YAML without the overhead of a new language.

KISSKubernetes configuration management is ultimately a string manipulation problem. Makefiles, combined with standard Unix tools, are ideal for solving this. Make provides a declarative way to define steps to generate Kubernetes manifests, with each step clearly outlined and only re-run when necessary. Tools like sed, awk, cat, and jq excel at text transformation and complement Make’s simplicity, allowing for quick manipulation of YAML or JSON files.

This approach is transparent — you can see exactly what each command does and debug easily when needed. Unlike more complex tools, which hide the underlying processes, Makefiles and Unix tools provide full control, making the configuration management process straightforward and maintainable.

https://github.com/avkcode/vault

HashiCorp Vault is a tool for managing secrets and sensitive data, offering features like encryption, access control, and secure storage. It was used as an example of critical infrastructure deployed on Kubernetes without Helm, emphasizing manual, customizable management of resources.

This Makefile automates Kubernetes deployment, Docker image builds, and Git operations. It handles environment-specific configurations, validates Kubernetes manifests, and manages Vault resources like Docker image builds, retrieving unseal/root keys, and interacting with Vault pods. It also facilitates Git operations such as creating tags, pushing releases, and generating archives or bundles. The file includes tasks for managing Kubernetes resources like services, statefulsets, and secrets, switching namespaces, and cleaning up generated files. Additionally, it supports interactive deployment sessions, variable listing, and manifest validation both client and server-side.

The rbac variable in the Makefile is defined using the define keyword to store a multi-line YAML configuration for Kubernetes RBAC, including a ServiceAccount and ClusterRoleBinding. The ${VAULT_NAMESPACE} placeholder is used for dynamic substitution. The variable is exported with export rbac and then included in the manifests variable. This allows the YAML to be templated with environment variables and reused in targets like template and apply for Kubernetes deployment.

define rbac --- apiVersion: v1 kind: ServiceAccount metadata: name: vault-service-account namespace: ${VAULT_NAMESPACE} --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: vault-server-binding roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:auth-delegator subjects: - kind: ServiceAccount name: vault-service-account namespace: ${VAULT_NAMESPACE} endef export rbac manifests += $${rbac} manifests += $${configmap} manifests += $${services} manifests += $${statefulset} .PHONY: template apply delete template: @$(foreach manifest,$(manifests),echo "$(manifest)";) apply: create-release @$(foreach manifest,$(manifests),echo "$(manifest)" | kubectl apply -f - ;) delete: remove-release @$(foreach manifest,$(manifests),echo "$(manifest)" | kubectl delete -f - ;) validate-%: @echo "$$$*" | yq eval -P '.' - print-%: @echo "$$$*"The manifests array holds the multi-line YAML templates for Kubernetes resources, including RBAC, ConfigMap, Services, and StatefulSet. In the apply target, each manifest is processed and passed to kubectl apply to deploy them to the Kubernetes cluster. This approach uses foreach to iterate over the manifests array, applying each resource one by one. Similarly, the delete target uses kubectl delete to remove the resources defined in the manifests.

Using Make with tools like curl is a super flexible way to handle Kubernetes deployments, and it can easily replace some of the things Helm does. For example, instead of using Helm charts to manage releases, we’re just using kubectl in a Makefile to create and delete Kubernetes secrets. By running simple shell commands and using kubectl, we can manage things like versioning and configuration directly in Kubernetes without all the complexity of Helm. This approach gives us more control and is lighter weight, which is perfect for projects where you want simplicity and flexibility without the overhead of managing full Helm charts.

.PHONY: create-release create-release: @echo "Creating Kubernetes secret with VERSION set to Git commit SHA..." @SECRET_NAME="app-version-secret"; \ JSON_DATA="{\"VERSION\":\"$(GIT_COMMIT)\"}"; \ kubectl create secret generic $$SECRET_NAME \ --from-literal=version.json="$$JSON_DATA" \ --dry-run=client -o yaml | kubectl apply -f - @echo "Secret created successfully: app-version-secret" .PHONY: remove-release remove-release: @echo "Deleting Kubernetes secret: app-version-secret..." @SECRET_NAME="app-version-secret"; \ kubectl delete secret $$SECRET_NAME 2>/dev/null || true @echo "Secret deleted successfully: app-version-secret"Since the manifests array contains all the Kubernetes resource definitions, we can easily dump them into both YAML and JSON formats. The dump-manifests target runs make template to generate the YAML output and make convert-to-json to convert the same output into JSON. By redirecting the output to manifest.yaml and manifest.json, you're able to keep both versions of the resources for further use. It’s a simple and efficient way to generate multiple formats from the same set of manifests.

.PHONY: dump-manifests dump-manifests: template convert-to-json @echo "Dumping manifests to manifest.yaml and manifest.json..." @make template > manifest.yaml @make convert-to-json > manifest.json @echo "Manifests successfully dumped to manifest.yaml and manifest.json."With the validate-% target, you can easily validate any specific manifest by piping it through yq to check the structure or content in a readable format. This leverages external tools like yq to validate and process YAML directly within the Makefile, without needing to write complex scripts. Similarly, the print-% target allows you to quickly print the value of any Makefile variable, giving you an easy way to inspect variables or outputs. By using external tools like yq, you can enhance the flexibility of your Makefile, making it easy to validate, process, and manipulate manifests directly.

# Validates a specific manifest using `yq`. validate-%: @echo "$$$*" | yq eval -P '.' - # Prints the value of a specific variable. print-%: @echo "$$$*"With Makefile and simple Bash scripting, you can easily implement auxiliary functions like getting Vault keys. In this case, the get-vault-keys target lists available Vault pods, prompts for the pod name, and retrieves the Vault unseal key and root token by executing commands on the chosen pod. The approach uses basic tools like kubectl, jq, and Bash, making it much more flexible than dealing with Helm’s syntax or other complex tools. It simplifies the process and gives you full control over your deployment logic without having to rely on heavyweight tools or charts.

.PHONY: get-vault-keys get-vault-keys: @echo "Available Vault pods:" @PODS=$$(kubectl get pods -l app.kubernetes.io/name=vault -o jsonpath='{.items[*].metadata.name}'); \ echo "$$PODS"; \ read -p "Enter the Vault pod name (e.g., vault-0): " POD_NAME; \ if echo "$$PODS" | grep -qw "$$POD_NAME"; then \ kubectl exec $$POD_NAME -- vault operator init -key-shares=1 -key-threshold=1 -format=json > keys.json; \ VAULT_UNSEAL_KEY=$$(cat keys_$$POD_NAME.json | jq -r ".unseal_keys_b64[]"); \ echo "Unseal Key: $$VAULT_UNSEAL_KEY"; \ VAULT_ROOT_KEY=$$(cat keys.json | jq -r ".root_token"); \ echo "Root Token: $$VAULT_ROOT_KEY"; \ else \ echo "Error: Pod '$$POD_NAME' not found."; \ fi ConstrainsWhen managing complex workflows, especially in DevOps or Kubernetes environments, constraints play a vital role in ensuring consistency, preventing errors, and maintaining control over the build process. In Makefiles, constraints can be implemented to validate inputs, restrict environment configurations, and enforce best practices. Let’s explore how this works with a practical example.

What Are Constraints in Makefiles?

Constraints are rules or conditions that ensure only valid inputs or configurations are accepted during execution. For instance, you might want to limit the environments (dev, sit, uat, prod) where your application can be deployed, or validate parameter files before proceeding with Kubernetes manifest generation.

Example: Restricting Environment Configurations

Consider the following snippet from the provided Makefile:

ENV ?= dev ALLOWED_ENVS := global dev sit uat prod ifeq ($(filter $(ENV),$(ALLOWED_ENVS)),) $(error Invalid ENV value '$(ENV)'. Allowed values are: $(ALLOWED_ENVS)) endifHere’s how this works:

Default Value : The ENV variable defaults to dev if not explicitly set. Allowed Values : The ALLOWEDENVS variable defines a list of valid environments. Validation Check : The ifeq block checks if the provided ENV value exists in the ALLOWEDENVS list. If not, it throws an error and stops execution. For example:

Running make apply ENV=test will fail because test is not in the allowed list. Running make apply ENV=prod will proceed as prod is valid.

This snippet validates that the MEMORYREQUEST and MEMORYLIMIT values are within the acceptable range of 128Mi to 4096Mi. It extracts the numeric value, converts units (e.g., Gi to Mi), and checks if the values fall within the specified bounds. If not, it raises an error to prevent invalid configurations from being applied.

# Validate memory ranges (e.g., 128Mi <= MEMORY_REQUEST <= 4096Mi) MEMORY_REQUEST_VALUE := $(subst Mi,,$(subst Gi,,$(MEMORY_REQUEST))) MEMORY_REQUEST_UNIT := $(suffix $(MEMORY_REQUEST)) ifeq ($(MEMORY_REQUEST_UNIT),Gi) MEMORY_REQUEST_VALUE := $(shell echo $$(($(MEMORY_REQUEST_VALUE) * 1024))) endif ifeq ($(shell [ $(MEMORY_REQUEST_VALUE) -ge 128 ] && [ $(MEMORY_REQUEST_VALUE) -le 4096 ] && echo true),) $(error Invalid MEMORY_REQUEST value '$(MEMORY_REQUEST)'. It must be between 128Mi and 4096Mi.) endif MEMORY_LIMIT_VALUE := $(subst Mi,,$(subst Gi,,$(MEMORY_LIMIT))) MEMORY_LIMIT_UNIT := $(suffix $(MEMORY_LIMIT)) ifeq ($(MEMORY_LIMIT_UNIT),Gi) MEMORY_LIMIT_VALUE := $(shell echo $$(($(MEMORY_LIMIT_VALUE) * 1024))) endif ifeq ($(shell [ $(MEMORY_LIMIT_VALUE) -ge 128 ] && [ $(MEMORY_LIMIT_VALUE) -le 4096 ] && echo true),) $(error Invalid MEMORY_LIMIT value '$(MEMORY_LIMIT)'. It must be between 128Mi and 4096Mi.) endif diffAdvanced diff capabilities to compare Kubernetes manifests across different states: live cluster vs generated, previous vs current, git revisions, and environments.

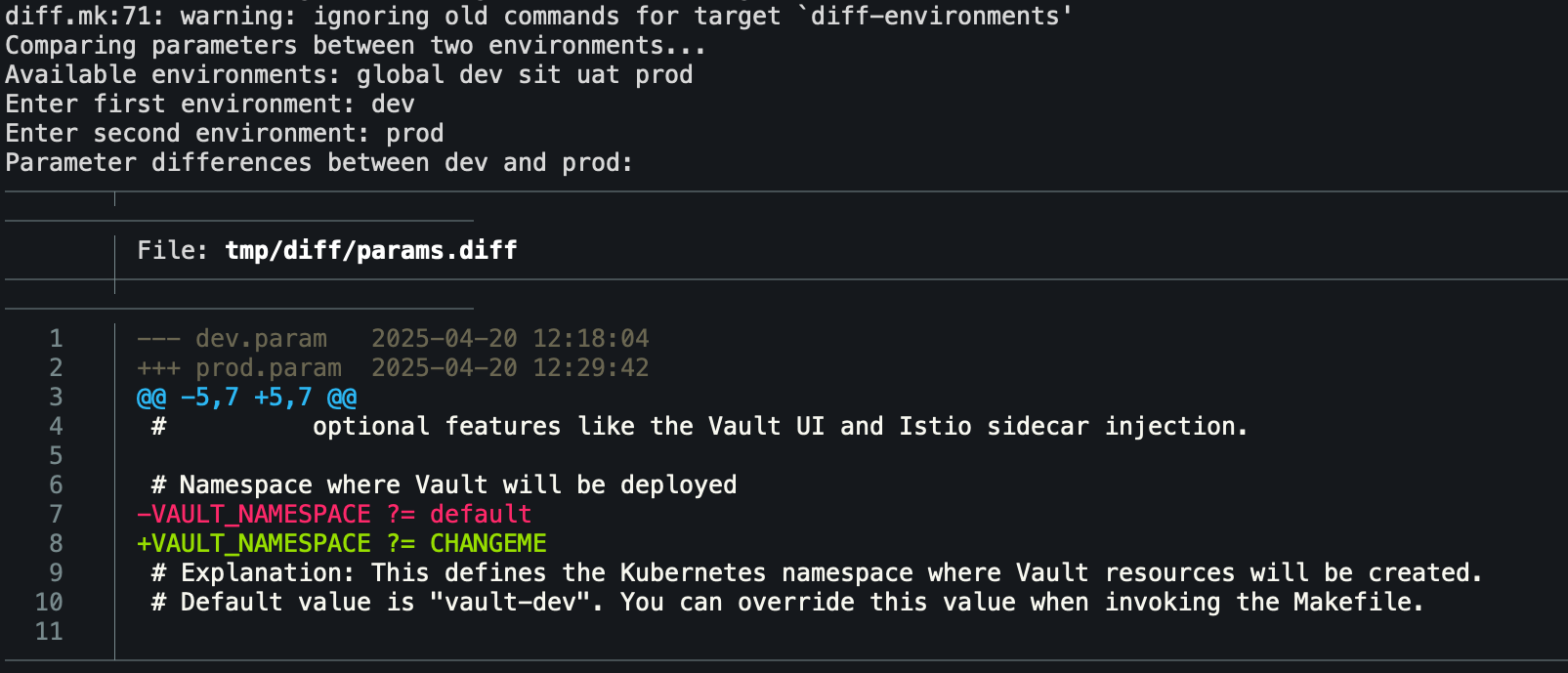

diff - Interactive diff menu diff-live - Compare live cluster vs generated manifests diff-previous - Compare previous vs current manifests diff-revisions - Compare between git revisions diff-environments - Compare manifests across environments diff-params - Compare parameter files between environments

LabelsUsing Make for Kubernetes deployments offers greater flexibility compared to Helm, as it allows for fully customizable workflows without enforcing a rigid structure. While Helm is designed specifically for Kubernetes and provides a standardized templating system, it can feel restrictive for non-standard or highly dynamic use cases. With Make, you can dynamically generate lists of labels and annotations programmatically, avoiding the need to manually define them in manifests. This approach ensures consistency and reduces repetitive work by leveraging variables, environment data, and tools like Git metadata. Additionally, Make integrates seamlessly with external scripts and tools, enabling more complex logic and automation that Helm’s opinionated framework might not easily support.

Example:

# Extract Git metadata GIT_BRANCH := $(shell git rev-parse --abbrev-ref HEAD) GIT_COMMIT := $(shell git rev-parse --short HEAD) GIT_REPO_URL := $(shell git config --get remote.origin.url || echo "local-repo") ENV_LABEL := app.environment:$(ENV) GIT_BRANCH_LABEL := app.git/branch:$(GIT_BRANCH) GIT_COMMIT_LABEL := app.git/commit:$(GIT_COMMIT) GIT_REPO_LABEL := app.git/repo:$(GIT_REPO_URL) TEAM_LABEL := app.team:devops OWNER_LABEL := app.owner:engineering DEPLOYMENT_LABEL := app.deployment:nginx-controller VERSION_LABEL := app.version:v1.23.0 BUILD_TIMESTAMP_LABEL := app.build-timestamp:$(shell date -u +"%Y-%m-%dT%H:%M:%SZ") RELEASE_LABEL := app.release:stable REGION_LABEL := app.region:us-west-2 ZONE_LABEL := app.zone:a CLUSTER_LABEL := app.cluster:eks-prod SERVICE_TYPE_LABEL := app.service-type:LoadBalancer INSTANCE_LABEL := app.instance:nginx-controller-instance # Combine all labels into a single list LABELS := \ $(ENV_LABEL) \ $(GIT_BRANCH_LABEL) \ $(GIT_COMMIT_LABEL) \ $(GIT_REPO_LABEL) \ $(TEAM_LABEL) \ $(OWNER_LABEL) \ $(DEPLOYMENT_LABEL) \ $(VERSION_LABEL) \ $(BUILD_TIMESTAMP_LABEL) \ $(RELEASE_LABEL) \ $(REGION_LABEL) \ $(ZONE_LABEL) \ $(CLUSTER_LABEL) \ $(SERVICE_TYPE_LABEL) \ $(INSTANCE_LABEL) \ $(DESCRIPTION_LABEL) define generate_labels $(shell printf " %s\n" $(patsubst %:,%: ,$(LABELS))) endef # Example target to print the labels in YAML format print-labels: @echo "metadata:" @echo " labels:" @$(foreach label,$(LABELS),echo " $(shell echo $(label) | sed 's/:/=/; s/=/:\ /')";)This will output:

make -f test.mk metadata: labels: app.environment: app.git/branch: main app.git/commit: 0158245 app.git/repo: https://github.com/avkcode/vault.git app.team: devops app.owner: engineering app.deployment: nginx-controller app.version: v1.23.0 app.build-timestamp: 2025-04-22T12:38:58Z app.release: stable app.region: us-west-2 app.zone: a app.cluster: eks-prod app.service-type: LoadBalancer app.instance: nginx-controller-instanceUsing with manifests:

efine deployment --- apiVersion: apps/v1 kind: Deployment metadata: labels: $(call generate_labels) name: release-name-ingress-nginx-controller namespace: default spec: selector: matchLabels: app.kubernetes.io/name: ingress-nginx app.kubernetes.io/instance: release-name app.kubernetes.io/component: controller replicas: 1 revisionHistoryLimit: 10 minReadySeconds: 0 template: metadata: labels: $(call generate_labels) spec: dnsPolicy: ClusterFirst containers: - name: controller image: registry.k8s.io/ingress-nginx/controller:v1.12.1@sha256:d2fbc4ec70d8aa2050dd91a91506e998765e86c96f32cffb56c503c9c34eed5b imagePullPolicy: IfNotPresent lifecycle: preStop: exec: command: - /wait-shutdown args: - /nginx-ingress-controller - --publish-service=$(POD_NAMESPACE)/release-name-ingress-nginx-controller - --election-id=release-name-ingress-nginx-leader - --controller-class=k8s.io/ingress-nginx - --ingress-class=nginx - --configmap=$(POD_NAMESPACE)/release-name-ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key securityContext: runAsNonRoot: true runAsUser: 101 runAsGroup: 82 allowPrivilegeEscalation: false seccompProfile: type: RuntimeDefault capabilities: drop: - ALL add: - NET_BIND_SERVICE readOnlyRootFilesystem: false env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: LD_PRELOAD value: /usr/local/lib/libmimalloc.so livenessProbe: failureThreshold: 5 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 ports: - name: http containerPort: 80 protocol: TCP - name: https containerPort: 443 protocol: TCP - name: webhook containerPort: 8443 protocol: TCP volumeMounts: - name: webhook-cert mountPath: /usr/local/certificates/ readOnly: true resources: requests: cpu: 100m memory: 90Mi nodeSelector: kubernetes.io/os: linux serviceAccountName: release-name-ingress-nginx automountServiceAccountToken: true terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: release-name-ingress-nginx-admission endef export deploymentWith general-purpose coding, dynamically generating labels based on parameters like target environment (DEV/PROD/STAGE) is straightforward—just define rules and inject values at runtime. Tools aren't needed for such simple string manipulation.

make validate-configmap --- apiVersion: v1 kind: ConfigMap metadata: labels: app.environment:dev app.git/branch:main app.git/commit:af4eae9 app.git/repo:https://github.com/avkcode/nginx.git app.team:devops app.critical:false app.sla:best-effort name: release-name-ingress-nginx-controller namespace: defaultBy default DEV dev app.team:devops app.critical:false app.sla:best-effort

Adds app.team:devops app.critical:true app.sla:tier-1

ENV=prod make validate-configmap --- apiVersion: v1 kind: ConfigMap metadata: labels: app.environment:prod app.git/branch:main app.git/commit:af4eae9 app.git/repo:https://github.com/avkcode/nginx.git app.team:devops app.critical:true app.sla:tier-1 name: release-name-ingress-nginx-controller namespace: defaultCode:

# Validate and set environment VALID_ENVS := dev sit uat prod ENV ?= dev ifeq ($(filter $(ENV),$(VALID_ENVS)),) $(error Invalid ENV. Valid values are: $(VALID_ENVS)) endif # Environment-specific settings ifeq ($(ENV),prod) APP_PREFIX := prod EXTRA_LABELS := app.critical:true app.sla:tier-1 else ifeq ($(ENV),uat) APP_PREFIX := uat EXTRA_LABELS := app.critical:false app.sla:tier-2 else ifeq ($(ENV),sit) APP_PREFIX := sit EXTRA_LABELS := app.critical:false app.sla:tier-3 else APP_PREFIX := dev EXTRA_LABELS := app.critical:false app.sla:best-effort endif # Extract Git metadata GIT_BRANCH := $(shell git rev-parse --abbrev-ref HEAD) GIT_COMMIT := $(shell git rev-parse --short HEAD) GIT_REPO := $(shell git config --get remote.origin.url) ENV_LABEL := app.environment:$(ENV) GIT_BRANCH_LABEL := app.git/branch:$(GIT_BRANCH) GIT_COMMIT_LABEL := app.git/commit:$(GIT_COMMIT) GIT_REPO_LABEL := app.git/repo:$(GIT_REPO) TEAM_LABEL := app.team:devops LABELS := \ $(ENV_LABEL) \ $(GIT_BRANCH_LABEL) \ $(GIT_COMMIT_LABEL) \ $(GIT_REPO_LABEL) \ $(TEAM_LABEL) \ $(EXTRA_LABELS) define generate_labels $(shell printf " %s\n" $(patsubst %:,%: ,$(LABELS))) endef HelmIf you’re absolutely required to distribute a Helm chart but don’t have one pre-made, no worries—it’s totally possible to generate one from the manifests produced by this Makefile. The genhelmchart.py script (referenced via include helm.mk) automates this process. It takes the Kubernetes manifests generated by the Makefile and packages them into a Helm chart. This way, you can still meet the requirement for a Helm chart while leveraging the existing templates and workflows in the Makefile.

Sometimes, the simplest way of using just Unix tools is the best way. By relying on basic utilities like kubectl, jq, yq, and Make, you can create powerful, customizable workflows without the need for heavyweight tools like Helm. These simple, straightforward scripts offer greater control and flexibility. Plus, with LLMs (large language models) like this one, generating and refining code has become inexpensive and easy, making automation accessible. However, when things go wrong, debugging complex tools like Helm can become exponentially more expensive in terms of time and effort. Using minimal tools lets you stay in control, reduce complexity, and make it easier to fix issues when they arise. Sometimes, less really is more.

- Home

- About Us

- Write For Us / Submit Content

- Advertising And Affiliates

- Feeds And Syndication

- Contact Us

- Login

- Privacy

All Rights Reserved. Copyright , Central Coast Communications, Inc.