and the distribution of digital products.

DM Television

OpenAI bets on AMD, plans custom AI chips by 2026

OpenAI, the company behind ChatGPT, is expanding its efforts to secure reliable and cost-effective computing power for its AI models. By developing custom silicon, OpenAI aims to reduce dependence on external suppliers like NVIDIA, whose GPUs dominate the AI chip market. According to Reuters, OpenAI has partnered with Broadcom and secured manufacturing capacity with Taiwan Semiconductor Manufacturing Company (TSMC), while incorporating AMD chips into its Microsoft Azure setup.

OpenAI to build custom AI chips with Broadcom and TSMC

OpenAI’s journey towards developing its own AI chips started with assembling a team of about 20 people, including top engineers who previously worked on Google’s Tensor Processing Units (TPUs). This in-house chip team, led by experienced engineers like Thomas Norrie and Richard Ho, is working closely with Broadcom to design and produce custom silicon that will focus on inference workloads. The chips are expected to be manufactured by TSMC, the world’s largest semiconductor foundry, starting in 2026.

The goal behind developing in-house silicon is twofold: to secure a stable supply of high-performance chips and to manage escalating costs associated with AI workloads. While demand for training chips is currently higher, industry experts anticipate that the need for inference chips will surpass training chips as more AI applications reach the deployment stage. Broadcom’s expertise in helping fine-tune chip designs for mass production and providing components that optimize data movement makes it an ideal partner for this ambitious project.

OpenAI had previously considered building its own chip foundries but ultimately decided to abandon those plans due to the immense costs and time required. Instead, OpenAI is focusing on designing custom chips while relying on TSMC for manufacturing.

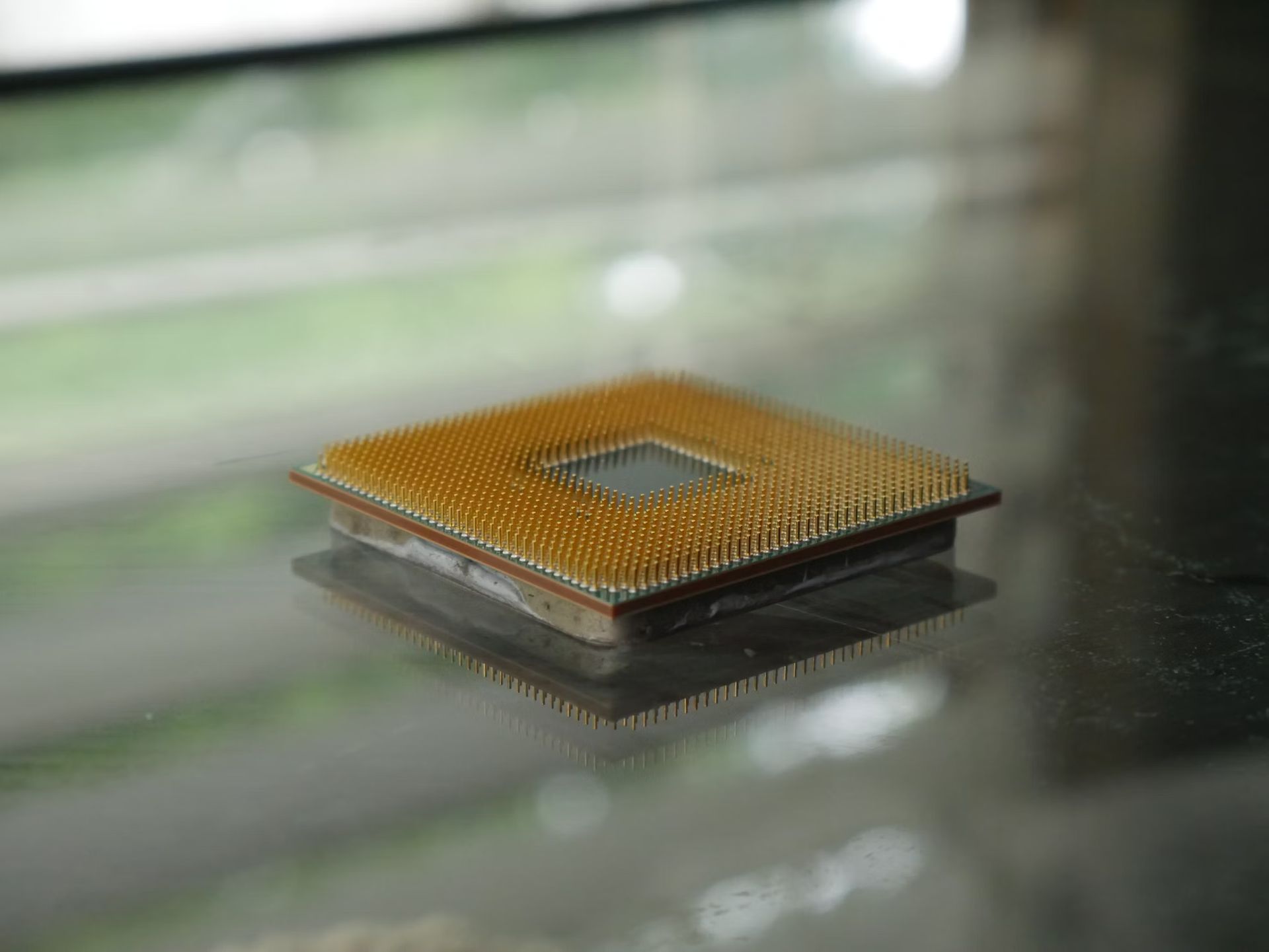

The goal behind developing in-house silicon is twofold: to secure a stable supply of high-performance chips (Image credit)

Incorporating AMD chips for diversification

Alongside its partnership with Broadcom, OpenAI is also incorporating AMD’s new MI300X chips into its Microsoft Azure setup. AMD introduced these chips last year as part of its data center expansion strategy, aiming to capture some of the market share currently held by NVIDIA. The inclusion of AMD chips will allow OpenAI to diversify its chip supply, reducing its dependence on a single supplier and helping to manage costs more effectively.

AMD’s MI300X chips are part of its push to compete with NVIDIA, which currently holds over 80% of the market share in AI hardware. The MI300X chips are designed to support AI workloads, particularly in inference and model training. By adding AMD chips into its infrastructure, OpenAI is hoping to alleviate some of the supply constraints it has faced with NVIDIA GPUs, which have been in high demand and subject to shortages.

This strategic move is also a response to rising compute costs, which have become a major challenge for OpenAI. The company has been dealing with high expenses for hardware, electricity, and cloud services, leading to projected losses of $5 billion this year. Reducing its reliance on a single supplier like NVIDIA, which has been increasing its prices, could help OpenAI better manage these costs and continue developing its AI models without significant delays or interruptions.

The road ahead

Despite the ambitious plan to develop custom chips, there are significant challenges ahead for OpenAI. Building an in-house silicon solution takes time and money, and the first custom-designed chips are not expected to be in production until 2026. This timeline puts OpenAI behind some of its larger competitors like Google, Microsoft, and Amazon, who have already made substantial progress in developing their own custom AI hardware.

The partnership with Broadcom and TSMC represents an important step forward, but it also highlights the difficulties faced by companies trying to break into the chip market. Manufacturing high-performance AI chips requires substantial expertise, advanced production facilities, and considerable investment. TSMC, as the manufacturing partner, will play a key role in determining the success of this venture. The timeline for chip production could still change, depending on factors like design complexity and manufacturing capacity.

Another challenge lies in talent acquisition. OpenAI is cautious about poaching talent from NVIDIA, as it wants to maintain a good relationship with the chipmaker, especially since it still relies heavily on NVIDIA for its current-generation AI models. NVIDIA’s Blackwell chips are expected to be crucial for upcoming AI projects, and maintaining a positive relationship is essential for OpenAI’s ongoing access to these cutting-edge GPUs.

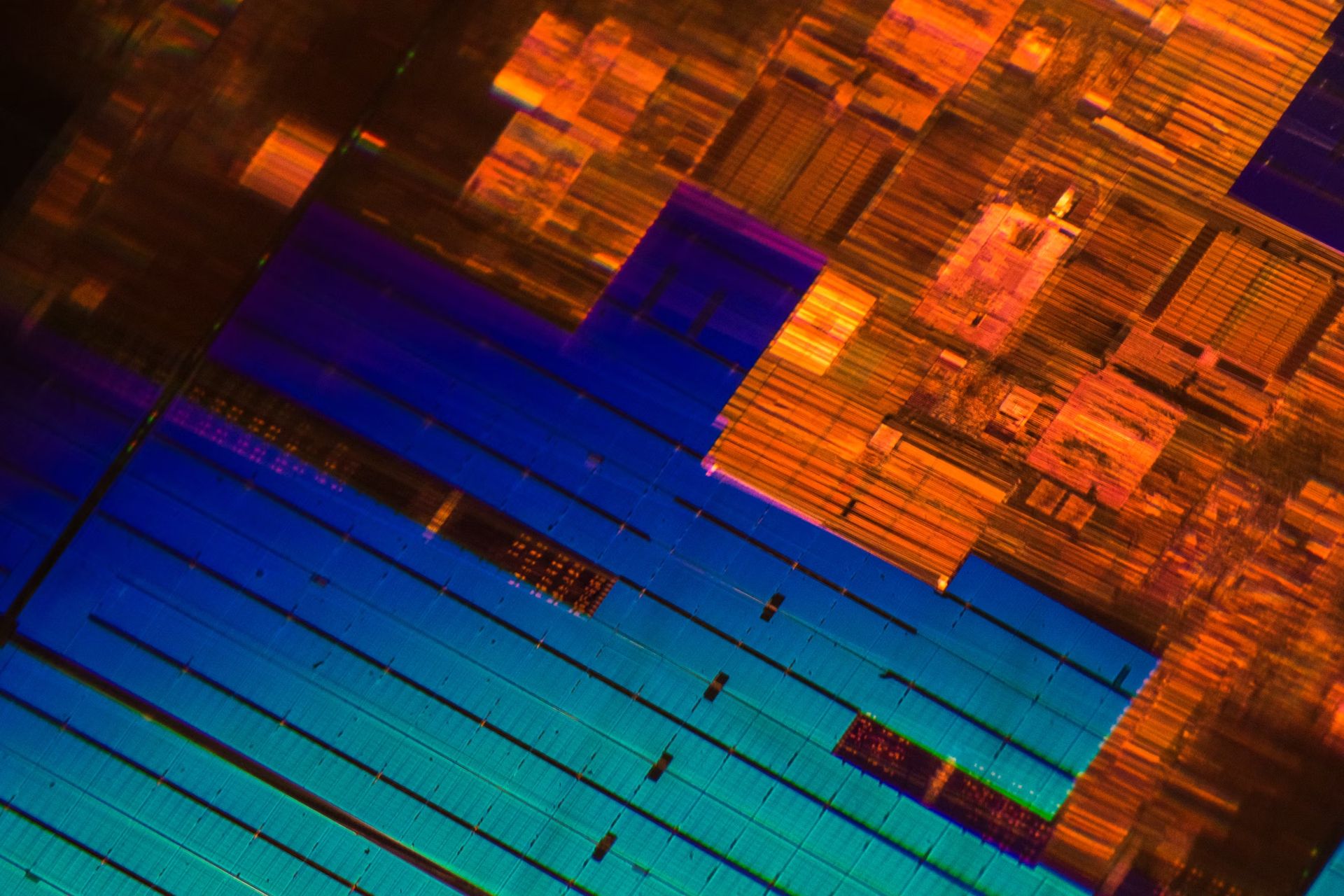

The main driver behind OpenAI’s custom chip initiative is cost (Image credit)

Why OpenAI needs custom silicon

The main driver behind OpenAI’s custom chip initiative is cost. Training and deploying large AI models like GPT-4 require massive computing power, which translates to high infrastructure expenses. OpenAI’s annual compute costs are projected to be one of its largest expenses, with the company expecting a $5 billion loss this year despite generating $3.7 billion in revenue. By developing its own chips, OpenAI hopes to bring these costs under control, giving it a competitive edge in the crowded AI market.
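To put those two figures side by side, here is a rough back-of-the-envelope sketch. It assumes the projected loss is simply total spending minus revenue, which is a simplification and not a breakdown reported in the article. The function names are illustrative only, and amounts are in millions of US dollars.

```python
# Back-of-the-envelope arithmetic on the figures quoted above.
# Assumption (not from the article): loss = total spending - revenue.

def implied_spending_musd(revenue_musd: int, loss_musd: int) -> int:
    """Total spending implied by a given revenue and loss, in $ millions."""
    return revenue_musd + loss_musd


def spend_per_revenue_dollar(revenue_musd: int, loss_musd: int) -> float:
    """Dollars spent for every dollar of revenue, rounded to two decimals."""
    spending = implied_spending_musd(revenue_musd, loss_musd)
    return round(spending / revenue_musd, 2)


REVENUE_MUSD = 3_700  # $3.7 billion in revenue, as quoted
LOSS_MUSD = 5_000     # $5 billion projected loss, as quoted

if __name__ == "__main__":
    print(implied_spending_musd(REVENUE_MUSD, LOSS_MUSD))
    print(spend_per_revenue_dollar(REVENUE_MUSD, LOSS_MUSD))
```

Under that assumption, the quoted numbers imply roughly $8.7 billion in total spending, or a little over two dollars spent for each dollar earned.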

Custom silicon also offers performance benefits. By tailoring chips specifically for the needs of AI inference, OpenAI can optimize performance, improve efficiency, and reduce latency. This is particularly important for delivering high-quality, real-time responses in products like ChatGPT. While NVIDIA’s GPUs are highly capable, custom-designed hardware can provide more targeted optimization, potentially leading to significant gains in performance and cost efficiency.

The approach of blending internal and external chip solutions provides OpenAI with greater flexibility in how it scales its infrastructure. By working with Broadcom on custom designs while also incorporating AMD and NVIDIA GPUs, OpenAI is positioning itself to better navigate the challenges of high demand and supply chain limitations. This diversified approach will help the company adapt to changing market conditions and ensure that it has the computing resources necessary to continue pushing the boundaries of AI.

Featured image credit: Andrew Neel/Unsplash

All Rights Reserved. Copyright , Central Coast Communications, Inc.