
DM Television

New Prompting Technique Claims to Help AI Think Like Humans


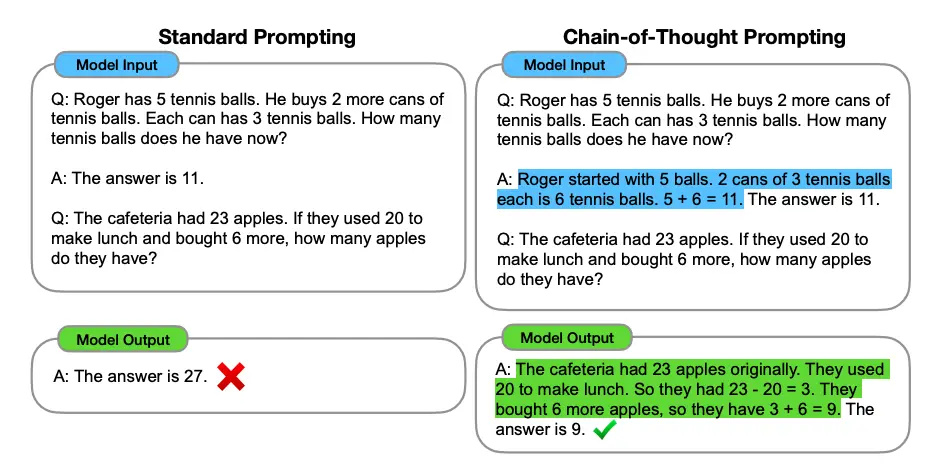

Ever wondered how to make AI think more like a human? While Large Language Models (LLMs) excel at predicting the next word in a sequence, they often stumble on problems that require methodical, multi-step thinking. Enter Chain-of-Thought prompting, a technique that is changing how we interact with AI.

Breaking Down Chain-of-Thought Prompting

Imagine teaching a child to solve a complex puzzle. Instead of showing them the final picture, you guide them through each step. That's essentially what Chain-of-Thought (CoT) prompting does for LLMs. By providing examples that showcase step-by-step reasoning, we help these models arrive at accurate solutions through logical progression.


The Magic Formula: Why It Works

Traditional prompting methods often produce hit-or-miss results on multi-step problems. CoT prompting breaks a complex problem into digestible chunks, allowing the model to tackle each component systematically. The approach has proven so successful that cutting-edge models, such as OpenAI's latest offerings, now build step-by-step reasoning into their core functionality.


Real-World Application: Solving Mathematical Puzzles

Let's dive into a practical example. Imagine you're helping an AI solve this equation:

5x - 4 = 16

Here's how CoT prompting guides the model:

- First step: Add 4 to both sides.

  - 5x - 4 + 4 = 16 + 4
  - 5x = 20

- Second step: Divide both sides by 5.

  - 5x/5 = 20/5
  - x = 4
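The two steps above can be mirrored in a few lines of Python: a tiny solver that records each step as it goes, much like a CoT trace. This is a minimal sketch assuming an equation of the form ax + b = c; `solve_linear` is a hypothetical helper for illustration, not part of any library.

```python
from fractions import Fraction


def solve_linear(a, b, c):
    """Solve a*x + b = c, returning (x, steps) with one entry per step."""
    if a == 0:
        raise ValueError("coefficient a must be non-zero")
    # Fractions keep the arithmetic exact (no floating-point rounding).
    a, b, c = Fraction(a), Fraction(b), Fraction(c)
    steps = []
    # Step 1: move the constant term to the right-hand side.
    rhs = c - b
    if b < 0:
        steps.append(f"Add {-b} to both sides: {a}x = {rhs}")
    else:
        steps.append(f"Subtract {b} from both sides: {a}x = {rhs}")
    # Step 2: divide both sides by the coefficient of x.
    x = rhs / a
    steps.append(f"Divide both sides by {a}: x = {x}")
    # Sanity check: substitute the result back into the original equation.
    assert a * x + b == c
    return x, steps


x, steps = solve_linear(5, -4, 16)
for step in steps:
    print(step)
# Add 4 to both sides: 5x = 20
# Divide both sides by 5: x = 4
```

Checking the answer by substitution at the end is the code equivalent of "showing your work": each intermediate result is visible and verifiable.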


This structured approach makes each step easy to check and exposes the reasoning process, which is particularly valuable when dealing with complex mathematical operations.


Chain-of-Thought vs. Few-Shot Prompting: Understanding the Distinction

Both techniques might seem similar at first glance, but they serve different purposes:


Few-Shot Prompting: Provides examples showing input and output, like a multiple-choice answer key.

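Chain-of-Thought Prompting: Demonstrates the complete reasoning process, like showing all work in a math problem. In its original form (Wei et al., 2022), CoT is itself supplied few-shot; the difference is that each example also includes the intermediate steps.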
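To make the distinction concrete, here is a minimal Python sketch that renders the same store-pricing exemplars in both styles. The `Item` class and the `question`, `few_shot_example`, `cot_example`, and `build_prompt` helpers are hypothetical names for illustration; the sketch only builds prompt text and does not call any model.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str      # plural item name, e.g. "books"
    price: int     # unit price in dollars
    quantity: int  # how many are bought


def question(items):
    """Render the word problem; assumes exactly two items."""
    a, b = items
    return (f"If a store sells {a.name} for ${a.price} each and {b.name} for "
            f"${b.price} each, how much would {a.quantity} {a.name} and "
            f"{b.quantity} {b.name} cost?")


def few_shot_example(items):
    # Standard few-shot: the question and the final answer only.
    total = sum(i.price * i.quantity for i in items)
    return f"Problem: {question(items)}\nAnswer: ${total}"


def cot_example(items):
    # Chain-of-thought: the same example plus every intermediate step.
    lines = [f"Problem: {question(items)}", "Thinking:"]
    subtotals = []
    for n, i in enumerate(items, start=1):
        sub = i.price * i.quantity
        subtotals.append(sub)
        lines.append(f"{n}. Calculate cost of {i.name}: "
                     f"{i.quantity} {i.name} × ${i.price} = ${sub}")
    total = sum(subtotals)
    parts = " + ".join(f"${s}" for s in subtotals)
    lines.append(f"{len(items) + 1}. Total cost = {parts} = ${total}")
    lines.append(f"Answer: ${total}")
    return "\n".join(lines)


# The two exemplars used in the article: (name, price, quantity).
EXAMPLES = [
    [Item("books", 5, 2), Item("markers", 3, 4)],
    [Item("notebooks", 4, 3), Item("pens", 2, 5)],
]


def build_prompt(new_items, chain_of_thought):
    render = cot_example if chain_of_thought else few_shot_example
    shots = "\n\n".join(render(ex) for ex in EXAMPLES)
    # Leave the new problem open so the model continues the pattern.
    tail = "Thinking:" if chain_of_thought else "Answer:"
    return f"{shots}\n\nProblem: {question(new_items)}\n{tail}"


print(build_prompt([Item("erasers", 1, 6), Item("rulers", 2, 3)],
                   chain_of_thought=True))
```

Generating the reasoning lines from the data, rather than typing them by hand, keeps the arithmetic in every exemplar consistent with its final answer.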


Few-Shot Prompting would look like this:

Example 1:

Problem: If a store sells books for $5 each and markers for $3 each, how much would 2 books and 4 markers cost?

Answer: $22

Example 2:

Problem: If a store sells notebooks for $4 each and pens for $2 each, how much would 3 notebooks and 5 pens cost?

Answer: $22

Chain-of-Thought Prompting would look like this:

Problem: If a store sells books for $5 each and markers for $3 each, how much would 2 books and 4 markers cost?

Thinking:

1. Calculate cost of books: 2 books × $5 = $10
2. Calculate cost of markers: 4 markers × $3 = $12
3. Total cost = Cost of books + Cost of markers = $10 + $12 = $22

Answer: $22

Problem: If a store sells notebooks for $4 each and pens for $2 each, how much would 3 notebooks and 5 pens cost?

Thinking:

1. Calculate cost of notebooks: 3 notebooks × $4 = $12
2. Calculate cost of pens: 5 pens × $2 = $10
3. Total cost = Cost of notebooks + Cost of pens = $12 + $10 = $22

Answer: $22

Zero-Shot Chain-of-Thought

Zero-shot CoT prompting is useful when you don't have example cases handy. Instead of worked examples, it relies on the simple yet powerful phrase "Let's think step by step." For example: "Let's think step by step: Explain quantum physics."
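Kojima et al. (2022) place the trigger phrase after the question in a "Q: ... A: ..." template and, in their setup, use a second prompt to pull the final answer out of the generated reasoning. Below is a minimal Python sketch of that two-stage prompt construction, assuming a plain-text Q/A format; `reasoning_prompt` and `answer_prompt` are hypothetical names, and the model call itself is left out.

```python
TRIGGER = "Let's think step by step."


def reasoning_prompt(question: str) -> str:
    # Stage 1: append the trigger phrase right after the question,
    # so the model starts its answer by reasoning.
    return f"Q: {question}\nA: {TRIGGER}"


def answer_prompt(question: str, reasoning: str,
                  answer_format: str = "Therefore, the answer is") -> str:
    # Stage 2: feed the model's own reasoning back in and ask it
    # to state the final answer in a predictable form.
    return f"{reasoning_prompt(question)} {reasoning}\n{answer_format}"


print(reasoning_prompt("How much do 2 books at $5 each cost?"))
# Q: How much do 2 books at $5 each cost?
# A: Let's think step by step.
```

Splitting reasoning and answer extraction into two prompts makes the final answer easier to parse, since the second completion is short and follows a fixed lead-in.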

When should you utilize CoT prompting?

CoT prompting shines when dealing with:

- Complicated arithmetic

- Multi-step logical reasoning

- Common-sense reasoning

- Symbolic manipulation


:::tip Anthropic's most recent published benchmarks for Claude show that 3-shot and 5-shot CoT prompting perform well on reasoning-heavy tasks such as GPQA and MMLU.

:::

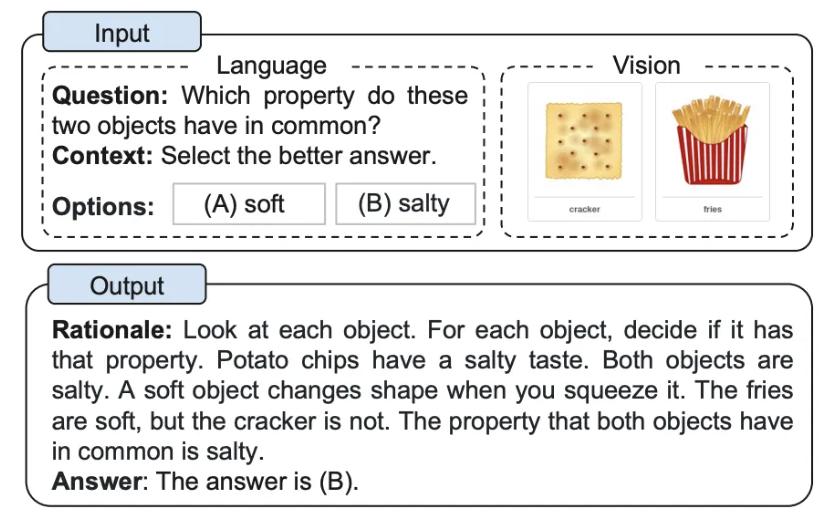

The Future: Multimodal Chain of Thought

The most recent advancement in AI reasoning combines visual and textual inputs within a single chain of reasoning (Zhang et al., 2023). Imagine an AI that diagnoses a bicycle problem, reads the repair manual, and guides you through each stage of the repair with visual and audio instructions. This multimodal approach points to the next stage of AI assistance.


While chain-of-thought prompting is powerful, it has limitations. The reasoning path the model produces isn't guaranteed to be free of errors, and results can vary from run to run.

Here are some tips for getting better results:

- Thoroughly test with different approaches.

- Consider combining CoT prompting with other prompting strategies.

- Use larger models, ideally with over 100 billion parameters; Wei et al. (2022) found that CoT's gains emerged mainly at around this scale.
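One way to test different approaches thoroughly is a small harness that scores several prompt styles on questions with known answers, repeating each query because CoT outputs can vary between runs. Below is a minimal Python sketch; `evaluate`, `extract_answer`, and `stub_model` are hypothetical names, the stub stands in for a real model call, and the parser assumes completions end with an `Answer: ...` line like the examples above.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

# A "model" is any function from prompt text to completion text.
Model = Callable[[str], str]

# Matches "Answer: 22" or "Answer: $22" and captures the number.
ANSWER_RE = re.compile(r"Answer:\s*\$?(-?\d+(?:\.\d+)?)")


def extract_answer(completion: str) -> Optional[str]:
    """Return the last 'Answer: ...' value in a completion, if any."""
    matches = ANSWER_RE.findall(completion)
    return matches[-1] if matches else None


def evaluate(model: Model,
             strategies: Dict[str, Callable[[str], str]],
             dataset: List[Tuple[str, str]],
             runs: int = 3) -> Dict[str, float]:
    """Accuracy per prompting strategy, counted over repeated runs.

    Repetition only matters when the model samples (temperature > 0),
    which is exactly when CoT outputs vary between runs.
    """
    scores = {}
    for name, build_prompt in strategies.items():
        correct = 0
        for question, expected in dataset:
            for _ in range(runs):
                if extract_answer(model(build_prompt(question))) == expected:
                    correct += 1
        scores[name] = correct / (len(dataset) * runs)
    return scores


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in: it only answers correctly when asked to reason.
    if "step by step" in prompt:
        return "Thinking: 6 + 6 = 12\nAnswer: $12"
    return "Answer: $11"


strategies = {
    "direct": lambda q: f"Problem: {q}\nAnswer:",
    "zero-shot CoT": lambda q: f"Problem: {q}\nLet's think step by step.",
}
data = [("How much do 2 pens at $6 each cost?", "12")]
print(evaluate(stub_model, strategies, data))
# {'direct': 0.0, 'zero-shot CoT': 1.0}
```

Swapping `stub_model` for a real client and growing `data` turns this into a quick way to compare prompting strategies before committing to one.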

As AI has progressed, Chain-of-Thought (CoT) prompting has proven to be an effective technique for eliciting stronger reasoning. Whether you are developing applications or simply want better results from your AI interactions, CoT prompting can greatly improve your chances of success.

The goal isn't only to get the right answer but also to understand the process used to reach it, and that is the beauty of Chain-of-Thought prompting.

Footnotes

- Wei, J., Wang, X., Schuurmans, D., Bosma, M., Ichter, B., Xia, F., Chi, E., Le, Q., & Zhou, D. (2022). Chain of Thought Prompting Elicits Reasoning in Large Language Models.

- Kojima, T., Gu, S. S., Reid, M., Matsuo, Y., & Iwasawa, Y. (2022). Large Language Models are Zero-Shot Reasoners.

- Zhang, Z., et al. (2023). Multimodal Chain-of-Thought Reasoning in Language Models. arXiv preprint arXiv:2302.00923.


All Rights Reserved. Copyright 2025, Central Coast Communications, Inc.