and the distribution of digital products.

The Missing Layer in AI Infrastructure: Aggregating Agentic Traffic

As AI agents evolve from passive responders to autonomous actors, they are increasingly chaining tool calls and initiating outbound API traffic at runtime — often without direct human oversight.

This type of behavior, referred to as “agentic traffic,” is on the rise as developers adopt orchestration frameworks and emerging standards like the Model Context Protocol (MCP), which allows agents to interface with tools and data dynamically.

The challenge? Most infrastructure is still built to monitor what comes in, not what goes out — leaving a critical blind spot in today’s AI systems.

The Risks of Moving Agents Into Production

Agentic traffic introduces a fundamentally different operational model. Agents are now programmatic consumers of APIs, interacting through tool usage, orchestration frameworks like LangChain, reasoning patterns like ReAct, and, increasingly, open standards like MCP and A2A (Agent2Agent). For example, ChatGPT plugins enable agents to autonomously book travel or retrieve financial data, actions that appear as outbound requests.

These interactions often bypass traditional infrastructure layers, exposing organizations to new risks. One is cost overruns. Agents can spiral into runaway call loops or overconsume LLM tokens — especially during retries or recursive tasks. Without token-level quotas or budget enforcement, even a single misbehaving agent can cause significant cost spikes. A Dell report outlines the need for runtime safeguards.
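
To make the idea of token-level quotas concrete, here is a minimal sketch of a per-agent budget check. The names `TokenBudget`, `BudgetExceeded`, and `charge` are hypothetical and not taken from any particular gateway product; the sketch assumes the caller already knows how many tokens a request will consume.

```python
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised when an agent would exceed its token allowance."""


@dataclass
class TokenBudget:
    # Hypothetical per-agent limit; a real deployment would load this from config.
    limit: int
    used: dict = field(default_factory=dict)

    def charge(self, agent_id: str, tokens: int) -> int:
        """Record usage for agent_id, or refuse if it would exceed the limit.

        Returns the remaining allowance for that agent.
        """
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        spent = self.used.get(agent_id, 0)
        if spent + tokens > self.limit:
            raise BudgetExceeded(
                f"{agent_id} would use {spent + tokens} of {self.limit} tokens"
            )
        self.used[agent_id] = spent + tokens
        return self.limit - self.used[agent_id]
```

Calling `charge` before each LLM request means a looping agent is stopped at the limit rather than discovered on the invoice. The budget here never resets; a production version would likely reset per day or per task.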

Another risk is a lack of observability. Autonomous agents often operate as black boxes. Without central logging or telemetry, it’s hard to trace actions or diagnose failures. Sawmills.ai highlights the growing gap between agent complexity and current monitoring tools.

There are also security risks to consider. Beyond specific incidents like the GitHub MCP exploit, agents are exposed to a broader range of vulnerabilities. The OWASP Top 10 for LLMs lists excessive agency, prompt injection, unbounded consumption, and output manipulation as persistent risks. LLMs struggle with enforcing safe outputs, and over-permissioned agents can leak data or trigger downstream failures. Mitigation requires runtime policies, scoped credentials, output validation, and behavioral controls — all enforceable via a governance layer.
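
As a rough illustration of runtime policies and output validation, the sketch below checks a tool call against an allowlist and screens a response before it is passed on. `AgentPolicy`, `authorize_tool_call`, `validate_output`, and the blocked marker string are all assumptions made for this example; real guardrails would be considerably more thorough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    # Hypothetical policy shape: which tools an agent may call,
    # and how large a response it may emit.
    allowed_tools: frozenset
    max_output_chars: int = 2000


def authorize_tool_call(policy: AgentPolicy, tool: str) -> bool:
    """Allow a call only if the tool is explicitly listed (deny by default)."""
    return tool in policy.allowed_tools


def validate_output(policy: AgentPolicy, text: str,
                    blocked_markers=("BEGIN PRIVATE KEY",)) -> bool:
    """Reject oversized output or output containing an obviously sensitive marker."""
    if len(text) > policy.max_output_chars:
        return False
    return not any(marker in text for marker in blocked_markers)
```

The deny-by-default allowlist is the point of the design: an agent that is never granted a tool cannot be talked into using it by an injected prompt.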

Why Existing Infrastructure Falls Short

Most organizations use API gateways to secure inbound traffic and service meshes to manage microservice communication. But neither was designed to govern outbound traffic initiated autonomously by AI agents.

When agents use standards like MCP to connect to tools or A2A to delegate tasks across agents, their requests are often regular HTTP calls, meaning they can bypass enforcement points entirely.

While these standards streamline agent development, they also introduce complexity: MCP allows shared access to tools across agents but lacks granular permissioning by default. A2A enables multi-agent orchestration but risks cascading failures if not monitored closely.

In this environment, there’s no aggregation point to enforce global policies, throttle usage, or provide unified visibility. That’s why existing layers fall short — they were never built to manage the runtime behavior of autonomous API consumers.

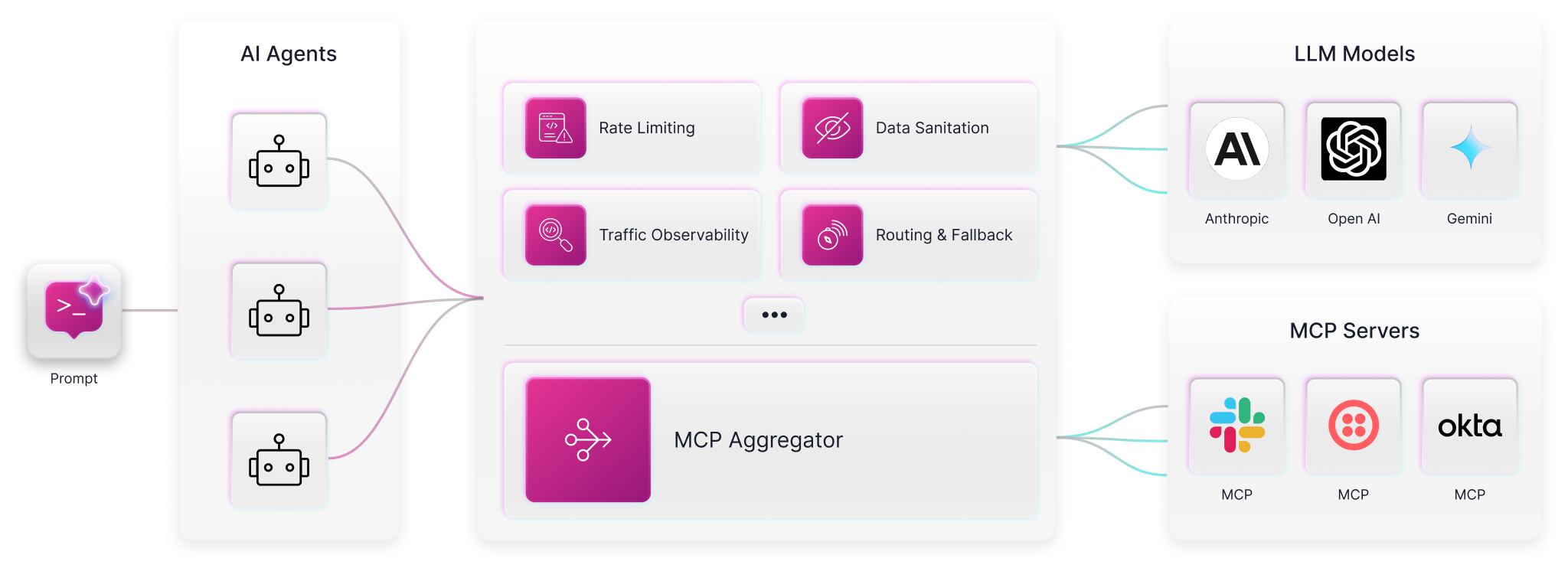

The Case for AI Gateways

To close this gap, engineering leaders are turning to a new architectural pattern: the AI gateway. Yet, AI gateways must evolve to reflect a broader mandate.

Originally created to manage LLM-specific traffic — such as token enforcement and model routing — AI gateways are now being called on to handle a much wider range of agentic behaviors. This includes not just traffic from LLMs but also outbound calls to tools via MCP and collaborative agent workflows via A2A.

As these standards mature and agents become more autonomous, the role of the AI gateway must expand from a narrow enforcement point to a full-fledged runtime policy layer — capable of mediating, securing, and shaping traffic across every surface where agents act. Gartner’s 2024 Hype Cycle underscores this shift, positioning AI gateways as central to taming the growing complexity of AI infrastructure.

What Does An AI Gateway Do?

An AI gateway doesn’t replace your API gateway — it complements it. While API gateways manage inbound traffic, AI gateways govern outbound, autonomous traffic, particularly traffic shaped by agent standards like MCP and A2A.

Key capabilities of an AI gateway include:

- Credential shielding: Hiding and managing secrets, rotating keys, and isolating tokens

- Rate limiting and quotas: Enforcing usage boundaries and preventing cost explosions

- Multi-provider routing: Load balancing across LLMs or optimizing for cost and latency

- Prompt-aware filtering: Scanning and blocking malicious requests or unsafe responses

- Audit logging: Maintaining full traceability of who called what, when, and why

- Data sanitation and AI guardrails: Cleaning inputs and outputs, enforcing usage constraints, and ensuring compliant agent behavior
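
Of the capabilities listed above, rate limiting is one of the easiest to prototype. The following is a minimal token-bucket sketch, assuming one bucket per agent or per upstream tool; the class name `TokenBucket` and its parameters are illustrative, and the `clock` argument exists only so the behavior can be tested without waiting.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend `cost` tokens if available, else return False."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A gateway would call `allow()` before forwarding each outbound request and reject or queue the request when it returns `False`.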

That said, even without a full-blown platform, you can begin mitigating agentic risk by actively logging outbound traffic; a simple reverse proxy is enough to start. Avoid granting agents broadly scoped credentials, and use short-lived access tokens to prevent persistent access.
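
Short of running a proxy, outbound logging can also start inside the agent's own HTTP layer. The sketch below wraps a hypothetical `send(method, url)` function that returns a status code, and emits one JSON record per call; the function name `audited`, the record fields, and the `sink` parameter are assumptions for illustration.

```python
import json
import time
from typing import Callable


def audited(send: Callable[[str, str], int], agent_id: str,
            sink: Callable[[str], None] = print) -> Callable[[str, str], int]:
    """Wrap an outbound `send(method, url) -> status` so every call is logged."""

    def wrapper(method: str, url: str) -> int:
        started = time.time()
        status = None  # stays None if the call raises
        try:
            status = send(method, url)
            return status
        finally:
            # Emit the record whether the call succeeded or failed.
            sink(json.dumps({
                "agent": agent_id,
                "method": method,
                "url": url,
                "status": status,
                "started_at": started,
                "duration_s": round(time.time() - started, 4),
            }))

    return wrapper
```

Pointing `sink` at a central log collector, rather than `print`, gives a first approximation of the unified visibility a gateway would provide.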

Consider applying limits, such as retry caps, timeouts, and budgets, to control runaway agents. Defining AI policies will help here. Codify what agents are allowed to do, especially in regulated or production environments.
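
A retry cap and an overall deadline can be combined in a small helper like the one sketched here. `call_with_limits` and `LimitExceeded` are hypothetical names, and the default values are placeholders rather than recommendations. Note that the deadline is only checked between attempts; it does not interrupt a call already in flight, so the underlying client should still set its own request timeout.

```python
import time


class LimitExceeded(Exception):
    """Raised when the retry cap or the overall deadline is reached."""


def call_with_limits(action, max_attempts: int = 3, deadline_s: float = 10.0,
                     backoff_s: float = 0.5, clock=time.monotonic, sleep=time.sleep):
    """Run `action()` with a hard cap on attempts and on total elapsed time."""
    start = clock()
    last_error = None
    for attempt in range(1, max_attempts + 1):
        if clock() - start > deadline_s:
            break  # out of time; do not start another attempt
        try:
            return action()
        except Exception as exc:  # a real client would catch narrower errors
            last_error = exc
            if attempt < max_attempts:
                sleep(backoff_s * attempt)  # linear backoff between attempts
    raise LimitExceeded(f"gave up after limits were reached: {last_error!r}") from last_error
```

Because the cap lives in one helper rather than in each agent's prompt or tool code, a runaway retry loop ends in a clear `LimitExceeded` error instead of silent spend.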

In terms of tooling, lightweight wrappers or proxies can help centralize control, whereas commercial gateways can provide more drop-in solutions.

Final Thoughts

The rise of autonomous agents is reshaping software architecture. But new power brings new complexity. Agentic traffic (initiated by AI, routed via MCP, and delegated via A2A) demands governance.

Just as companies adopted API gateways to manage external exposure and service meshes to tame microservices, they’ll now need AI gateways to manage the agentic era.

You wouldn’t expose your internal APIs to the internet without a gateway. Don’t expose your tools to your agents without one either. Start early. Start safe. Govern your agents like any other piece of infrastructure.


All Rights Reserved. Copyright , Central Coast Communications, Inc.