
How Ensemble Strategies Impact Adversarial Robustness in Multi-Exit Networks

:::info Authors:

(1) Seokil Ham, KAIST;

(2) Jungwuk Park, KAIST;

(3) Dong-Jun Han, Purdue University;

(4) Jaekyun Moon, KAIST.

:::

Table of Links

3. Proposed NEO-KD Algorithm and 3.1 Problem Setup: Adversarial Training in Multi-Exit Networks

4. Experiments and 4.1 Experimental Setup

4.2. Main Experimental Results

4.3. Ablation Studies and Discussions

5. Conclusion, Acknowledgement and References

B. Clean Test Accuracy and C. Adversarial Training via Average Attack

E. Discussions on Performance Degradation at Later Exits

F. Comparison with Recent Defense Methods for Single-Exit Networks

G. Comparison with SKD and ARD and H. Implementations of Stronger Attacker Algorithms

4.3 Ablation Studies and Discussions

Effect of each component of NEO-KD. In Table 6, we examine the effect of each individual component, NKD and EOKD. Combining NKD and EOKD boosts performance beyond the sum of their individual gains. Because the two components play different roles, their combination enables multi-exit networks to achieve state-of-the-art performance under adversarial attacks.

Effect of the type of ensembles in NKD. In the proposed NKD, we consider only the neighboring exits when distilling the knowledge of clean data. What if we consider fewer or more exits than the neighboring ones? If the number of ensembled exits is too small, the scheme does not distill high-quality features. If it is too large, the dependencies among submodels increase, resulting in high adversarial transferability. To see this effect, in Table 7 we measure the adversarial test accuracy of three ensembling methods that differ in the number of exits used to construct the ensembles: no ensembling, ensembling neighbors (NKD), and ensembling all exits. In the no-ensembling approach, the knowledge of each exit on clean data is distilled to the output of the same exit on adversarial examples. In contrast, the ensemble-all-exits scheme averages the knowledge of all exits on clean data and provides it to every exit for adversarial examples. The ensemble-neighbors approach corresponds to our NKD. The results show that the proposed NEO-KD with neighbor ensembling distills high-quality features while lowering dependencies among submodels, confirming our intuition.
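The three ensembling schemes compared in Table 7 differ only in which clean-data exit outputs are averaged to form each exit's distillation target. The plain-Python sketch below illustrates this under stated assumptions. The neighborhood of exit i is taken to be exits i-1, i, and i+1, clipped at the first and last exits. Outputs are averaged as softmax probabilities, and KL divergence stands in for the distillation loss. The helper names (`kd_targets`, `distill_loss`) and the toy logits are hypothetical and are not taken from the NEO-KD implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(logits: Vector) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def average(dists: List[Vector]) -> Vector:
    """Element-wise mean of several probability vectors."""
    return [sum(col) / len(dists) for col in zip(*dists)]


def kd_targets(clean_probs: List[Vector], scheme: str) -> List[Vector]:
    """Build one soft target per exit from the clean-data predictions of all exits."""
    num_exits = len(clean_probs)
    targets = []
    for i in range(num_exits):
        if scheme == "none":
            members = [i]  # same-position exit only
        elif scheme == "neighbors":
            # Assumed neighborhood: exit i and its adjacent exits, clipped at both ends.
            members = [j for j in (i - 1, i, i + 1) if 0 <= j < num_exits]
        elif scheme == "all":
            members = list(range(num_exits))  # one shared target for every exit
        else:
            raise ValueError(f"unknown scheme: {scheme}")
        targets.append(average([clean_probs[j] for j in members]))
    return targets


def kl_divergence(p: Vector, q: Vector, eps: float = 1e-12) -> float:
    """KL(p || q); eps guards against log(0)."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distill_loss(clean_logits: List[Vector], adv_logits: List[Vector], scheme: str) -> float:
    """Mean over exits of KL(target_i || adversarial prediction at exit i)."""
    targets = kd_targets([softmax(z) for z in clean_logits], scheme)
    adv_probs = [softmax(z) for z in adv_logits]
    return sum(kl_divergence(t, a) for t, a in zip(targets, adv_probs)) / len(adv_probs)


# Toy example: four exits, three classes (made-up logits).
clean_logits = [[2.0, 0.5, 0.1], [2.5, 0.3, 0.2], [3.0, 0.1, 0.0], [3.5, 0.0, -0.2]]
adv_logits = [[0.2, 1.5, 0.3], [0.8, 1.2, 0.1], [1.5, 1.0, 0.0], [2.2, 0.6, -0.1]]
for scheme in ("none", "neighbors", "all"):
    print(scheme, round(distill_loss(clean_logits, adv_logits, scheme), 4))
```

Under the "all" scheme every exit receives the identical target. The "neighbors" scheme still gives each exit its own target, built from more than one prediction.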

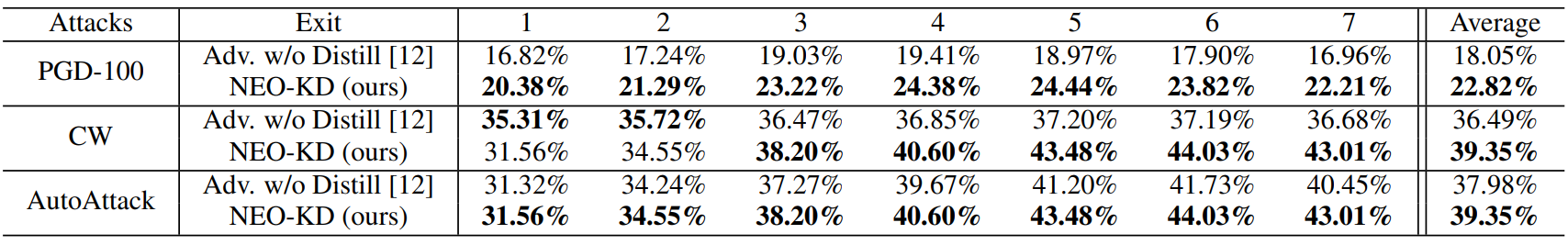

Robustness against stronger adversarial attacks. We evaluate NEO-KD against stronger adversarial attacks by performing average attacks based on PGD-100 [21], Carlini and Wagner (CW) [2], and AutoAttack [5]. Table 8 shows that NEO-KD achieves higher adversarial test accuracy than Adv. w/o Distill [12] in most cases. CW and AutoAttack are typically stronger than the PGD attack in single-exit networks. In multi-exit networks, however, these attacks become weaker than the PGD attack when all exits are taken into account. Details on generating the stronger adversarial attacks are described in the Appendix.
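An average attack perturbs the input to maximize the loss averaged over all exits rather than the loss of a single exit. The sketch below is a minimal illustration of an L∞ PGD average attack under several simplifying assumptions, and it is not the attack code used for Table 8. Each exit is a hypothetical linear classifier on the raw input, so the input gradient can be written by hand. The loss is cross-entropy, and there is no random start or clipping to a valid pixel range. The toy weights and the `eps`, `alpha`, and `steps` values are made up. In a real multi-exit network the input gradient would come from automatic differentiation, and `steps=100` would correspond to PGD-100.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def exit_loss_and_grad(W: Matrix, x: Vector, y: int) -> Tuple[float, Vector]:
    """Cross-entropy of one hypothetical linear exit (logits = W x) and its gradient w.r.t. x."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    p = softmax(logits)
    # dCE/dlogits = p - onehot(y); the chain rule through W x gives W^T (p - onehot(y)).
    d = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
    grad = [sum(W[k][j] * d[k] for k in range(len(W))) for j in range(len(x))]
    return -math.log(p[y]), grad


def average_loss_and_grad(exits: List[Matrix], x: Vector, y: int) -> Tuple[float, Vector]:
    """Average-attack objective: mean cross-entropy over all exits, plus its gradient."""
    total, grad = 0.0, [0.0] * len(x)
    for W in exits:
        loss, g = exit_loss_and_grad(W, x, y)
        total += loss
        grad = [a + b for a, b in zip(grad, g)]
    n = len(exits)
    return total / n, [g / n for g in grad]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def average_pgd(exits, x, y, eps, alpha, steps):
    """L-infinity PGD on the average loss; returns the highest-loss perturbation found."""
    delta = [0.0] * len(x)
    best_delta, best_loss = list(delta), average_loss_and_grad(exits, x, y)[0]
    for _ in range(steps):
        _, grad = average_loss_and_grad(exits, [a + b for a, b in zip(x, delta)], y)
        # Signed-gradient ascent step, then projection back onto the eps-ball around x.
        delta = [max(-eps, min(eps, d + alpha * sign(g))) for d, g in zip(delta, grad)]
        loss, _ = average_loss_and_grad(exits, [a + b for a, b in zip(x, delta)], y)
        if loss > best_loss:
            best_delta, best_loss = list(delta), loss
    return best_delta, best_loss


# Toy example: three linear exits over a 2-D input, three classes, true label 0.
exits = [
    [[1.0, -0.5], [-0.3, 0.8], [0.2, 0.1]],
    [[1.5, -0.2], [-0.6, 1.0], [0.1, -0.4]],
    [[2.0, 0.3], [-1.0, 1.2], [0.0, -0.8]],
]
x, y = [0.6, -0.2], 0
clean_loss, _ = average_loss_and_grad(exits, x, y)
delta, adv_loss = average_pgd(exits, x, y, eps=0.1, alpha=0.01, steps=100)
print("clean avg loss:", round(clean_loss, 4), "adversarial avg loss:", round(adv_loss, 4))
```

Returning the highest-loss iterate rather than the last one is a common PGD variant. Either choice targets the same average-over-exits objective.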

Additional results. Other results, including clean test accuracy, results with average-attack-based adversarial training, results with varying hyperparameters, and results with another baseline used for single-exit networks, are provided in the Appendix.


:::info This paper is available on arxiv under CC 4.0 license.

:::
