
How to Automate Google Cloud Security Audits with Terraform and Python

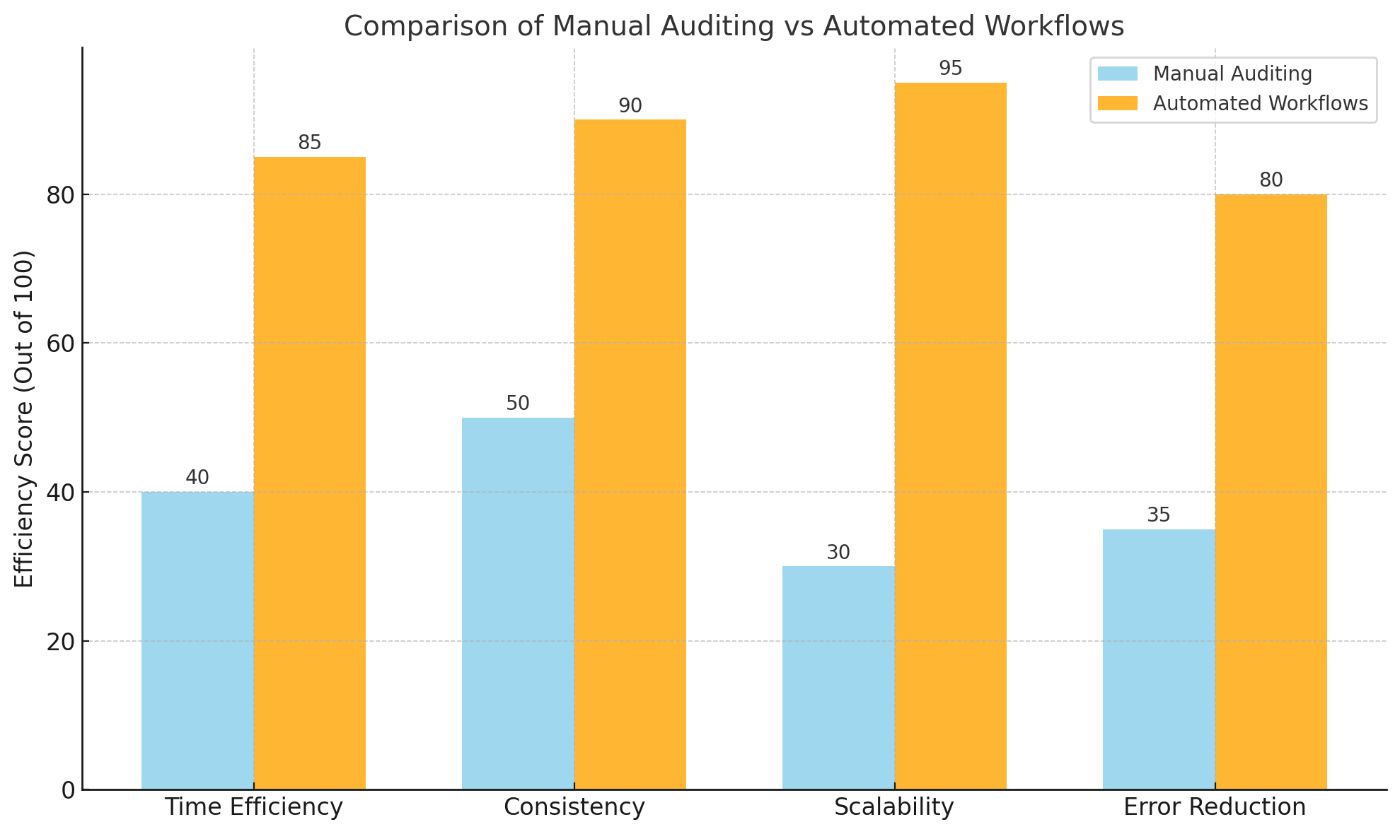

Security in cloud infrastructure is a top priority in today's cloud-driven world. Google Cloud Platform (GCP) is a powerful platform with a rich set of features, but, like its competitors, it is prone to inconsistencies caused by the scale and complexity of cloud environments. Security audits are therefore essential for identifying misconfigurations and vulnerabilities; when done manually, however, they are time-consuming and error-prone.

Terraform and Python are a strong combination for automating these audits. Terraform is an Infrastructure as Code (IaC) tool that manages GCP resources declaratively while baking in security best practices. Python has extensive libraries and GCP API support, which makes it easy to script custom audit checks and automation workflows. Integrating the two lets us build a scalable, efficient, and proactive security auditing system for GCP.

This article shows, with real-life examples and code snippets, how to automate GCP security audits programmatically with Terraform and Python. I will show you how to provision secure infrastructure and trigger automated security alerts in ways that help with cloud security management.

Setting Up Your Environment

Before we provision any resources and create the infrastructure for this article, we need to set up the Google Cloud environment. In this section, I will briefly list the prerequisites, tools, and configurations needed to get up and running.

Prerequisites

- A Google Cloud Platform account with administrative privileges.

- Basic knowledge of Google Cloud Platform services, Terraform, and Python.

- Terraform and Python installed on your local machine or development environment.

- Install Terraform by downloading the Terraform binary from HashiCorp's official site. You can also follow HashiCorp's installation instructions for other operating systems.

- Verify the Terraform installation by running `terraform -version`.

- Python 3.x is required; if it is not installed, download it from python.org. Then use pip to install the required Python libraries.
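Before moving on, a short script can confirm that both toolchains are in place. This is a minimal sketch, not an official tool: `module_available`, `missing_packages`, and `terraform_version` are hypothetical helper names, and the package list (`google-cloud-storage`, `google-cloud-compute`, `google-api-python-client`) is an assumption based on the APIs used later in this article, so adjust it to the libraries you actually install.

```python
import importlib.util
import shutil
import subprocess
from typing import Dict, List, Optional

# Assumed pip package -> import name pairs for the libraries used in this article.
REQUIRED_MODULES: Dict[str, str] = {
    "google-cloud-storage": "google.cloud.storage",
    "google-cloud-compute": "google.cloud.compute_v1",
    "google-api-python-client": "googleapiclient",
}


def module_available(module_name: str) -> bool:
    """Return True if the module can be found, without importing the module itself."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ModuleNotFoundError:
        # find_spec raises when a parent package (e.g. "google") is missing.
        return False


def missing_packages(required: Dict[str, str]) -> List[str]:
    """Return the pip package names whose modules cannot be found."""
    return [pkg for pkg, mod in required.items() if not module_available(mod)]


def terraform_version() -> Optional[str]:
    """Return the first line of `terraform -version`, or None if not on PATH."""
    if shutil.which("terraform") is None:
        return None
    result = subprocess.run(
        ["terraform", "-version"], capture_output=True, text=True, check=True
    )
    return result.stdout.splitlines()[0]


if __name__ == "__main__":
    print("Terraform:", terraform_version() or "NOT FOUND")
    missing = missing_packages(REQUIRED_MODULES)
    if missing:
        print("Missing libraries, install with: pip install " + " ".join(missing))
    else:
        print("All required Python libraries are installed.")
```

Running the file prints the Terraform version (or `NOT FOUND`) and a `pip install` line for any library it could not find.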

To interact with Google Cloud, we need appropriate permissions and a service account for automation. Follow these steps:

- Create a Service Account

Go to the Google Cloud Console.

Navigate to IAM & Admin > Service Accounts > Create Service Account.

Assign the roles the automation needs, such as Security Admin. Owner also works, but it grants far more access than auditing requires.

Download the JSON key file and save it securely on your local machine.


- Authenticate Terraform

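- Set up Terraform to use your service account credentials by pointing the `GOOGLE_APPLICATION_CREDENTIALS` environment variable at the downloaded JSON key file, for example `export GOOGLE_APPLICATION_CREDENTIALS="/path/to/key.json"`.

Add this line to your `.bashrc` or `.zshrc` profile so it persists across shell sessions.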


Authenticate Python Scripts

We also need to configure the service account credentials for the Python scripts.
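The Google client libraries pick up the key file automatically through Application Default Credentials when the `GOOGLE_APPLICATION_CREDENTIALS` environment variable points at it. If you prefer to load the credentials explicitly, a sketch along these lines works; `resolve_key_path` and `load_credentials` are hypothetical helper names, and the broad `cloud-platform` scope is an assumption you may want to narrow.

```python
import os
from pathlib import Path
from typing import Mapping, Optional

# Terraform's Google provider and the Google client libraries both read this
# variable to locate the service account key (Application Default Credentials).
CREDENTIALS_ENV_VAR = "GOOGLE_APPLICATION_CREDENTIALS"


def resolve_key_path(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the service account key path from the environment, or raise."""
    env = os.environ if env is None else env
    value = env.get(CREDENTIALS_ENV_VAR)
    if not value:
        raise RuntimeError(f"{CREDENTIALS_ENV_VAR} is not set")
    path = Path(value).expanduser()
    if not path.is_file():
        raise FileNotFoundError(f"Service account key not found: {path}")
    return path


def load_credentials(key_path: Path):
    """Load explicit service account credentials (needs the google-auth package)."""
    # Imported lazily so the helper above works without Google libraries installed.
    from google.oauth2 import service_account

    return service_account.Credentials.from_service_account_file(
        str(key_path),
        scopes=["https://www.googleapis.com/auth/cloud-platform"],
    )
```

For example, `creds = load_credentials(resolve_key_path())` can be passed to a client as `storage.Client(project="replace-your-project-id", credentials=creds)`.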

Terraform is an infrastructure as code tool that lets you build, change, and version infrastructure safely and efficiently by defining it as code. In this section, I'll walk you through creating secure infrastructure with Terraform.

Step 1: Creating a Terraform Configuration

Let's begin by defining a secure Cloud Storage bucket to store user data. You can read more about Cloud Storage buckets in the Cloud Storage documentation.


Create a Terraform Configuration File:

Save the following code in a file named `main.tf`:


Define Input Variables:

Create a variables.tf file to hold configurable variables:


Add a Terraform State Backend (Optional)

For reliable state management, configure a remote backend such as Google Cloud Storage. This step is optional, but it avoids conflicts when several team members create or update the same resources. Read this article on Terraform state conflicts.

- Initialize Terraform by running `terraform init`, which downloads the necessary providers and modules.

- Validate the configuration by running `terraform validate` to make sure the Terraform code has no syntax errors.

- Apply the configuration by running `terraform apply` to provision the resources. Terraform will display the plan and prompt you for confirmation. Enter `yes` to proceed.
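If you would rather drive these three steps from Python, for example from the same tooling that runs the audits later, a thin `subprocess` wrapper is enough. This is a hypothetical helper, not part of Terraform; `terraform apply` is left interactive so you still confirm the plan, and the `runner` parameter exists only so the sequence can be exercised without calling Terraform.

```python
import subprocess
from typing import Callable, List, Sequence

# The three commands described above, in order. `apply` stays interactive,
# so Terraform still shows the plan and waits for you to type "yes".
TERRAFORM_STEPS: List[List[str]] = [
    ["terraform", "init"],
    ["terraform", "validate"],
    ["terraform", "apply"],
]


def run_terraform_steps(
    workdir: str,
    steps: Sequence[Sequence[str]] = TERRAFORM_STEPS,
    runner: Callable[..., object] = subprocess.run,
) -> None:
    """Run each Terraform command in `workdir`, stopping at the first failure."""
    for cmd in steps:
        print("$", " ".join(cmd))
        # check=True makes subprocess.run raise CalledProcessError on a non-zero exit.
        runner(list(cmd), cwd=workdir, check=True)
```

Calling `run_terraform_steps(".")` from the directory that contains `main.tf` runs the commands in order and stops with a `CalledProcessError` at the first failing step.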

Step 3: Reviewing the Output

After applying the configuration, you'll see output similar to:

`google_storage_bucket.storage_bucket: Creation complete after 3s [id=storage-bucket-audit--xyz123]`

Verify the bucket in the Google Cloud Console under Cloud Storage. It will have:

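- Versioning enabled, so deleted and overwritten objects can be recovered.

- Access logging enabled to track activity on the bucket.

- Lifecycle policies that manage object retention.

Best Practices

- Use least-privilege IAM roles for your service accounts.

- Ensure encryption at rest for all storage buckets. Cloud Storage encrypts data by default; add customer-managed encryption keys (CMEK) where your compliance requirements call for them.

- Regularly audit Terraform state files for exposed sensitive information, since state can store secrets in plain text.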
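To check the bucket from Python rather than the console, the sketch below reads the same properties through the `google-cloud-storage` library (`versioning_enabled`, `get_logging()`, `lifecycle_rules`, and `iam_configuration`). `BucketReport` and `report_for` are hypothetical names, and the uniform bucket-level access check assumes your `main.tf` enables that setting; drop it if yours does not.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BucketReport:
    name: str
    versioning: bool
    logging: bool
    lifecycle_rules: int
    uniform_access: bool

    @property
    def problems(self) -> List[str]:
        """List the expected settings that are missing on this bucket."""
        issues = []
        if not self.versioning:
            issues.append("versioning disabled")
        if not self.logging:
            issues.append("access logging not configured")
        if self.lifecycle_rules == 0:
            issues.append("no lifecycle rules")
        if not self.uniform_access:
            issues.append("uniform bucket-level access disabled")
        return issues


def report_for(bucket) -> BucketReport:
    """Build a report from a google.cloud.storage Bucket (or a look-alike object)."""
    return BucketReport(
        name=bucket.name,
        versioning=bool(bucket.versioning_enabled),
        # get_logging() returns None when no log bucket is configured.
        logging=bucket.get_logging() is not None,
        lifecycle_rules=len(list(bucket.lifecycle_rules)),
        uniform_access=bool(
            bucket.iam_configuration.uniform_bucket_level_access_enabled
        ),
    )
```

For the bucket created above, `report_for(storage.Client().get_bucket("storage-bucket-audit--xyz123")).problems` should return an empty list when every expected setting is in place.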

While Terraform provisions secure resources, Python keeps them secure by automating security audits for continuous compliance. In this section, I will show how to use Python scripts to identify security misconfigurations in Google Cloud Platform.


Install Required Libraries

Ensure the necessary Python libraries are installed:


Authenticate Using Service Account

Use the service account credentials to interact with GCP APIs:

Audit IAM Policies for Overly Permissive Roles:

This script identifies Cloud Storage buckets with overly permissive IAM policies, such as granting "allUsers" or "allAuthenticatedUsers" access:
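A minimal sketch of such a check, assuming the `google-cloud-storage` library: it reads each bucket's IAM policy with `get_iam_policy(requested_policy_version=3)` and flags any binding whose members include `allUsers` or `allAuthenticatedUsers`. The function names are illustrative rather than the author's original script, and the Google import is deferred so the pure check can be tested on its own.

```python
from typing import Dict, Iterable, List, Mapping

# Principals that make a resource accessible to anyone (or any Google account).
PUBLIC_PRINCIPALS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(bindings: Iterable[Mapping]) -> List[dict]:
    """Return the bindings that grant a role to allUsers/allAuthenticatedUsers."""
    findings = []
    for binding in bindings:
        exposed = PUBLIC_PRINCIPALS.intersection(binding.get("members", ()))
        if exposed:
            findings.append({"role": binding["role"], "members": sorted(exposed)})
    return findings


def audit_bucket_iam(project_id: str) -> Dict[str, List[dict]]:
    """Map each publicly exposed bucket to its public bindings."""
    from google.cloud import storage  # requires google-cloud-storage

    client = storage.Client(project=project_id)
    results = {}
    for bucket in client.list_buckets():
        # Policy version 3 returns bindings in full form, including conditions.
        policy = bucket.get_iam_policy(requested_policy_version=3)
        findings = public_bindings(policy.bindings)
        if findings:
            results[bucket.name] = findings
    return results
```

`audit_bucket_iam("replace-your-project-id")` returns a dictionary keyed by bucket name; an empty dictionary means no bucket grants access to `allUsers` or `allAuthenticatedUsers`.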


Check for Missing Logging Configurations:

This snippet checks whether access logging is enabled for each Cloud Storage bucket:
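One way to express this check, assuming `google-cloud-storage`: `Bucket.get_logging()` returns `None` when no log bucket is configured, so the sketch simply collects those bucket names. The helper name is hypothetical.

```python
from typing import Iterable, List


def buckets_missing_logging(buckets: Iterable) -> List[str]:
    """Return names of buckets whose access logging is not configured.

    In google-cloud-storage, Bucket.get_logging() returns None when no log
    bucket is set, and a dict containing 'logBucket' otherwise.
    """
    return [b.name for b in buckets if b.get_logging() is None]
```

For instance, `buckets_missing_logging(storage.Client(project="replace-your-project-id").list_buckets())` returns the names of the buckets to fix.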

Run the script with the following command:

`python perform_gcp_audit.py`

The script prints the buckets that have open IAM roles or missing logging configurations so that you can take the required action.

Step 4: Automating the Process

To run the script continuously, schedule it with cron or integrate it into a CI/CD pipeline.

Example of a cron job to run the script daily:

`0 0 * * * /usr/bin/python3 /path/to/perform_gcp_audit.py`

Best Practices

- Log audit results to a secure location for traceability.

- Combine findings with GCP’s Security Command Center for a holistic security view.

- Regularly update the script to include checks for new services or configurations.
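For the first practice, one simple approach is an append-only JSON Lines file, one record per finding, that you later copy to a restricted bucket. This is a sketch under assumptions: the finding fields (`resource`, `issue`) and the `append_findings` helper are hypothetical.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, Iterable, Union


def append_findings(
    findings: Iterable[Dict[str, str]], log_path: Union[str, Path]
) -> int:
    """Append each finding as one JSON line stamped with the audit time (UTC).

    Returns the number of records written.
    """
    timestamp = datetime.now(timezone.utc).isoformat()
    path = Path(log_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    count = 0
    # Append mode keeps the records of earlier runs, giving a simple audit trail.
    with path.open("a", encoding="utf-8") as fh:
        for finding in findings:
            record = {"timestamp": timestamp, **finding}
            fh.write(json.dumps(record, sort_keys=True) + "\n")
            count += 1
    return count
```

After a run, the file could be uploaded with `storage.Client().bucket("your-audit-log-bucket").blob("audit/findings.jsonl").upload_from_filename(str(path))`, where the bucket name is a placeholder for a bucket with tightly restricted access.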

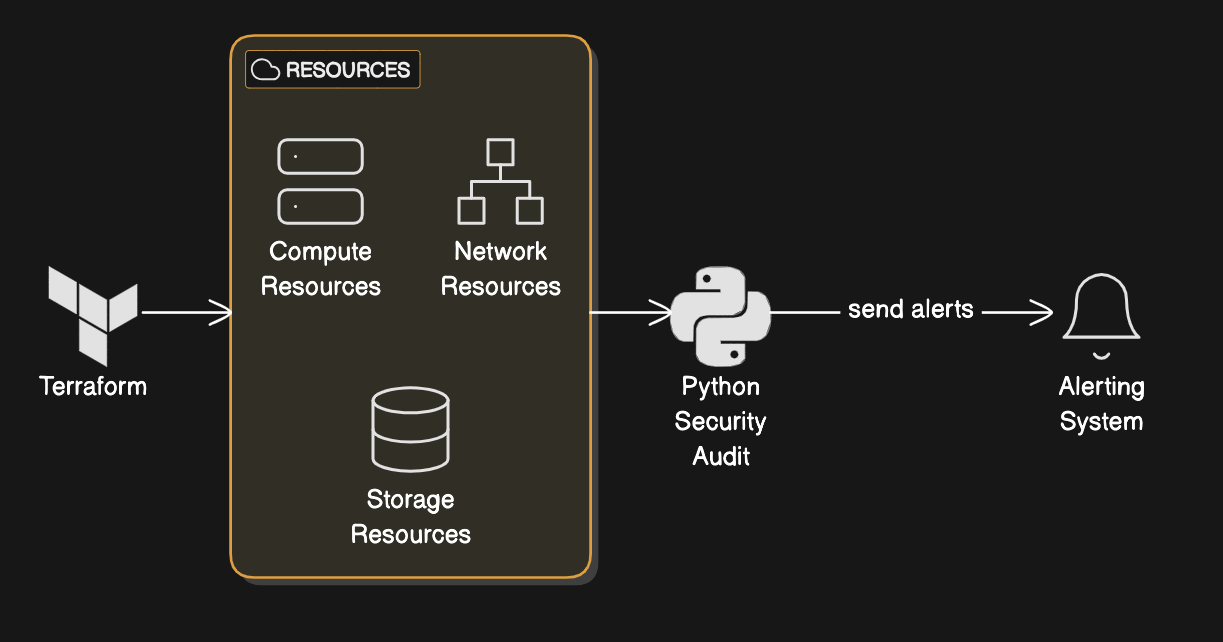

We will now combine Python and Terraform into an automated workflow that provisions infrastructure and performs security audits. I will walk through how to integrate the two to build a proactive security system.


Terraform can export information about the resources it creates through outputs, which Python scripts can then ingest for security audits.


- Specify Outputs in Terraform by adding an outputs.tf file to your Terraform configuration:

This exports the names of all storage buckets created by Terraform.


- Apply the Terraform Configuration by running the following commands to update and retrieve the outputs:

Using the Terraform outputs limits the audit to the resources provisioned by Terraform, which makes it more targeted.


- Load Terraform Outputs in Python
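`terraform output -json` prints every output as an object with `sensitive`, `type`, and `value` keys, so loading the outputs in Python only takes a `subprocess` call and a JSON parse. In this sketch the helper names are hypothetical, and `bucket_names` stands in for whatever output name your `outputs.tf` defines.

```python
import json
import subprocess
from typing import Any, Dict


def parse_terraform_outputs(raw_json: str) -> Dict[str, Any]:
    """Flatten `terraform output -json` into {output_name: value}.

    Terraform emits each output as {"sensitive": ..., "type": ..., "value": ...}.
    """
    return {name: meta["value"] for name, meta in json.loads(raw_json).items()}


def load_terraform_outputs(workdir: str = ".") -> Dict[str, Any]:
    """Run `terraform output -json` in `workdir` and return the output values."""
    result = subprocess.run(
        ["terraform", "output", "-json"],
        cwd=workdir,
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the terraform command fails
    )
    return parse_terraform_outputs(result.stdout)
```

`load_terraform_outputs(".")["bucket_names"]` would then return the list of bucket names exported by Terraform.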


Integrate Outputs with Audit Scripts

Modify the previous IAM audit script to use these bucket names:
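A sketch of the modified audit, assuming the bucket names have already been read from the Terraform outputs: it checks only those buckets instead of listing every bucket in the project. `audit_named_buckets` and `storage_bindings_fetcher` are hypothetical names; the fetcher is passed in as a function so the filtering logic does not depend on a live Google Cloud connection.

```python
from typing import Callable, Dict, Iterable, List

PUBLIC_PRINCIPALS = {"allUsers", "allAuthenticatedUsers"}


def audit_named_buckets(
    bucket_names: Iterable[str],
    get_bindings: Callable[[str], List[dict]],
) -> Dict[str, List[str]]:
    """Audit only the given buckets; map each flagged name to its public roles."""
    flagged = {}
    for name in bucket_names:
        roles = sorted(
            b["role"]
            for b in get_bindings(name)
            if PUBLIC_PRINCIPALS.intersection(b.get("members", ()))
        )
        if roles:
            flagged[name] = roles
    return flagged


def storage_bindings_fetcher(project_id: str) -> Callable[[str], List[dict]]:
    """Return a function that fetches a bucket's IAM bindings from Cloud Storage."""
    from google.cloud import storage  # requires google-cloud-storage

    client = storage.Client(project=project_id)

    def fetch(name: str) -> List[dict]:
        policy = client.bucket(name).get_iam_policy(requested_policy_version=3)
        return list(policy.bindings)

    return fetch
```

For example, `audit_named_buckets(names, storage_bindings_fetcher("replace-your-project-id"))` returns only the Terraform-managed buckets that grant access to `allUsers` or `allAuthenticatedUsers`.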

To create a fully automated workflow, we need a CI/CD pipeline that runs Terraform resource provisioning and the Python audit scripts together.


- Create a Shell Script to Orchestrate the Process
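The same orchestration can also be written in Python, which can be easier to test than a shell script. The sketch below is an alternative, not the author's script: it provisions with Terraform non-interactively (`-input=false`, `-auto-approve`) and then runs `perform_gcp_audit.py`, stopping at the first step that fails. The pipeline list is hypothetical, and it only blocks a CI job on findings if the audit script itself exits with a non-zero code when it finds issues.

```python
import subprocess
import sys
from typing import Callable, List, Sequence

# Hypothetical CI pipeline: provision non-interactively, then run the audit.
# "-auto-approve" skips Terraform's confirmation prompt, so reserve it for CI.
PIPELINE: List[List[str]] = [
    ["terraform", "init", "-input=false"],
    ["terraform", "apply", "-auto-approve", "-input=false"],
    [sys.executable, "perform_gcp_audit.py"],
]


def run_pipeline(
    steps: Sequence[Sequence[str]], runner: Callable[[List[str]], int]
) -> int:
    """Run steps in order and return the first non-zero exit code, else 0."""
    for cmd in steps:
        code = runner(list(cmd))
        if code != 0:
            print(f"Step failed with exit code {code}: {' '.join(cmd)}", file=sys.stderr)
            return code
    return 0


def subprocess_runner(cmd: List[str]) -> int:
    """Execute one command, letting its output stream through, and return its exit code."""
    return subprocess.run(cmd).returncode
```

Invoke it as `sys.exit(run_pipeline(PIPELINE, subprocess_runner))` so the CI job reports the failing exit code.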


Integrate with CI/CD using tools like GitHub Actions or Google Cloud Build to automate the script.

Below is an example GitHub Actions configuration:

Add notifications for audit results:

- Python script to send emails for critical findings:


- Use monitoring platforms such as Google Cloud Monitoring or Wavefront to visualize the audit results.

- Use CI/CD pipelines to run security checks after every infrastructure change.

- Regularly update Terraform configurations and Python scripts to align with the latest security standards and patches.

- Store Terraform state files and Python logs securely in Cloud Storage buckets.
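The email notification for critical findings mentioned above can be sketched with Python's standard `smtplib` and `email` modules. The finding format (`resource`, `issue`, `severity`), the `CRITICAL` label, and the helper names are assumptions made for illustration; the SMTP host and credentials are whatever your mail provider requires.

```python
import smtplib
from email.message import EmailMessage
from typing import Dict, List, Optional


def build_alert(
    findings: List[Dict[str, str]], sender: str, recipient: str
) -> Optional[EmailMessage]:
    """Build an alert email for findings marked CRITICAL, or None if there are none."""
    critical = [f for f in findings if f.get("severity") == "CRITICAL"]
    if not critical:
        return None
    msg = EmailMessage()
    msg["Subject"] = f"[GCP audit] {len(critical)} critical finding(s)"
    msg["From"] = sender
    msg["To"] = recipient
    body = "\n".join(f"- {f['resource']}: {f['issue']}" for f in critical)
    msg.set_content("Critical security findings:\n\n" + body)
    return msg


def send_alert(
    msg: EmailMessage, host: str, port: int, user: str, password: str
) -> None:
    """Send the message through an SMTP server that supports STARTTLS."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.starttls()
        smtp.login(user, password)
        smtp.send_message(msg)
```

A typical call is `msg = build_alert(findings, "audit@example.com", "secops@example.com")` followed by `send_alert(msg, "smtp.example.com", 587, user, password)` when `msg` is not `None`; the addresses and host are placeholders.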

We implemented some pieces of the puzzle above. Now let's explore a real-world scenario in which Terraform and Python automate security for a web application hosted on Google Cloud Platform. I will walk you through how to provision secure resources, audit them, and introduce proactive monitoring workflows.

Scenario Overview

A company hosts its e-commerce website on Google Cloud Platform using:

- A Compute Engine VM instance for the application server.

- A Cloud SQL instance for the database.

- A Cloud Storage bucket for data uploaded by users.

Our goal is to:

- Provision these resources securely using Terraform.

- Audit the infrastructure for common security issues using Python.

- Monitor the infrastructure in real time and trigger an alert on any misconfiguration found.

Terraform Configuration:

Here's how the infrastructure is defined in `main.tf`:


- Apply the Configuration

Auditing IAM Roles for Compute Engine

Check if any instance has overly permissive IAM roles:
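A minimal sketch of this check, assuming the `google-cloud-compute` library: `InstancesClient.aggregated_list` walks the instances in every zone, and `get_iam_policy` returns each instance's bindings, which are flagged when they include `allUsers` or `allAuthenticatedUsers`. The function names are illustrative, and the message format mirrors the example output below.

```python
from typing import Iterable, List

PUBLIC_PRINCIPALS = {"allUsers", "allAuthenticatedUsers"}


def overly_permissive(bindings: Iterable) -> List[dict]:
    """Return bindings (objects with .role and .members) that include public principals."""
    return [
        {"role": b.role, "members": list(b.members)}
        for b in bindings
        if PUBLIC_PRINCIPALS.intersection(b.members)
    ]


def audit_instances(project_id: str) -> None:
    """Print public IAM bindings for every VM instance in the project."""
    from google.cloud import compute_v1  # requires google-cloud-compute

    client = compute_v1.InstancesClient()
    # aggregated_list yields (scope, InstancesScopedList) pairs, e.g. "zones/us-west1-a".
    for scope, scoped in client.aggregated_list(project=project_id):
        zone = scope.split("/")[-1]
        for instance in scoped.instances:
            policy = client.get_iam_policy(
                project=project_id, zone=zone, resource=instance.name
            )
            for finding in overly_permissive(policy.bindings):
                print(
                    f"Instance '{instance.name}' in zone '{zone}' has overly "
                    f"permissive IAM permissions: {finding}"
                )
```

Call `audit_instances("replace-your-project-id")` to print one line per public binding.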

Example Output

`Checking instances in zone: us-west1-a`

`Instance 'advait-patel-1' in zone 'us-west1-a' has overly permissive IAM permissions: {'role': 'roles/compute.viewer', 'members': ['allUsers']}`

`Checking instances in zone: us-west1-b`

`Could not find the overly permissive IAM permissions.`

`...`

Auditing SQL Instance SSL Settings:

Verify whether each Cloud SQL instance enforces SSL connections:
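A sketch of the SSL check, assuming `google-api-python-client` and the Cloud SQL Admin API (`sqladmin`, `v1beta4`): an instance counts as enforcing SSL if its `settings.ipConfiguration` has the legacy `requireSsl` flag set or an `sslMode` that rejects unencrypted connections. The helper names are hypothetical, and pagination is skipped for brevity.

```python
from typing import Any, Dict

# Cloud SQL Admin API sslMode values that reject unencrypted connections.
ENFORCING_SSL_MODES = {"ENCRYPTED_ONLY", "TRUSTED_CLIENT_CERTIFICATE_REQUIRED"}


def enforces_ssl(instance: Dict[str, Any]) -> bool:
    """Return True if an instance resource (as returned by the API) enforces SSL."""
    ip_config = instance.get("settings", {}).get("ipConfiguration", {})
    # requireSsl is the legacy flag; sslMode is the newer, more granular setting.
    if ip_config.get("requireSsl"):
        return True
    return ip_config.get("sslMode") in ENFORCING_SSL_MODES


def audit_sql_instances(project_id: str) -> None:
    """Print the SSL status of every Cloud SQL instance in the project."""
    # Requires google-api-python-client; credentials come from ADC.
    from googleapiclient import discovery

    service = discovery.build("sqladmin", "v1beta4")
    # Only the first page of results is read here, for brevity.
    response = service.instances().list(project=project_id).execute()
    instances = response.get("items", [])
    if not instances:
        print(f"Could not find Cloud SQL instances in the project '{project_id}'.")
    for inst in instances:
        if enforces_ssl(inst):
            print(f"SQL Instance '{inst['name']}' enforces SSL connections.")
        else:
            print(
                f"WARNING: SQL Instance '{inst['name']}' does NOT enforce SSL connections."
            )
```

Running `audit_sql_instances("replace-your-project-id")` prints messages in the same form as the example output below.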

Example Output

`SQL Instance 'advait-test' enforces SSL connections.`

`WARNING: SQL Instance 'advait-patel-test' does NOT enforce SSL connections.`

`Could not find Cloud SQL instances in the project 'replace-your-project-id'.`

Step 3: Continuous Monitoring and Alerts

- Set Up Monitoring for GCP Resources: Use Google Cloud Monitoring to set up alerts for critical configurations:

Log-Based Alerts: Monitor IAM changes for Compute Engine and Storage buckets.

Uptime Checks: Ensure the web server is accessible.


- Schedule Automated Security Audits: Combine Terraform and Python into a cron job or CI/CD pipeline to ensure regular security checks:


- Email Alerts for Misconfigurations: Send alerts for critical issues detected during audits:

- Deploy the infrastructure using Terraform.

- Run the Python audit script to check for security issues.

- Fix identified issues (e.g., enable SSL for Cloud SQL, correct IAM permissions).

- Test monitoring and alerting to ensure quick response to future issues.
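To exercise the log-based alerts on IAM changes from Step 3, it helps to have the filter itself. The sketch below builds a Cloud Logging query over Admin Activity audit logs and, optionally, fetches matching entries with the `google-cloud-logging` library. The method names in the filter are assumptions to confirm in Logs Explorer for your project, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import List


def iam_change_filter(since_iso: str) -> str:
    """Build a Cloud Logging filter for IAM policy changes since `since_iso`.

    Assumption: bucket IAM edits are logged with methodName
    "storage.setIamPermissions" and instance IAM edits with a methodName
    containing "setIamPolicy"; confirm both in Logs Explorer.
    """
    return (
        'logName:"cloudaudit.googleapis.com%2Factivity" AND ('
        '(resource.type="gcs_bucket" AND '
        'protoPayload.methodName="storage.setIamPermissions") OR '
        '(resource.type="gce_instance" AND '
        'protoPayload.methodName:"setIamPolicy")'
        f') AND timestamp>="{since_iso}"'
    )


def recent_iam_changes(project_id: str, hours: int = 24) -> List:
    """Return IAM-change log entries from the last `hours` hours."""
    # Requires the google-cloud-logging package; imported lazily.
    from google.cloud import logging as cloud_logging

    since = (datetime.now(timezone.utc) - timedelta(hours=hours)).isoformat()
    client = cloud_logging.Client(project=project_id)
    return list(client.list_entries(filter_=iam_change_filter(since)))
```

The same filter string can back a log-based alerting policy in Cloud Monitoring, so a bucket or instance becoming public raises an alert instead of waiting for the next scheduled audit.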

In this article, we looked at how to automate Google Cloud security audits with Terraform and Python. Combining the strengths of both gives you a solid, proactive security workflow.

Key takeaways include:

==Infrastructure as Code (IaC) for Secure Deployments==: Terraform simplifies resource provisioning while maintaining security best practices, including IAM role restrictions and bucket-level access controls.

==Python for Continuous Audits==: Python scripts integrate easily with GCP APIs to run automated security checks, such as detecting misconfigured IAM policies, verifying that logging is enabled, and checking that SSL connections are enforced.

==Integration for Scalability==: Together, Terraform and Python form a pipeline that goes beyond resource provisioning and auditing to include continuous monitoring of resources, making it an end-to-end security solution.

==Real-World Application==: We applied these tools to a real-world use case, showing how an e-commerce application can be secured, with practical workflows and code examples for CI/CD integration to achieve continuous compliance.

This approach is highly scalable and adaptable to any kind of cloud environment, so your infrastructure stays secure as it grows. More importantly, integrating these workflows with monitoring and alerting systems lets teams respond quickly to security issues and minimize risk.


All Rights Reserved. Copyright , Central Coast Communications, Inc.