and the distribution of digital products.

DM Television

An Efficient One-Round Adaptive Algorithm In Equivalence Testing

:::info Authors:

(1) Diptarka Chakraborty, National University of Singapore, Singapore

(2) Sourav Chakraborty, Indian Statistical Institute, Kolkata;

(3) Gunjan Kumar, National University of Singapore, Singapore;

(4) Kuldeep S. Meel, University of Toronto, Toronto.

:::

Table of Links4 An Efficient One-Round Adaptive Algorithm and 4.1 High-Level Overview

6 Acknowledgements and References

B An O(log log n)-query fully adaptive algorithm

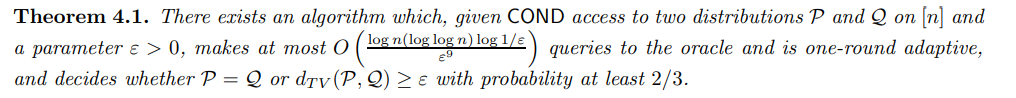

4 An Efficient One-Round Adaptive AlgorithmOur main contribution is a one-round adaptive tester for the equivalence testing problem in the COND model that makes at most O˜(log n) queries.

\

\ In the remaining part of this paper, we will prove the above theorem. We start with a high-level idea behind our algorithm, and then we provide a formal description of the algorithm with a detailed analysis.

4.1 High-Level OverviewThe first attempt to design an equivalence tester is to pick a sample, say i, from P and compare the probability mass P(i) and Q(i) in P and Q respectively. If P and Q are far (in total variation distance), then with enough probability, the probability mass of i in both distributions is significantly different. However, the issue is that estimating the probability mass of the element i can be very expensive. To bypass this issue, the idea is to sample a subset S of the domain, hoping the following:

\ • the probability mass of S in P is comparable to that of the mass of S in Q, and

\ • the probability mass of i (in P) is similar to the probability mass of S.

\ Assuming that all the above two statements hold, one can use conditional sampling (conditioned on S ∪i) to compare P(i)/P(S ∪ i) and Q(i)/Q(S ∪ i). Since, we assumed P(S) is similar to Q(S) so with high enough probability P(i)/P(S ∪ i) and Q(i)/Q(S ∪ i) will be different enough. And since we assumed that P(i) is comparable to P(S), one can estimate P(i)/P(S ∪ i) and can upper bound Q(i)/Q(S ∪ i) using only a few samples, which should be sufficient to distinguish P from Q if the the two distributions are far. But the issue is how to take care of the two assumptions.

\

\ • (Case 2) There is large “tail probability,” because of which concentration is not possible. (The notion of tail probability is formalized in Section 4.3).

\

\

:::info This paper is available on arxiv under CC BY-NC-SA 4.0 DEED license.

:::

\

- Home

- About Us

- Write For Us / Submit Content

- Advertising And Affiliates

- Feeds And Syndication

- Contact Us

- Login

- Privacy

All Rights Reserved. Copyright , Central Coast Communications, Inc.