and the distribution of digital products.

DM Television

Coin3D Delivers Fast, Precise 3D Generation with Strong User Approval

3 Method and 3.1 Proxy-Guided 3D Conditioning for Diffusion

3.2 Interactive Generation Workflow and 3.3 Volume Conditioned Reconstruction

4 Experiment and 4.1 Comparison on Proxy-based and Image-based 3D Generation

5 Conclusions, Acknowledgments, and References

\ SUPPLEMENTARY MATERIAL

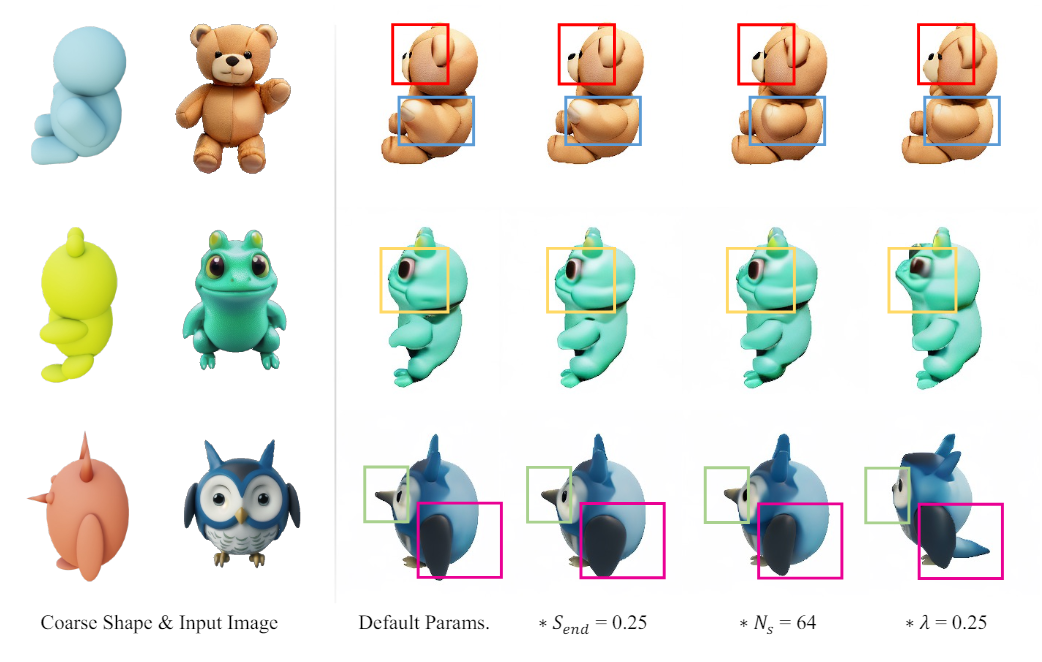

C MORE EXPERIMENTS C.1 Runtime EvaluationAs shown in Fig. L, given a 3D proxy and text prompt, Coin3D first takes 2s to generate candidate images and 6s to generate 3Daware conditioned multiview images. During previewing, Coin3D

\

\ responds to the camera rotating instantly (<0.3s) and takes 2s for convergence (baseline without volume-cache requires 6s). For interactive editing, Coin3D takes 3s to update the 2D condition and 9s to update the feature volume, which is then ready for previewing. As a comparison, existing editable 3D generation methods take much longer for feedback, e.g., ~1h for Progressive3D and FocalDreamer, ~0.5h for Fantasia3D. Finally, Coin3D takes 4~5m (600 iterations) to reconstruct and export the textured mesh (see Fig. 1 and Fig. 4 from the main paper for "proxies vs. textured meshes").

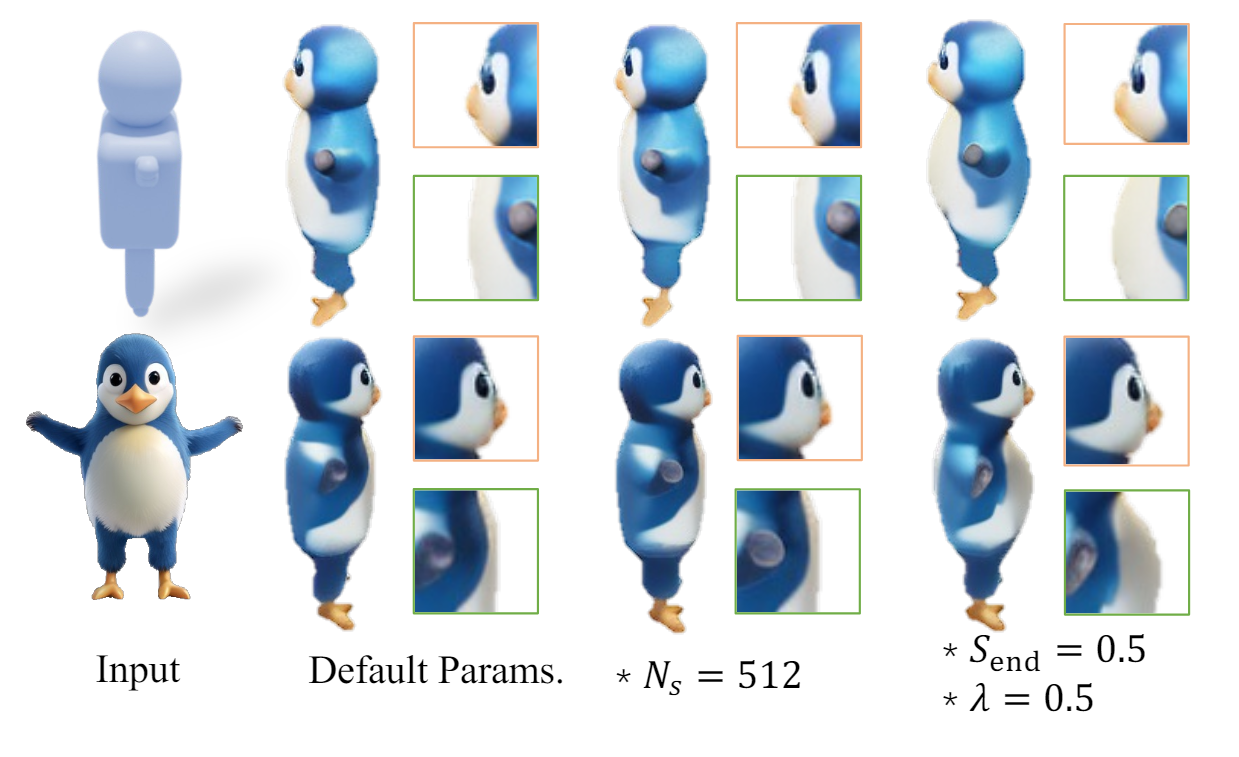

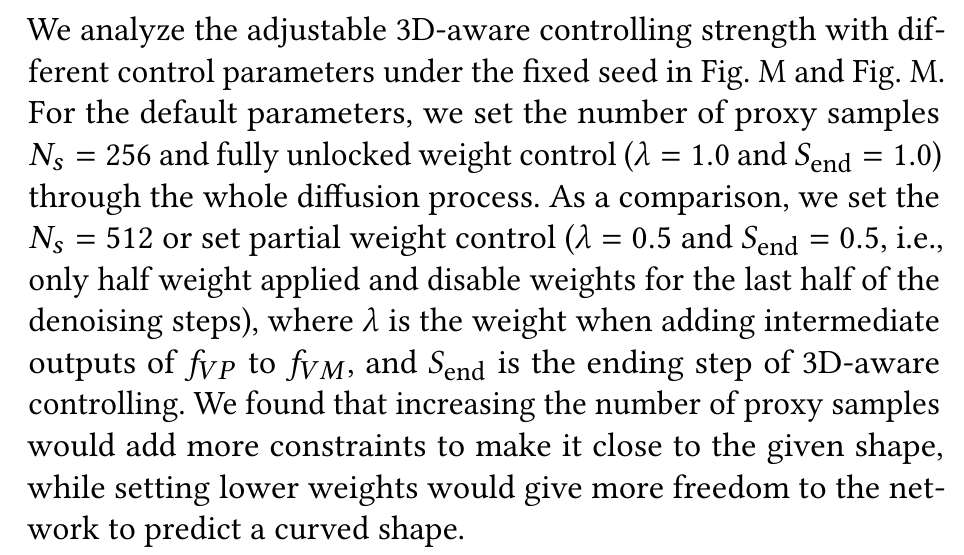

C.2 3D-Aware Controlling Strength

Since Fantasia3D supports fine-tuning on the pre-trained mesh, it can also conduct interactive editing by updating text prompts. Therefore, we compare our interactive editing method with Fantasia3D fine-tuning. We use the user-guided generation method mentioned in Fantasia3D. The first stage performs geometry optimization and texture optimization based on the initial shape, and the second stage uses the first stage’s optimized object but modifies the text prompt during optimization. During the experiment, we first use a flat cylinder as the initial input shape, and use text

\

\ prompt “a birthday cake base” to guide the optimization. Second, we add candles by modifying the proxy shape and updating the text prompt to “a birthday cake with three candles on top” and As shown in Fig. J, our method successfully generates appealing cakes with candles, while Fantasia3D fails to achieve a reasonable result (e.g., almost no complete candle on the top).

C.4 Proxy-Based 3D Generation vs. Texture SynthesisWe also compare our method with texture synthesis work TEXTure [Richardson et al. 2023], which generates UV textures given the corresponding geometry. As shown in Fig. I, when given the same coarse proxy for generation, TEXTure tends to generate tightly bounded textures on the given mesh, resulting in blurry appearances and invisible facial features of the dinosaur. In contrast, our method allows a certain degree of freedom during the generation, which gracefully synthesizes the dinosaur with vivid facial details. The experiment demonstrates that the proxy-based 3D generation is far beyond the texture synthesis task, as it requires the method to generate more details upon the coarse proxy shape.

C.5 Proxy-Based 3D Generation vs. Depth-Controlled 3D GenerationWe compare our proxy-based 3D generation with the depth-controlled 3D generation pipeline from Zero123++ [Shi et al. 2023a], where we feed the Zero123++ with the multiview depth maps rendered from the coarse shape proxy. As shown in Fig. K, even only given a coarse shape of the animal with no ears, our method still generates cute animal ears upon the simple shape, while Zero123++ can only synthesize novel views of the object that tightly fit to the coarse shape proxy with poor facial details. This demonstrates that simply using 2D depth control as a condition in multiview generation cannot achieve the ideal coarse 3D control ability like ours, which further proves the value of adding 3D-aware control in a 3D manner.

C.6 User Studies of Ablation StudiesWe selected 10 examples of the ablation studies in Sec. 4.4 and asked 24 users to judge whether the proposed strategies improve the results. The statistic shows 78% of users believe volume-SDS improves the quality, 75% of users think 3D mask dilation makes editing more natural, 96% of users find the proxy helpful in maintaining shape integrity.

C.7 User Study of 3D Interaction of Proxy-based 3D GenerationWe also conducted a user study of proxy-based 3D generation. We show users the process of making coarse shapes in Blender, as well as the generated multi-view images and 3D reconstruction results. We asked each participant to rate three questions: (a) the difficulty of using 3D modeling tools; (b) overall satisfaction with the effectiveness of our approach; (c) willingness to use our methods, on a scale of 1 to 5, where 1 in (a) means easy to use, 5 in (b) means satisfied with the effectiveness, and 5 in (c) means willing to use. The score of (a) is 2.38, (b) is 4.62, and (c) 4.46, which indicates that most of the participants consider the difficulty of coarse shape modeling is acceptable and are willing to use our method.

\

\

\

:::info Authors:

(1) Wenqi Dong, from Zhejiang University, and conducted this work during his internship at PICO, ByteDance;

(2) Bangbang Yang, from ByteDance contributed equally to this work together with Wenqi Dong;

(3) Lin Ma, ByteDance;

(4) Xiao Liu, ByteDance;

(5) Liyuan Cui, Zhejiang University;

(6) Hujun Bao, Zhejiang University;

(7) Yuewen Ma, ByteDance;

(8) Zhaopeng Cui, a Corresponding author from Zhejiang University.

:::

:::info This paper is available on arxiv under CC BY 4.0 DEED license.

:::

\

- Home

- About Us

- Write For Us / Submit Content

- Advertising And Affiliates

- Feeds And Syndication

- Contact Us

- Login

- Privacy

All Rights Reserved. Copyright , Central Coast Communications, Inc.