and the distribution of digital products.

AI is Actually Good at Understanding Documents

Whenever some new tech shows up, it gets drowned in hyperbole. My Twitter is full of “influencers” who claim to have built a full website with a single prompt, but anyone who’s out there actually building websites knows that LLMs are currently about good enough to implement small functions, and that they go off the deep end on any long-range task with an entire code repository as context.

Remember when we were promised self-driving cars tomorrow about ten years ago? Self-driving is a solved problem, said Elon Musk, the ultimate hype meister, 8 years ago.

While we were waiting for Teslas to start doing donuts on their own, less glamorous efforts were well underway. Mobileye built a sensor that goes beep when you’re about to run into something. They saved countless lives, and reduced insurance claims by about 90%. They built a $17B company.

I believe that document understanding is the Mobileye technology for LLMs. Understanding financial tables, tabulating insurance claims, and inferring medical codes from a doctor’s notes seem modest compared to the lofty dreams. But if you double-click on this problem, you’ll find it was previously unsolved and that it unlocks a lot of value.

Backstory

A decade ago, I worked for LinkedIn’s illustrious data standardization team. We were trying to crack one deceptively simple problem: how do you make sense of a résumé, no matter where it comes from, and map its titles to a small set of recognized titles?

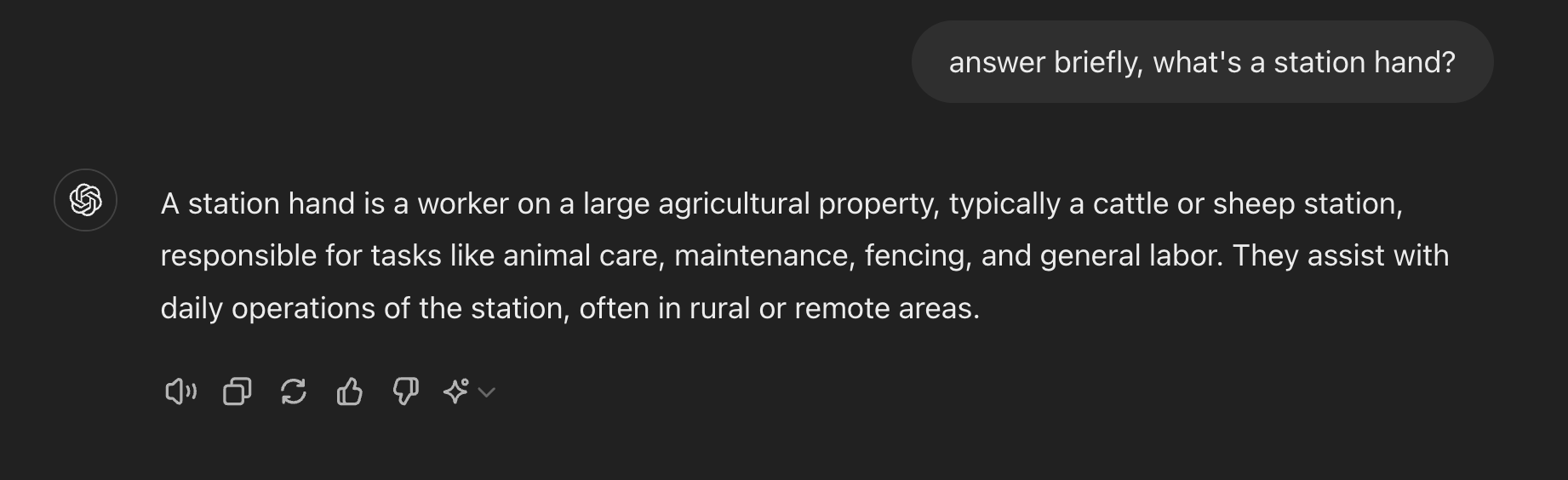

You’d think this would be easy. I mean, “software engineer” is a pretty straightforward title, right? But what if someone writes “associate”? They could be stocking shelves or pulling in a six-figure salary at a law firm. What’s a Station Hand (an Aussie cowboy)? What’s a consultant (it could mean advisor or freelancer, but it could also mean a doctor if you’re British and have the right background for it)? If you’re trying to fit job titles into a list of recognized items so you can index them for search, sales, etc., how would you build a model that knows the nuances of all languages and cultures, and that doesn’t mistake an “Executive Assistant” for an executive, while recognizing that an Assistant Regional Manager is indeed a deputy to the regional manager?

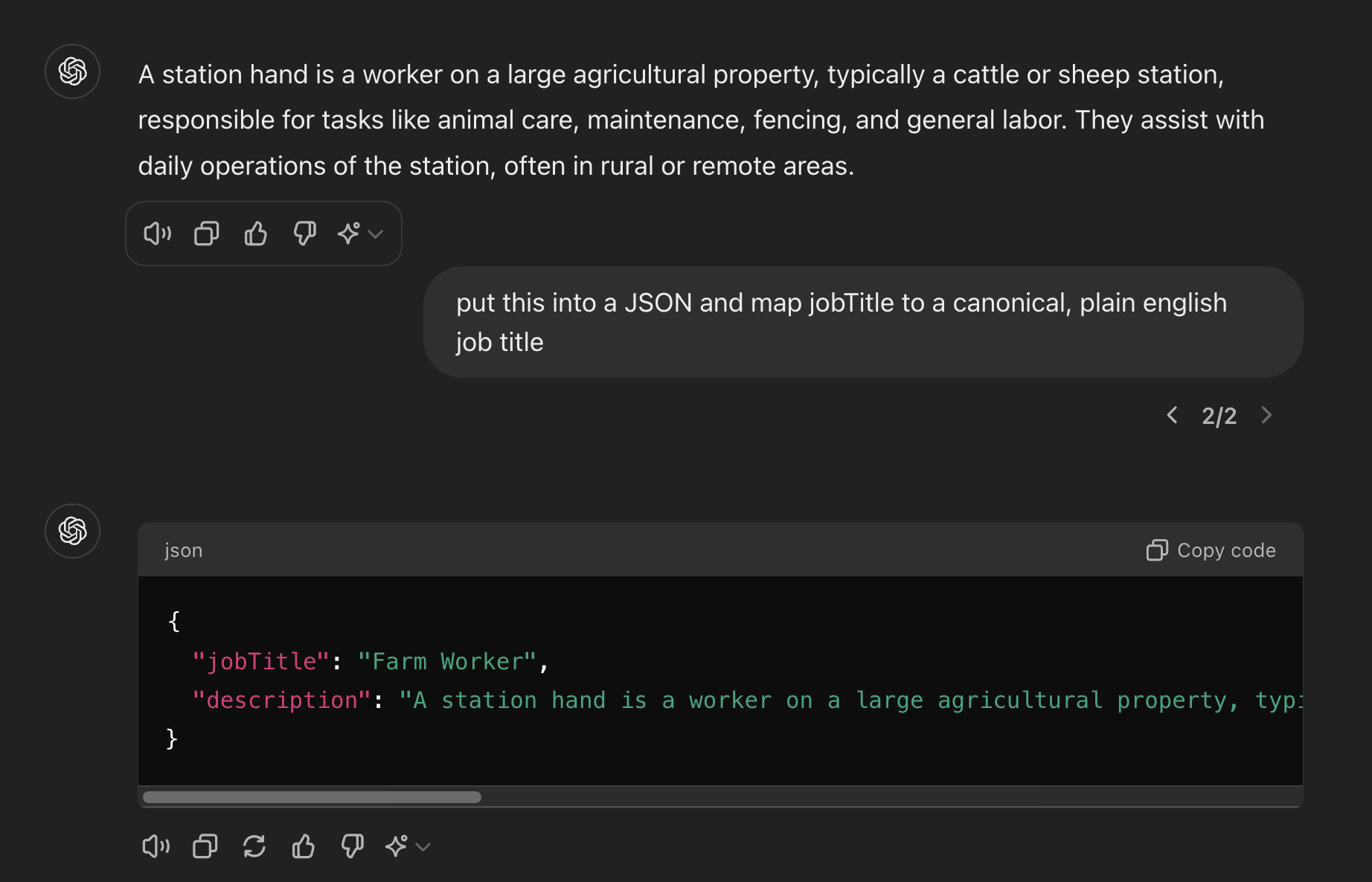

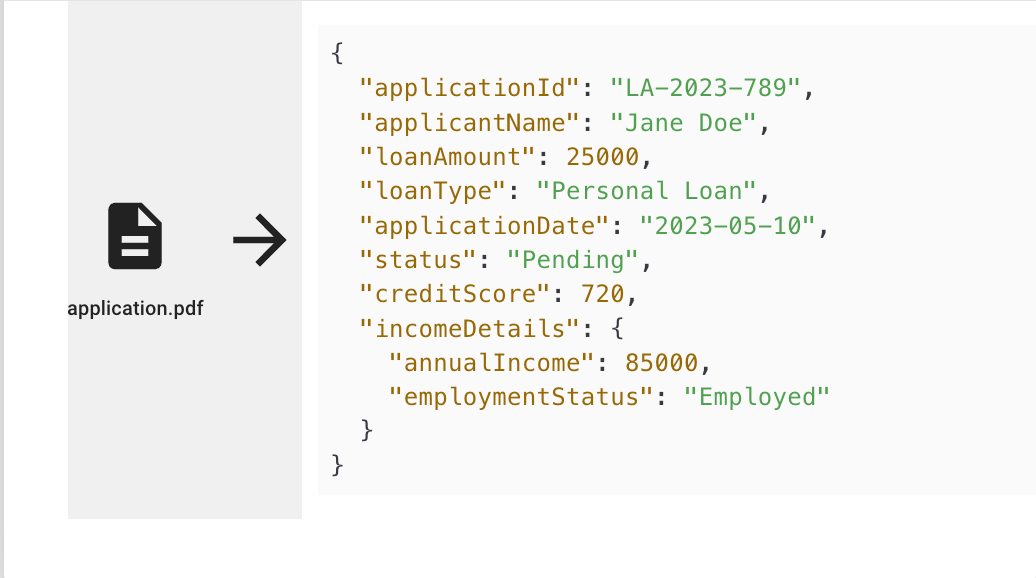

OK, so this is nice, but if I’m working for LinkedIn, I’ll need concrete data types. I want a JSON.


More work is needed to map job titles into a standard taxonomy - a finite list of acceptable predefined job titles. But you can see how something that was very hard in the past becomes trivial.
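To give a feel for that last step, here’s a minimal sketch of turning a model’s JSON reply into typed records and checking titles against a taxonomy. Everything in it is an assumption made for illustration: the field names (`raw_title`, `company`, `standard_title`), the tiny `STANDARD_TITLES` set, and the sample reply are hypothetical, and this is not how LinkedIn does it.

```python
import json
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical taxonomy; a real one would hold thousands of recognized titles.
STANDARD_TITLES = {"Software Engineer", "Executive Assistant", "Regional Manager", "Lawyer"}


@dataclass
class Position:
    raw_title: str                 # the title exactly as written on the résumé
    company: str
    standard_title: Optional[str]  # None when nothing in the taxonomy fits


def parse_positions(llm_reply: str) -> List[Position]:
    """Turn a JSON reply into typed records, dropping titles outside the taxonomy."""
    positions = []
    for item in json.loads(llm_reply):
        standard = item.get("standard_title")
        if standard not in STANDARD_TITLES:
            standard = None  # never accept a title the taxonomy does not contain
        positions.append(Position(item["raw_title"], item["company"], standard))
    return positions


# Hypothetical model reply, hard-coded so the sketch runs without any API.
reply = (
    '[{"raw_title": "Sr. SWE", "company": "Acme", "standard_title": "Software Engineer"},'
    ' {"raw_title": "Station Hand", "company": "Outback Farms", "standard_title": "Cowboy"}]'
)
positions = parse_positions(reply)
```

The point of the `None` fallback is that a title outside the finite list gets flagged for review rather than silently added to your index.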

Office Work Becomes an AI Playground

Reading résumés is a nice use case, but I don’t think it’s revolutionary. LinkedIn is a tech company and has always applied some of the sharpest razors to the problem. It can get somewhat better, but we’re only replacing one code automation process with another.

Things get much more interesting when you replace tedious manual labor. A giant chunk of the economy is based on people doing expert tasks that boil down to “reading a document, figuring out what it says, and repeating that process ad nauseam.”

Let me hit you with some examples:

- Expense management: You’ve got an invoice, and someone needs to turn it into a list of numbers: what was paid, to whom, and in what currency. Sounds easy? Not when it’s buried in a mess of extra info, incomplete tables, or PDFs that look like someone ran them through a blender.

- Healthcare claim processing: This one is a nightmare, and today it is solved by an army of healthcare claims adjudicators. They sift through mountains of invoices and clinician notes, a tangled mess full of duplicates, match it all to an existing health insurance policy, and figure out whether the charge is covered, under which category, and for what amount. But when you come down to it, it’s mostly just reading, sorting, and labeling. The decisions aren’t hard; it’s the data extraction that’s the challenge.

- Loan underwriting: Reviewing someone’s bank statements and categorizing their cash flow. Again, it’s more about structuring unstructured info than rocket science.

Glamorous? No. Useful? I think so.

Document Extraction is a Grounded Task

By now, LLMs are notorious for hallucinations, aka making shit up. But the reality is more nuanced: hallucinations are expected when you ask for world knowledge, but they are basically eliminated in a grounded task.

LLMs aren’t particularly good at assessing what they “know”; it’s more of a fortunate byproduct that they can do this at all, since they weren’t explicitly trained for it. Their primary training is to predict and complete text sequences. However, when an LLM is given a grounded task, one where only the input explicitly given to it is required to make a prediction, hallucination rates can be brought down to basically zero. For example, if you paste this blog post into ChatGPT and ask whether it explains how to take care of your pet ferret, the model will give the correct response essentially every time. The task becomes predictable. LLMs are adept at processing a chunk of text and predicting how a competent analyst would fill in the blanks, one of which might be {"ferret care discussed": false}.
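Here’s a rough sketch of what a grounded task can look like in code. It only builds the prompt and checks the reply; the call to an actual model is deliberately left out, and the function names, the prompt wording, and the `<document>` tags are all my own assumptions for illustration, not a prescribed format.

```python
import json


def build_grounded_prompt(document: str, question_key: str) -> str:
    """Wrap the document so the model is asked to answer only from the given text."""
    return (
        "Answer using ONLY the document between the <document> tags.\n"
        f'Reply with a JSON object of the form {{"{question_key}": true}} or '
        f'{{"{question_key}": false}} and nothing else.\n'
        f"<document>\n{document}\n</document>"
    )


def parse_boolean_answer(reply: str, question_key: str) -> bool:
    """Accept only a JSON object holding the expected key with a real boolean value."""
    value = json.loads(reply)[question_key]
    if not isinstance(value, bool):
        raise ValueError(f"expected true/false for {question_key!r}, got {value!r}")
    return value


prompt = build_grounded_prompt("LLMs are good at reading documents.", "ferret care discussed")
# The reply below is hard-coded; in practice it would come from whatever model client you use.
answer = parse_boolean_answer('{"ferret care discussed": false}', "ferret care discussed")
```

Checking the reply strictly means a malformed or off-format answer fails loudly instead of slipping into your data.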

As a former AI consultant, I worked on projects focused on extracting information from documents, particularly in industries like insurance and finance. The common fear was “LLMs hallucinate,” but in practice, the biggest challenges were often due to errors in extracting tables or other input inconsistencies. LLMs mostly fail when we fail to present them with clean, unambiguous input. There are two key components to successfully automating document processing:

- Perfect Text Extraction: This involves converting the document into clean, machine-readable text, including handling tables, handwritten notes, or varied layouts. The LLM needs clear, comprehensible text to work with.

- Robust Schemas: These schemas should define what outputs you’re looking for, how to handle edge cases, and the format of the data, ensuring the system knows exactly what to extract from each document type.

The feared risk of hallucination and the actual technical hurdles are often far apart, but with these two fundamentals in place, you can leverage LLMs effectively in document processing workflows.

Text extraction is trickier than first meets the eye

Here’s what causes LLMs to crash and burn and produce ridiculously bad outputs:

- The input has complex formatting, like a double-column layout, and you copy and paste the text (e.g. from a PDF) straight from left to right, taking sentences completely out of context.

- The input has checkboxes, checkmarks, or hand-scribbled annotations, and you missed them altogether in the conversion to text.

- Even worse: you thought you could get around converting to text, and hoped to just paste a picture of a document and have GPT reason about it on its own. THIS gets you into hallucination city. Just ask GPT to transcribe an image of a table with some empty cells and you’ll see it happily going apeshit and making stuff up willy-nilly.

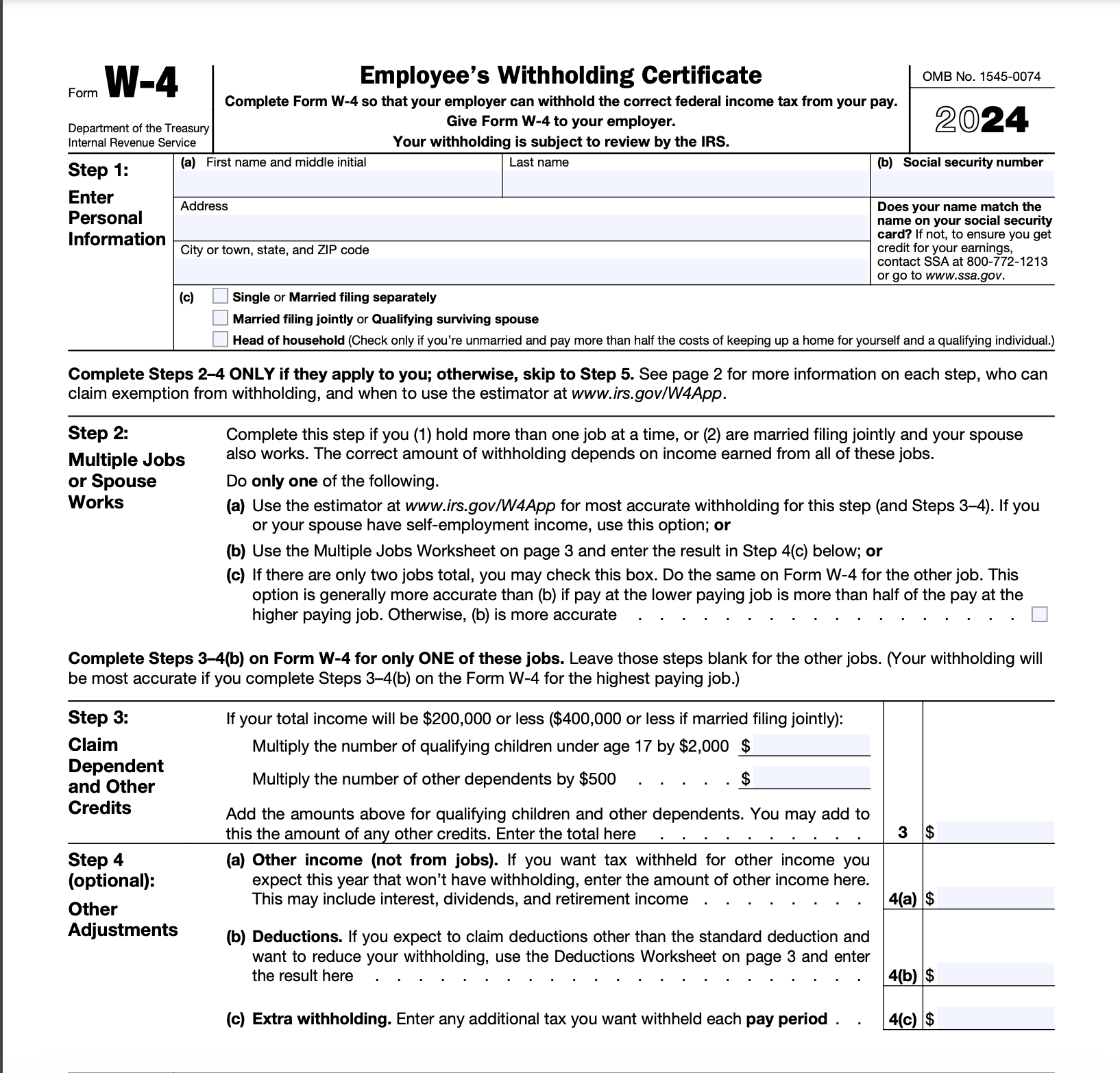
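The double-column failure from the list above is easy to reproduce with a toy example. The sketch below assumes you already have words with page coordinates (say, from an OCR engine or a PDF parser) and that you know where the column boundary is; both are simplifying assumptions, and the `Word` type and `column_split_x` parameter are hypothetical names I made up for the illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Word:
    text: str
    x: float  # left edge of the word on the page
    y: float  # top edge of the word; larger means lower on the page


def reading_order(words: List[Word], column_split_x: float) -> str:
    """Read the left column top to bottom, then the right column."""
    left = [w for w in words if w.x < column_split_x]
    right = [w for w in words if w.x >= column_split_x]
    ordered: List[Word] = []
    for column in (left, right):
        ordered.extend(sorted(column, key=lambda w: (w.y, w.x)))
    return " ".join(w.text for w in ordered)


words = [
    Word("Revenue", 50, 100), Word("Costs", 350, 100),
    Word("grew", 50, 120), Word("fell", 350, 120),
]

# Naive left-to-right, line-by-line reading interleaves the two columns.
naive = " ".join(w.text for w in sorted(words, key=lambda w: (w.y, w.x)))
fixed = reading_order(words, column_split_x=300)

print(naive)  # Revenue Costs grew fell
print(fixed)  # Revenue grew Costs fell
```

Real layouts need the column boundaries detected rather than handed in, but the failure mode is the same: the naive order produces sentences nobody wrote.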

It always helps to remember what a crazy mess goes on in real-world documents. Here’s a random tax form:

Of course, real tax forms have all these fields filled out, often in handwriting.

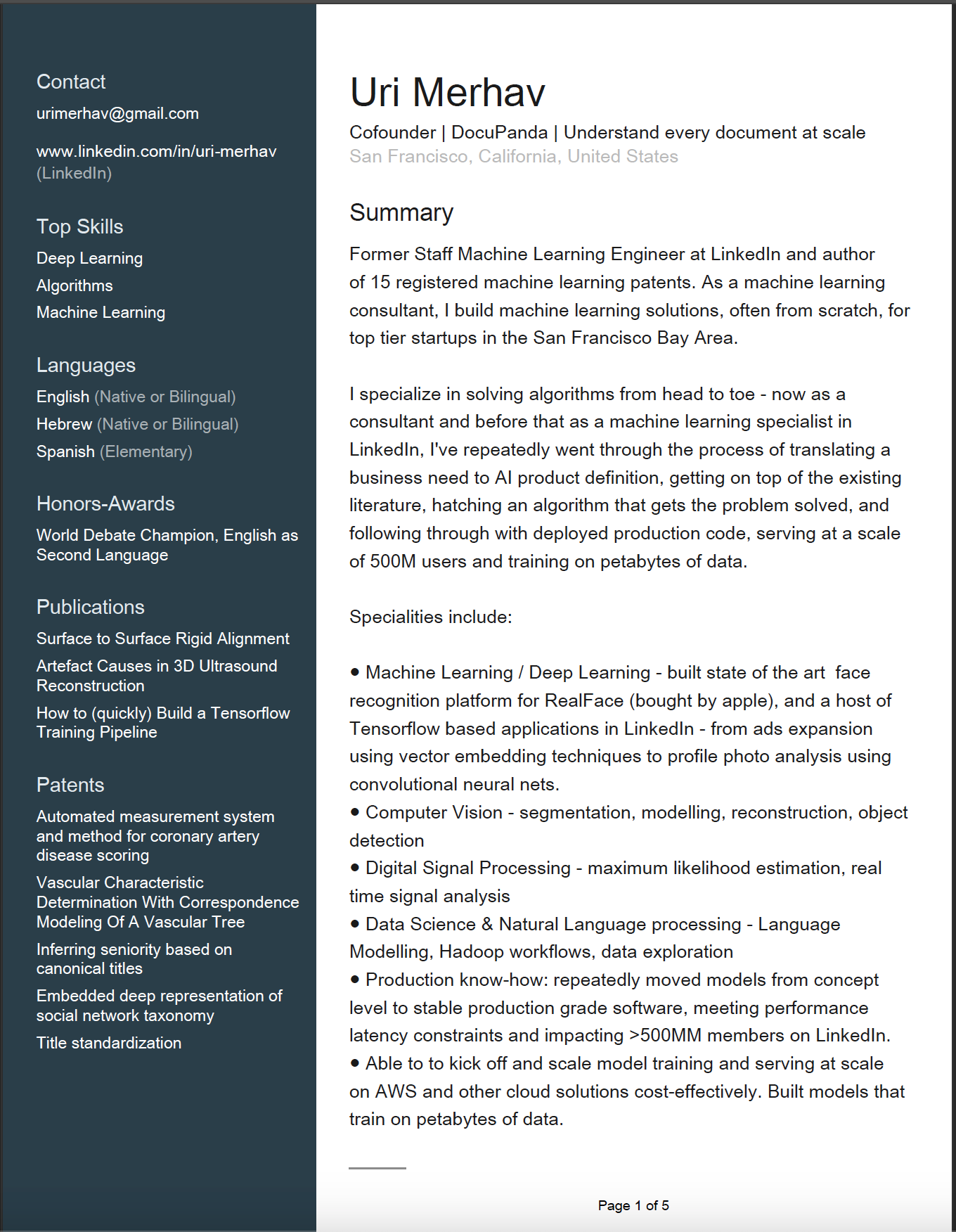

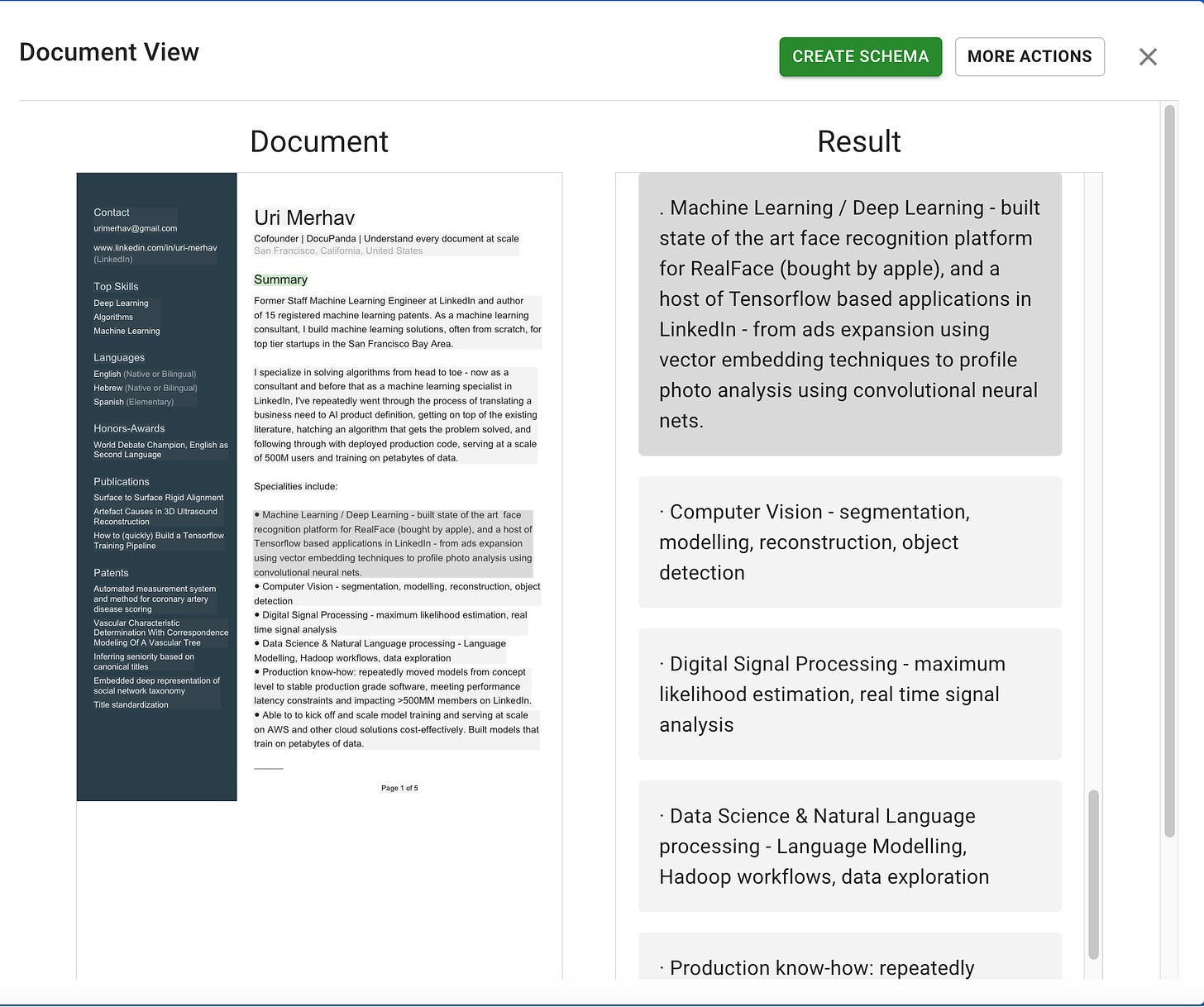

Or here’s my résumé:

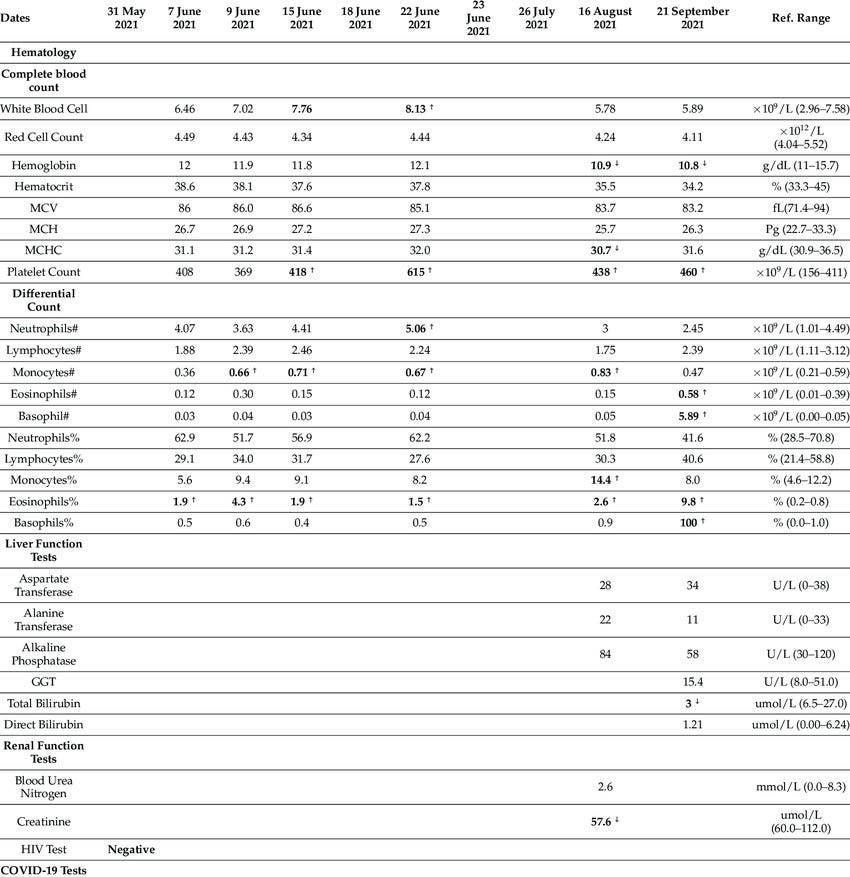

Or a publicly available example lab report (this is a front-page result from Google).

The absolute worst thing you can do, by the way, is ask GPT’s multimodal capabilities to transcribe a table. Try it if you dare: it looks right at first glance, but it absolutely makes random stuff up for some table cells, takes things completely out of context, and so on.

If something’s wrong with the world, build a SaaS company to fix it

When tasked with understanding these kinds of documents, my cofounder Nitai Dean and I were befuddled that there weren’t any off-the-shelf solutions for making sense of these texts.

Some products, like AWS Textract, claim to solve it. But they made numerous mistakes on every complex document we tested them on. Then you have the long tail of small necessary things, like recognizing checkmarks, radio buttons, crossed-out text, handwritten scribbles on a form, etc.

So, we built Docupanda.io, which first generates a clean text representation of any page you throw at it. On the left-hand side you’ll see the original document, and on the right, the text output.

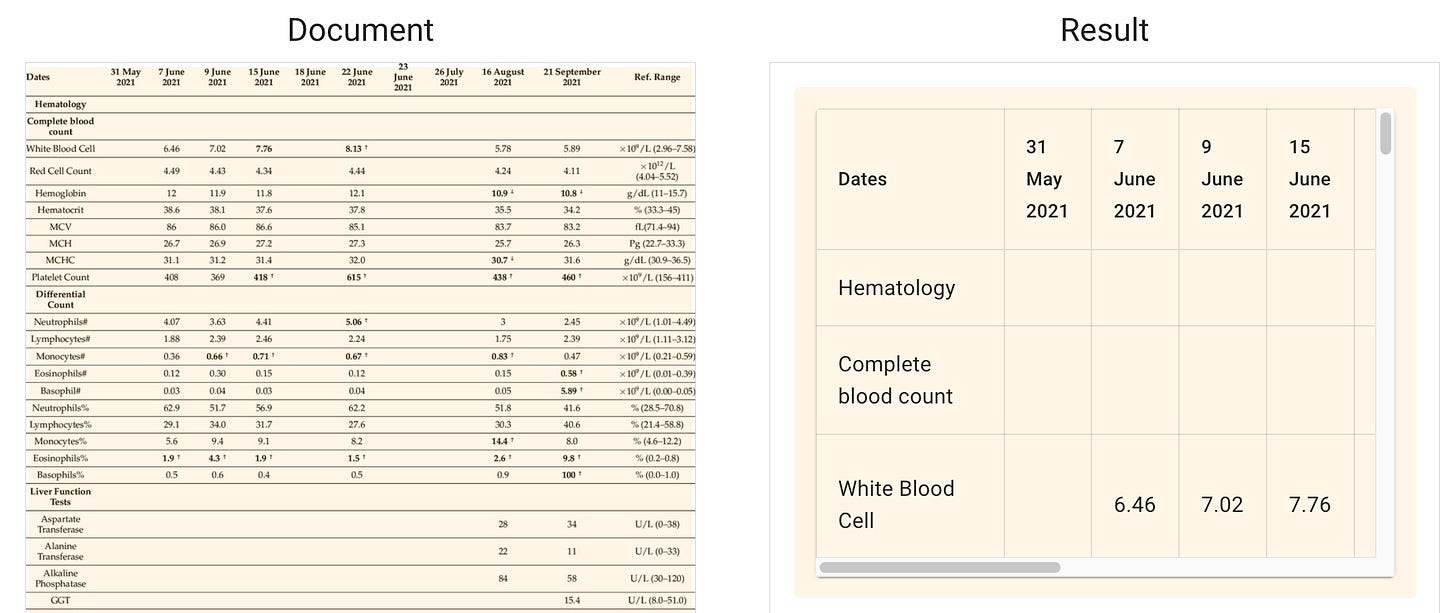

Tables are handled similarly. Under the hood, we just convert the tables into a markdown format that is readable by both humans and LLMs.
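As a rough illustration of what a markdown rendering of a table can look like, here’s a minimal sketch. It is not DocuPanda’s implementation; the `table_to_markdown` function and the sample invoice rows are hypothetical. The one design choice worth noticing is that an empty cell stays empty rather than being filled in with a guess.

```python
from typing import List, Optional


def table_to_markdown(header: List[str], rows: List[List[Optional[str]]]) -> str:
    """Render a table as markdown, keeping empty cells empty instead of guessing."""

    def render(cells: List[Optional[str]]) -> str:
        # Escape pipes so cell content cannot break the column structure.
        cleaned = [(c or "").replace("|", "\\|") for c in cells]
        return "| " + " | ".join(cleaned) + " |"

    lines = [render(header), "| " + " | ".join("---" for _ in header) + " |"]
    lines.extend(render(row) for row in rows)
    return "\n".join(lines)


markdown = table_to_markdown(
    ["Item", "Qty", "Price"],
    [["Widget", "2", "9.99"], ["Gadget", None, "4.50"]],  # None marks an empty cell
)
print(markdown)
```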

The last piece in making sense of data with LLMs is generating and adhering to rigid output formats. It’s great that we can make AI mold its output into JSON, but to apply rules, reasoning, queries, etc. to the data, we need to make it behave regularly. The data needs to conform to a predefined set of slots which we’ll fill up with content. In the data world, we call that a schema.

Building Schemas is a trial and error process… That an LLM can do

The reason we need a schema is that data is useless without regularity. If we’re processing patient records, and gender ends up as “male”, “Male”, “m” and “M”, we’re doing a terrible job.
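A tiny sketch of what that regularity means in practice: every spelling collapses onto one canonical value, and anything unrecognized becomes an explicit `None` instead of a new category. The alias table and function name are hypothetical, and the canonical values M/F/Other are just one reasonable choice.

```python
from typing import Optional

# Hypothetical mapping from raw spellings to canonical values.
GENDER_ALIASES = {
    "m": "M", "male": "M",
    "f": "F", "female": "F",
    "other": "Other",
}


def normalize_gender(raw: Optional[str]) -> Optional[str]:
    """Map free-form input onto M/F/Other; return None when missing or unrecognized."""
    if raw is None:
        return None
    return GENDER_ALIASES.get(raw.strip().lower())


values = ["male", "Male", "m", "M"]
normalized = {normalize_gender(v) for v in values}
print(normalized)  # {'M'}
```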

So how do you build a schema? In a textbook, you might build a schema by sitting long and hard, staring at the wall, and defining what you want to extract. You sit there, mull over your healthcare data operation, and go “I want to extract patient name, date, gender and their physician’s name. Oh, and gender must be M/F/Other.”

In real life, to define what to extract from documents, you freaking stare at your documents… a lot. You start with something like the above, but then you look at documents and see that one of them has a LIST of physicians instead of one. And some of them also list an address for the physicians. Some addresses have a unit number and a building number, so maybe you need a slot for that. On and on it goes.

What we came to realize is that defining exactly what you want to extract is genuinely difficult, and also very solvable with AI.

That’s a key piece of DocuPanda. Rather than just asking an LLM to improvise an output for every document, we’ve built a mechanism that lets you:

- Specify what things you need to get from a document in free language.

- Have our AI map over many documents and figure out a schema that answers all the questions and accommodates the kinks and irregularities observed in actual documents.

- Change the schema with feedback to adjust it to your business needs.

What you end up with is a powerful JSON schema: a template that says exactly what you want to extract from every document. It can be mapped over hundreds of thousands of documents, extracting answers from all of them while obeying rules like always extracting dates in the same format, respecting a set of predefined categories, etc.
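To make the idea of “rules” concrete, here’s a minimal sketch of a schema and a checker for the patient-record example. It is a simplified stand-in written with the standard library only, not real JSON Schema and not DocuPanda’s format; the field names, the date pattern, and the `validate` helper are all assumptions for illustration.

```python
import re
from typing import Any, Dict, List

# Hypothetical schema for the patient-record example; field names are illustrative.
PATIENT_SCHEMA: Dict[str, Dict[str, Any]] = {
    "patient_name": {"type": str},
    "visit_date": {"type": str, "pattern": r"^\d{4}-\d{2}-\d{2}$"},  # always YYYY-MM-DD
    "gender": {"type": str, "enum": ["M", "F", "Other"]},
    "physicians": {"type": list},  # a list, because some documents name several
}


def validate(record: Dict[str, Any], schema: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return human-readable problems; an empty list means the record conforms."""
    problems = []
    for field, rules in schema.items():
        if field not in record:
            problems.append(f"{field}: missing")
            continue
        value = record[field]
        if not isinstance(value, rules["type"]):
            problems.append(f"{field}: expected {rules['type'].__name__}")
            continue
        if "enum" in rules and value not in rules["enum"]:
            problems.append(f"{field}: {value!r} not in {rules['enum']}")
        if "pattern" in rules and not re.match(rules["pattern"], value):
            problems.append(f"{field}: {value!r} does not match the required format")
    return problems


good = {"patient_name": "Jane Doe", "visit_date": "2024-03-01",
        "gender": "F", "physicians": ["Dr. A", "Dr. B"]}
bad = {"patient_name": "Jane Doe", "visit_date": "03/01/2024",
       "gender": "female", "physicians": "Dr. A"}

print(validate(good, PATIENT_SCHEMA))  # []
print(validate(bad, PATIENT_SCHEMA))
```

In a real system you would more likely reach for a proper JSON Schema validator; the hand-rolled version just keeps the sketch dependency-free.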

Like with any rabbit hole, there’s always more stuff than first meets the eye. As time went by, we discovered that more things are needed:

- Often organizations have to deal with an incoming stream of anonymous documents, so we automatically classify them and decide what schema to apply to them.

- Documents are sometimes a concatenation of many documents, and you need an intelligent solution to break apart a very long document into its atomic, separate components.

- Querying for the right documents using the generated results is super useful.
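The splitting step can be sketched in a few lines once something has decided where each document starts. The hard part, deciding that a page begins a new document, is assumed away here: the `starts_new_document` flags are hypothetical and would come from a classifier or an LLM call, and this is not a description of how DocuPanda does it.

```python
from typing import List


def split_into_documents(pages: List[str], starts_new_document: List[bool]) -> List[List[str]]:
    """Group consecutive pages into documents, opening a new group at each flagged page."""
    if len(pages) != len(starts_new_document):
        raise ValueError("need exactly one flag per page")
    documents: List[List[str]] = []
    for page, is_start in zip(pages, starts_new_document):
        if is_start or not documents:
            documents.append([])  # the very first page always opens a document
        documents[-1].append(page)
    return documents


pages = ["invoice p1", "invoice p2", "lab report p1", "claim form p1", "claim form p2"]
flags = [True, False, True, True, False]  # hypothetical classifier output
documents = split_into_documents(pages, flags)
```

Each resulting group can then be classified on its own and routed to the schema that fits it.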


If there’s one takeaway from this post, it’s that you should look into harnessing LLMs to make sense of documents in a regular way. If there are two takeaways, the second is that you should also try out Docupanda.io. The reason I’m building it is that I believe in it. Maybe that’s a good enough reason to give it a go?


All Rights Reserved. Copyright , Central Coast Communications, Inc.