
Adding Random Horizontal Flipping Contributes To Augmentation-Induced Bias

:::info Authors:

(1) Athanasios Angelakis, Amsterdam University Medical Center, University of Amsterdam - Data Science Center, Amsterdam Public Health Research Institute, Amsterdam, Netherlands

(2) Andrey Rass, Den Haag, Netherlands.

:::

Table of Links

- Abstract and 1 Introduction

- 2 The Effect Of Data Augmentation-Induced Class-Specific Bias Is Influenced By Data, Regularization and Architecture

- 2.1 Data Augmentation Robustness Scouting

- 2.2 The Specifics Of Data Affect Augmentation-Induced Bias

- 2.3 Adding Random Horizontal Flipping Contributes To Augmentation-Induced Bias

- 2.4 Alternative Architectures Have Variable Effect On Augmentation-Induced Bias

- 3 Conclusion and Limitations, and References

- Appendices A-L

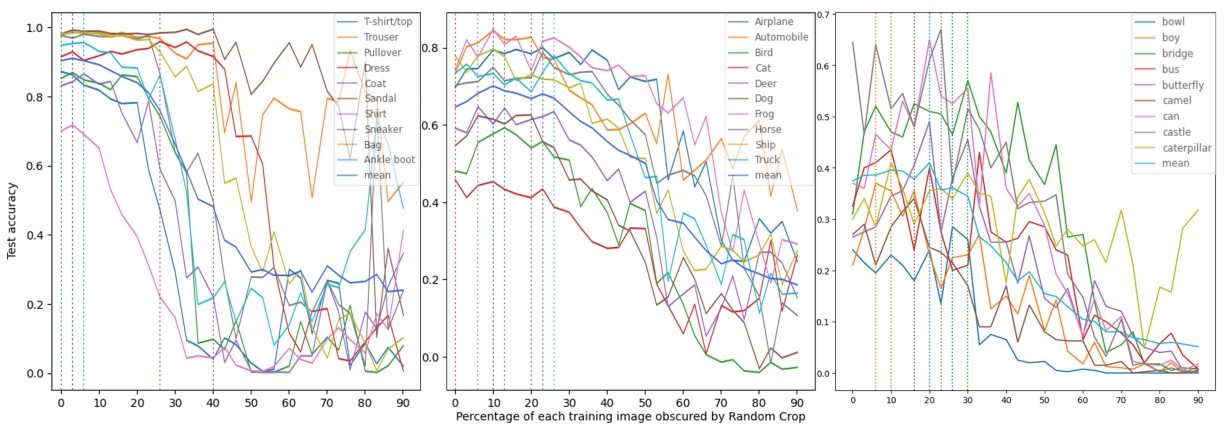

Because part of this work's goal was to confirm the effects of DA on the bias-variance trade-off of image classification problems reported by Balestriero, Bottou, and LeCun (2022), we also chose to examine the specifics of the DA policy implemented in the original paper. In particular, we felt that it overlooked the possible effects of its universal application of Random Horizontal Flipping (henceforth "RHF") as a supplemental DA. To investigate this, we once again conducted a series of experiments similar to those in Section 2.2, this time excluding RHF.
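The ablation described above amounts to toggling a single step in the augmentation pipeline. Below is a minimal pure-Python sketch under stated assumptions: images are toy square grayscale arrays stored as nested lists; α is read as the fraction of the image side removed by the crop (the exact parametrization used in the experiments may differ); the crop is not resized back to the input size; and RHF is applied with probability 0.5. The names `random_crop`, `horizontal_flip` and `augment` are hypothetical and are not taken from the authors' code.

```python
import random
from typing import List

Image = List[List[int]]  # toy grayscale image: a square list of pixel rows


def random_crop(image: Image, alpha: float, rng: random.Random) -> Image:
    """Crop a random square window whose side is (1 - alpha) of the original.

    Assumption: alpha in [0, 1) is the fraction of the side length removed.
    The cropped window is NOT resized back to the original size here.
    """
    size = len(image)
    side = max(1, round(size * (1 - alpha)))
    top = rng.randint(0, size - side)   # randint is inclusive on both ends
    left = rng.randint(0, size - side)
    return [row[left:left + side] for row in image[top:top + side]]


def horizontal_flip(image: Image) -> Image:
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def augment(image: Image, alpha: float, use_rhf: bool, rng: random.Random) -> Image:
    """Hypothetical DA policy: scaling random crop, optionally followed by RHF (p = 0.5).

    Setting use_rhf=False reproduces the "RHF excluded" condition of the ablation.
    """
    out = random_crop(image, alpha, rng)
    if use_rhf and rng.random() < 0.5:
        out = horizontal_flip(out)
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    img = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image
    cropped = augment(img, alpha=0.5, use_rhf=False, rng=rng)
    print(len(cropped), len(cropped[0]))  # → 2 2
```

In a real pipeline the cropped image would typically be resized or padded back to the network's input size. Keeping RHF behind a single `use_rhf` flag is what makes the with/without comparison an ablation of exactly one augmentation while the scaling crop stays identical.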

Our trials showed (see Figure 3 and appendices F, J and K) similar trends and results when compared to Section 2.1. However, as could be expected from removing a minor source of regularization such as RHF, overall mean performance was marginally worse across all three datasets. In addition, the thresholds of α (past which overall and class-specific performances begin to fall, and at which the best per-class and mean accuracies are reached) appear to have generally increased: for example, the α at which "Sandal" reaches its best performance rises to 40%, up from 36% in the previous section. RHF thus compounds with the scaling Random Cropping DA, acting as a "constant" source of additional regularization while preserving, if accelerating, the dynamics of test set accuracies as α grows. With this in mind, the findings of Balestriero, Bottou, and LeCun (2022) are validated, as the conclusions reached in that work would likely not have been affected had RHF been omitted.

While not gravely consequential, this finding should serve as a reminder that caution should be exercised when chaining multiple data augmentations together. Although such an approach is standard practice in contemporary computer vision tasks, it can rapidly increase complexity, and controlling for the influence of a given augmentation on class-specific bias may become difficult.
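Comparing where each class peaks across an α sweep, with and without RHF, can be sketched as follows. This assumes per-class test accuracies have already been collected for every α value. The data layout, the function names `best_alpha_per_class` and `threshold_shift`, and the tie-breaking rule (the earliest α in the sweep wins) are illustrative assumptions, and the numbers in the demo are toy values rather than results from the paper.

```python
from typing import Dict, List, Tuple

# sweep[class_name] holds that class's test accuracy for each alpha, in sweep order
Sweep = Dict[str, List[float]]


def best_alpha_per_class(alphas: List[float], sweep: Sweep) -> Dict[str, Tuple[float, float]]:
    """Return {class: (alpha at peak accuracy, peak accuracy)}.

    max() returns the first maximal index, so ties go to the earliest alpha.
    """
    result = {}
    for cls, accs in sweep.items():
        if len(accs) != len(alphas):
            raise ValueError(f"{cls}: expected {len(alphas)} accuracies, got {len(accs)}")
        best_idx = max(range(len(accs)), key=lambda i: accs[i])
        result[cls] = (alphas[best_idx], accs[best_idx])
    return result


def threshold_shift(alphas: List[float], with_rhf: Sweep, without_rhf: Sweep) -> Dict[str, float]:
    """Change in each class's peak-accuracy alpha when RHF is removed.

    Positive values mean the peak moves to a larger alpha without RHF.
    """
    a = best_alpha_per_class(alphas, with_rhf)
    b = best_alpha_per_class(alphas, without_rhf)
    return {cls: b[cls][0] - a[cls][0] for cls in a if cls in b}


if __name__ == "__main__":
    alphas = [0, 20, 40, 60]  # toy sweep, alpha in percent
    with_rhf = {"class_a": [0.90, 0.92, 0.91, 0.85]}  # toy numbers, not paper results
    without_rhf = {"class_a": [0.89, 0.90, 0.91, 0.84]}
    print(threshold_shift(alphas, with_rhf, without_rhf))  # → {'class_a': 20}
```

Running the same comparison for every class, and for each additional augmentation that is toggled, is one way to keep track of which component of a chained DA policy moves a given class's threshold.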


:::info This paper is available on arxiv under CC BY 4.0 DEED license.

:::
