and the distribution of digital products.

How to Craft Inclusive AI Prompts That Mitigate Bias

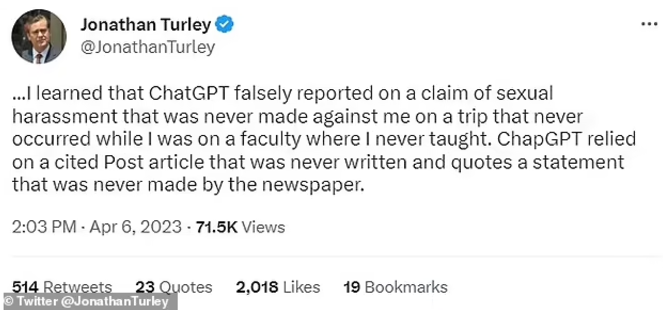

Remember Amazon's sexist AI recruiting tool that favored men over women? Or the sexual harassment scandal ChatGPT invented about a law professor? These are two cases, among many, of AI perpetuating bias.

Often, AI bias originates in how models are trained. But we must also acknowledge that ==prompts significantly shape AI behaviors.==

What users feed AI models influences their responses. While addressing bias needs structural efforts across systems, prompts offer users direct control.

Well-crafted prompts promote equitable outputs by ==establishing guardrails against prejudice==. They guide AI towards diversity and awareness.

Checking our language, asking for thoughtfulness, adding context - these best practices make a difference.

This guide aims to give AI users and prompt writers of all levels practical ways to craft better, bias-mitigating prompts that limit exclusion.

First, What Makes an AI Prompt Inclusive?

==An AI prompt is a text or voice input that instructs an AI model==. Prompts, generally, are the guiding forces that shape AI assistant outputs. Here is an example:

We often build on a prompt’s instruction to directly influence what information our AI model accesses, how it frames understanding, and the attitudes it reflects back. Check:

Crafted properly, ==prompts can expand AI’s knowledge and compassion== - constructed poorly, they perpetuate biases. See below:

==An inclusive AI prompt is a subset of AI prompts that explicitly guides AI systems towards equitable, aware responses for diversity.== An example here:

Thoughtful prompts like the above affirm values such as empowerment and humanity while avoiding assumptions. ==Inclusive language leads to nuanced perspectives==.
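To make the distinction concrete, here is a minimal Python sketch of how an inclusive prompt can build on a plain one. The task, the guidance text, and the `make_inclusive` helper are all made up for illustration; they are assumptions, not a prescribed wording.

```python
# Hypothetical plain prompt, used only for illustration.
BASE_PROMPT = "Write a short job ad for a software engineer."

# Hypothetical guidance text; adapt the wording to your own context.
INCLUSIVE_GUIDANCE = (
    "Use gender-neutral language, avoid assumptions about age, ability, "
    "or background, and describe only the skills the role requires."
)


def make_inclusive(prompt: str, guidance: str = INCLUSIVE_GUIDANCE) -> str:
    """Append explicit inclusion guidance to a plain prompt."""
    return f"{prompt.strip()}\n\n{guidance}"


inclusive_prompt = make_inclusive(BASE_PROMPT)
print(inclusive_prompt)
```

The plain prompt leaves the model to fill in its own defaults, while the second version states the values it should follow.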

Why Should You Care About Inclusive AI Prompts?

Promoting inclusive prompts challenges us to ==use our seat at the keyboard to consciously lift underrepresented voices in technology==.

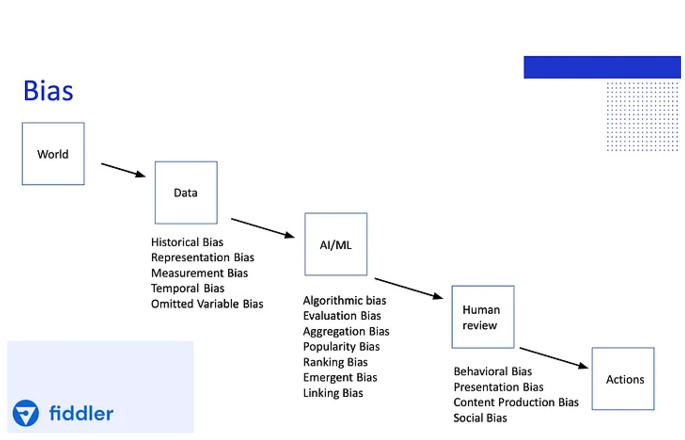

According to a NIST report, bias in AI systems can stem from the data, the algorithms, and ==the societal context in which they are used==. See:

A study by USC researchers found that up to 38.6% of the "facts" used by AI systems are biased. This finding suggests that bias in AI is a serious and widespread problem, one that can affect millions of people around the world.

And unless we mitigate it, says Francesca Rossi, IBM AI Ethics Global Leader, we will face systems that are unfair, inaccurate, and unreliable.

How to Create Inclusive AI Prompts That Respect Diversity (With Examples)

==AI systems learn from the data we expose them to==. This includes the wording and framing of our prompts. Crafting inclusive prompts that respect diversity is essential for mitigating bias in model responses.

Here are techniques any prompt engineer can use to promote diversity:

1. Provide Contextual Details and Relevant Information

When interacting with AI systems, providing contextual details and relevant information is crucial for mitigating bias and ensuring inclusive, respectful responses.

Without proper context, AI lacks the understanding of nuanced situations needed to avoid problematic assumptions.

==To provide context, just be detailed==.

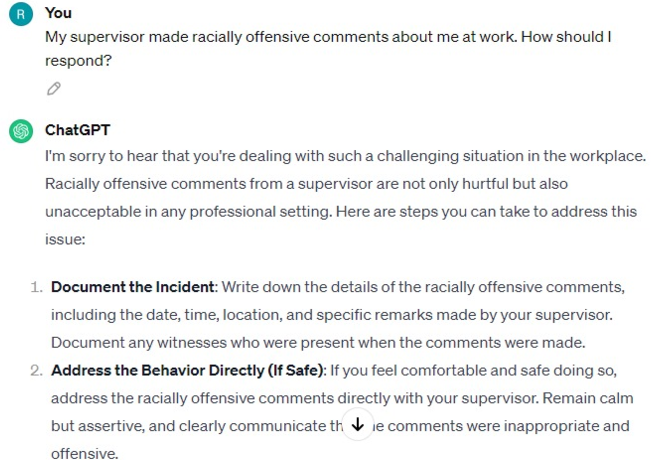

For example, imagine someone hurt you with their words and you describe it to ChatGPT this way:

The response contains naive assumptions and generic advice that may not apply to your situation or could make it worse.

The response focuses heavily on giving the benefit of the doubt through "considering context" and "educating" the offender when, in reality, the remark could have been intentionally malicious.

To improve, ==let's add some context like the identity of the parties involved and the nature of the offensive remark== while keeping the details minimal and relevant.

Here is an updated prompt:

We get much more applicable advice grounded in handling discrimination issues.

You can see the ==emphasis on documentation, reporting procedures, legal rights/protections, and the emotional impact of racism.==

However, details on the context and pattern of the behavior are still lacking. For instance, a one-off ignorant statement would warrant a different approach than repeated, intentional harassment.

And without knowing if others were also targeted or witnessed it, the interpersonal dynamics are unclear. We still run the risk of putting too much burden on the victim if the organization fails to take adequate action.

So, let's provide more helpful context with this prompt:

We get more empathy and more helpful instructions on how to handle discrimination.

==Providing detailed context enables AI to give targeted, practical advice for the exact situation and not a generic, one-size-fits-all response==.

Rather than making assumptions, AI can use context to account for nuances and complexities in its recommendations. This helps ensure the advice is thoughtful and constructive, and avoids causing unintentional harm.

While this example centers on abusive workplace behavior, the need for detailed context applies widely - whether to a pitch deck, a workplace issue, or another interpersonal situation.
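One way to make "be detailed" repeatable is to treat context as a small set of named fields and assemble the prompt from whichever ones you can fill in. The sketch below is a minimal illustration in Python; the `SituationContext` fields, the `build_prompt` helper, and the sample values are all hypothetical, not taken from the prompts discussed in this section.

```python
from dataclasses import dataclass


@dataclass
class SituationContext:
    """Hypothetical context fields; leave a field empty to omit it."""
    parties: str          # who was involved
    remark: str           # the nature of what was said
    pattern: str = ""     # one-off or repeated behavior
    witnesses: str = ""   # whether others saw it or were also targeted
    goal: str = ""        # the outcome you are hoping for


def build_prompt(question: str, ctx: SituationContext) -> str:
    """Attach only the context details that were actually provided."""
    details = [
        ("Who was involved", ctx.parties),
        ("What was said", ctx.remark),
        ("How often it has happened", ctx.pattern),
        ("Who else saw or was affected", ctx.witnesses),
        ("What I want to achieve", ctx.goal),
    ]
    lines = [f"- {label}: {value}" for label, value in details if value]
    return question + "\n\nContext:\n" + "\n".join(lines)


# Made-up sample values, for illustration only.
question = "How should I respond to an offensive remark at work?"
ctx = SituationContext(
    parties="a colleague on my team and me",
    remark="a derogatory comment about my accent",
    pattern="the third time this month",
)
prompt = build_prompt(question, ctx)
print(prompt)
```

Empty fields are skipped, so the prompt grows only as more detail is supplied.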

2. Use Respectful and Inclusive Language and Terminology

==The words we use shape how AI systems perceive the world==.

Insensitive or outdated language leads to biased, problematic model responses, even when it is used inadvertently. Using respectful, inclusive terminology is essential for mitigating assumptions and ensuring AI promotes diversity.

Language reflects and reinforces societal attitudes and norms. If we input prompts with non-inclusive phrasing, AI responds in kind by mirroring harmful biases.

==Gendered language reinforces the gender binary. Ableist terminology diminishes people with disabilities. And racial slurs promote discrimination.==

The terminology we choose acts as a lens for how AI interprets situations and frames recommendations. Say we have the prompt:

ChatGPT responds in kind. The use of non-inclusive terms like "confined to a wheelchair" and "handicap accessible" promotes outdated, insensitive assumptions about people with disabilities. It paints them as helpless victims rather than empowered individuals.

The response also places an undue burden on the non-disabled coworker to advocate on their behalf instead of directing the facilities manager to consult the disabled employee directly about their needs.

While the recommendations provide helpful accessibility considerations, the underlying language perpetuates paternalistic stereotypes. It implies people with disabilities should be "accommodated" when, in reality, accessibility benefits everyone and should be the default.

Using respectful, people-first language like "wheelchair user" and "accessible" fosters inclusiveness without reliance on assumptions.

Consider the following prompt:

The updated prompt's use of inclusive language allows for a more respectful, ethical response from ChatGPT. The difference is glaring:

- =="Coworker who uses a wheelchair" replaces "coworker confined to a wheelchair" to focus on the person first.==

- =="Lack of accessibility features" instead of "handicap accessibility" frames access as a right.==

- =="Expressed frustrations" gives the coworker agency rather than speaking for them.==

- =="Improving accessibility" promotes inclusion for all.==

By framing the coworker as facing barriers rather than being inherently disabled, the prompt leads ChatGPT to focus its solutions on universal accessibility rather than charitable accommodation.
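A simple pre-send check can catch such terms before a prompt reaches the model. The following is a minimal Python sketch, assuming a hand-maintained term list; the two entries come from the wheelchair example above, while the `flag_terms` helper and the sample draft are hypothetical.

```python
import re

# Term pairs drawn from the wheelchair example above.
# This list is illustrative, not exhaustive; extend it for your own use.
REPLACEMENTS = {
    "confined to a wheelchair": "who uses a wheelchair",
    "handicap accessible": "accessible",
}


def flag_terms(prompt: str) -> list[tuple[str, str]]:
    """Return (term, suggested alternative) pairs found in the prompt."""
    found = []
    for term, alternative in REPLACEMENTS.items():
        # Case-insensitive literal match on the listed phrase.
        if re.search(re.escape(term), prompt, flags=re.IGNORECASE):
            found.append((term, alternative))
    return found


# Made-up draft prompt, for illustration only.
draft = (
    "My coworker is confined to a wheelchair and our office "
    "is not handicap accessible. What should I do?"
)
for term, alternative in flag_terms(draft):
    print(f'Consider replacing "{term}" with "{alternative}".')
```

A list like this only catches known phrases, so it supplements a careful read of the prompt and does not replace one.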

3. Avoid Stereotypes and Assumptions

Feeding stereotypes into AI leads to biased outputs. When prompts make broad generalizations about groups, models respond based on limited assumptions rather than real-world complexities.

For example, a generalization like "Asians are good at math" may seem harmless. But it trains AI to make decisions rooted in reductive race-based schemas rather than individual merits and needs.

Also, performance-based prompts that start with "As a woman…" cue gender stereotypes. Instead of considering the individual's specific skills, AI provides generalized perspectives of women.

==One small stereotype scales quickly across the vast datasets used to train models. Soon that "tiny" bias warps AI systems deployed in everything from resume screening to financial lending==.

We must catch ourselves when prompts veer into assumptions or oversimplifications about social groups. In reality, no individual fits a stereotype neatly.

Here is one prompt. Notice any stereotypes?

The prompt plays into the assumption that older adults inherently struggle with technology use due to mental or physical decline. This ==risks reinforcing ageist stereotypes of technological ineptitude, overlooking immense diversity within older demographics.==

Though the tips provided by ChatGPT generally sound like digital literacy advice, the tone is overly simplistic.

Phrases like "be patient", "start with the basics", and "use simple language" convey low expectations rooted in ageist stereotypes about declining cognitive abilities.

An improved prompt could be:

The new prompt avoids blanket assumptions about older adults. It focuses solutions on ==providing general new user advice== rather than age-specific accommodation.

This allows ChatGPT to respond inclusively with universal guidance that empowers self-directed learning.
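Catching these framings by eye takes practice, so a rough automated check can serve as a first pass. The Python sketch below is a heuristic under stated assumptions: its two patterns are modeled only on the "Asians are good at math" and "As a woman…" examples above, the `find_generalizations` helper is hypothetical, and it will miss most stereotypes while occasionally flagging harmless sentences.

```python
import re

# Heuristic patterns modeled on the two examples above.
# They are illustrative only and far from a complete stereotype detector.
PATTERNS = {
    "group generalization": re.compile(
        r"\b[A-Za-z]+s are (?:naturally |always )?(?:good|bad) at\b",
        re.IGNORECASE,
    ),
    "identity-based framing": re.compile(
        r"\bas an? (?:woman|man)\b",
        re.IGNORECASE,
    ),
}


def find_generalizations(prompt: str) -> list[str]:
    """Return the labels of every pattern that matches the prompt."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(prompt)]


# Made-up prompts, for illustration only.
for text in (
    "Asians are good at math, so who should lead the analysis?",
    "As a woman, how should I negotiate my salary?",
    "How should I negotiate my salary?",
):
    print(text, "->", find_generalizations(text))
```

A flagged prompt is a cue to ask whether the group label is needed at all, or whether the individual's actual skills and needs would serve the request better.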

4. Upvote and Downvote AI Prompt Responses

==Almost all chatbots allow users to upvote and downvote prompt responses. This provides key feedback for AI systems and helps train models on what content is high or low quality, useful or harmful.==

However, voting also impacts what biases persist. Discriminatory tendencies can unintentionally be reinforced if users downvote certain demographic perspectives more harshly. Responsible participation matters.

==We must be fair and apply consistent standards when judging AI responses==. This allows the technology to progress ethically by uplifting inclusive answers and respecting diversity.

Below are ways to promote fairness and social progress when providing user feedback to AI systems:

- Provide fair, reasoned feedback.
  - Do not reflexively downvote responses you disagree with
  - Clearly explain any issues using neutral, constructive language

- Check biases when voting.
  - Be mindful that all user perspectives bring value
  - Avoid allowing personal biases to over-influence voting

- Reward helpful, inclusive answers.
  - Upvote well-reasoned responses respecting diversity
  - Highlight useful advice benefitting society

- Advocate responsible improvement.
  - Downvote only if an answer promotes harm
  - Request additional model training on flagged areas

User feedback shapes AI systems. ==Upvoting inclusive responses trains models to promote unbiased advice. Downvoting harmful content steers algorithms away from discrimination.==

But this influence depends entirely on participation practices. If users apply double standards along gender, racial, or other lines, so will AI.

Fair, thoughtful input - not reflexive votes - leads to ethical progress.
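For teams that collect feedback in their own tools, the "explain before you downvote" habit can be enforced in code. This is a minimal Python sketch of a feedback record; the `Feedback` class and `validate` helper are hypothetical and do not correspond to any chatbot's actual feedback API.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    """Hypothetical feedback record for one AI response."""
    response_id: str
    vote: int          # +1 for an upvote, -1 for a downvote
    reason: str = ""   # neutral, constructive explanation


def validate(feedback: Feedback) -> Feedback:
    """Reject invalid votes and downvotes that come without a reason."""
    if feedback.vote not in (1, -1):
        raise ValueError("vote must be +1 or -1")
    if feedback.vote == -1 and not feedback.reason.strip():
        raise ValueError("explain the issue before downvoting")
    return feedback


# Made-up records, for illustration only.
validate(Feedback("resp-1", 1))
validate(Feedback("resp-2", -1, reason="Relies on an age-based stereotype."))
```

Requiring a written reason slows down reflexive downvotes and leaves a record that can be checked later for double standards.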

Conclusion

==Crafting ethical, inclusive AI prompts is a collaborative effort. Our individual word choices and framing decisions collectively impact model training over time==.

Being a thoughtful prompt engineer requires conscious self-awareness. We must catch our own assumptions and overgeneralizations. Providing contextual details, using inclusive language, and avoiding stereotypes - these matter.

The goal is to prompt AI that is not just technically sound but socially responsible. The technology promises immense progress across sectors. However, unchecked bias perpetuates real-world discrimination and harm.

To paraphrase Renee Cummings, an AI ethicist:

Guiding AI to uplift diversity empowers both human and machine potential.


All Rights Reserved. Copyright , Central Coast Communications, Inc.